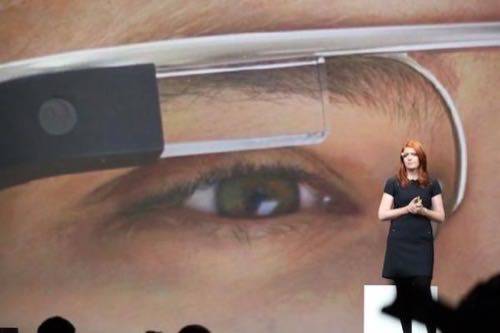

Wearable computing is poised to hit the mainstream. Last week, Google demoed its Project Glass prototype, an Android-powered display, camera, GPS locator and Internet node built into a bulky pair of glasses. On Thursday, Olympus announced its own Terminator specs. They may look silly, but they’re the beginning of a new era of computing.

Olympus’ MEG4.0 connects to smartphones and other devices via Bluetooth and displays data and imagery directly on its lenses. It’s comparable to what Google demoed last week, although Google Glass packs more computing power and has a built-in camera. Olympus’ product has a more limited feature set and, as VentureBeat argues, is notably uglier. More important than any feature-for-feature comparison, however, is what both products represent for how humans interact with computers.

Let’s be honest. Even if Google’s computer glasses are prettier than Olympus’, neither one will enhance your outfit like a pair of Oakleys.

No doubt a select group of early adopters will snatch up these nerd goggles, and they’ll surely be spotted on the sidewalks of San Francisco. Outside the Silicon Valley area, though, the first person to show up at a local watering hole wearing them can expect stares, if not outright mockery. Such is the lot of an early adopter at the very start of an era in which humans begin to merge with machines.

Beyond looking bizarre, these gadgets raise a host of social questions. Cell phone addiction is already an issue for many people, some of whom ignore their companions in favor of peering into tiny screens. When the screens hover in front of our eyes, does the line between the virtual and physical worlds become sharper or blurrier? What happens to our attention spans?

As with other advances in personal technology, this one will evolve depending on where and how it’s integrated into our lives. We’re still figuring this out with smartphones and tablets. For wearable displays, use cases such as navigation and on-the-go communication seem obvious and appropriate. But what about social situations? At the office? The dinner table?

An Awkward Moment, But Still a Pivotal Era

Awkward as this moment may be, we’re entering a new and important era in human-machine interaction. Wearable computing joins voice control and gesture-based interfaces in revolutionizing how people interact with data, services and content.

Google’s awe-inspiring demo last week was a first step toward social acceptance of this type of technology. The next steps will involve the slow proliferation of such devices and refinements to the products themselves. The more these things look like normal glasses, the more likely the average consumer is to consider wearing them. There’s no hard evidence that Apple will enter this space, but if it does, the design problem may solve itself.

The questions raised by this new era are important, and we’ll work them out in due time. But one of them is not “Is this going to happen?” It is happening, and it’s probably only the next of many steps toward a sci-fi world in which the line between people and gadgets grows harder and harder to draw.