GPS-based Augmented Reality is great for a mobile game like Ingress, but it won’t help you fix your car.

New software from PAR Works has a visual take on Augmented Reality, bringing the benefits of the concept to an entirely new class of applications. It might even work its way into gaming, too.

Current implementations of AR usually tie into your phone or tablet’s location features (GPS, compass, etc.) to determine exactly what to show you. In some cases, like an interactive subway map, this is exactly what you need, but there are times when you want something more precise – or times when location doesn’t matter at all.

Companies like Aurasma have built some really interesting AR applications on 2D picture recognition, and they have the potential to transform marketing.

But we live in a 3D world. Imagine being able to tag 3D objects and have your overlays viewable from any angle. That’s what PAR Works claims its Mobile Augmented Reality Solution (MARS) can do.

What MARS Does

The video above simulates an application an automaker might ship with its cars. Open the hood, start your camera and tap anywhere for support information. Turn the phone upside-down, lean in from the side, or take a shot from beneath the car, and it still works.

There are plenty of applications for this sort of technology, usually centered on large, fixed objects that could be viewed from multiple angles. Imagine a virtual tour for an installation like the Space Shuttle Endeavour that worked from any angle and any distance. Or a construction site that allowed workers to pull specific schematics with a click, regardless of whether they were two blocks away or inside the building.

How MARS Works

MARS’ bread and butter is the way it translates 2D images into 3D point cloud models (the type you normally get when you put something through a 3D scanner).

Creating a MARS overlay goes like this:

- Upload 20-30 2D images of a building, object or location, taken from different angles.

- In about 2-3 minutes, MARS renders those images into a 3D model. This happens on the back end, and you never see the model.

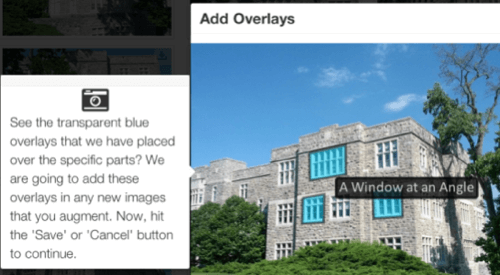

- Choose one or more of your 2D images and tag as many hot spots as you want with URLs or other data.

- MARS applies those zones to its model, and the user can now view AR content from any angle.
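
PAR Works hasn't published how MARS maps hot spots onto new views, so the following is only a rough sketch of the general idea, not its actual method. It assumes a standard pinhole camera model: once a hot spot has a position in the 3D model, it can be projected into any camera view whose pose is known. The function name `project_point` and all of its parameters are hypothetical and chosen for illustration.

```python
def project_point(point, rotation, translation, focal, cx, cy):
    """Project a 3D model point into pixel coordinates (pinhole camera).

    Hypothetical illustration only; not the MARS API.
    point:       (x, y, z) of the hot spot in model coordinates
    rotation:    3x3 nested list, model-to-camera rotation
    translation: (tx, ty, tz), model-to-camera translation
    focal:       focal length in pixels
    cx, cy:      principal point (usually the image center) in pixels
    """
    # Move the point into the camera's coordinate frame: R * p + t.
    x, y, z = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None  # The point is behind the camera, so it is not visible.
    # Perspective divide, then shift to the image's pixel origin.
    return (focal * x / z + cx, focal * y / z + cy)


IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# A hot spot two units straight ahead lands on the image center.
center = project_point((0, 0, 2), IDENTITY, (0, 0, 0), 800, 320, 240)
```

Change the rotation and translation to represent a different viewpoint and the same tagged point lands at a different pixel, which is the basic reason a tag made on one photo can follow the object from other angles.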

MARS’ Limitations – And Its Potential

For industrial and commercial uses, it’s a great idea. For most consumer apps, including gaming, it’s pretty limited on its own. In an online scavenger hunt, for example, users could fake out the system by snapping a picture of a photograph. And if you wanted to create a MARS-enabled version of something like Ingress, good luck uploading 20 or more images for each of your thousands of portals.

Of course, the MARS system isn’t designed to stand alone. Developers still have access to all of the phone’s other functionality, so they could choose to combine location-based services with PAR Works’ visual model. A scavenger hunt or a “sniper” game might require visual confirmation plus physical proximity within a viewing distance, allowing users to find creative ways of targeting a tough-to-find objective.
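
As a rough sketch of that combination, a game might accept a hit only when a visual match and a GPS proximity check both pass. Everything here is an assumption for illustration: `visual_match` stands in for whatever result a visual recognition call would return, `target_confirmed` is a made-up helper, and the distance check uses the ordinary haversine formula rather than anything specific to PAR Works.

```python
import math

EARTH_RADIUS_M = 6371000.0  # Mean Earth radius in meters.


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def target_confirmed(visual_match, player, target, max_distance_m):
    """Hypothetical rule: require a visual match AND physical proximity.

    visual_match:   bool, assumed to come from a visual recognition step
    player, target: (lat, lon) tuples in degrees
    """
    return visual_match and haversine_m(*player, *target) <= max_distance_m


times_square = (40.7580, -73.9855)
nearby = target_confirmed(True, times_square, times_square, 100)
```

Requiring both signals would also blunt the photograph trick: a picture of a picture might pass the visual check, but not from the wrong side of town.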

A tourist app might query your general location, then pull down all visual maps matching “Times Square” so you can search for restaurants by storefront. And for that Ingress-like game, you could always distribute the load, letting users upload photos and create their own hot zones.

MARS’ Future?

MARS certainly won’t be a cure-all for all developers’ AR ills, but it looks like it might be a powerful tool. Until January 31, 2013, PAR Works is running a $25,000 developer contest, and it has some 250 coders in the program. It will be interesting to see where developers take the MARS platform.