Yesterday we held our first live chat on the changing nature of virtual storage. With me on the chat line were Wen Yu, an infrastructure technologist from VMware, and several folks from NetApp: Jean Banko, a product marketing manager; Vaughn Stewart, the director of cloud computing and an active blogger; and Julian Cates, a virtualization systems and storage architect. We had a lively discussion that ranged across several topics, and I will try to distill the highlights for you in this post. Of course, you are welcome to scroll through the chat yourself below.

Storage Management

Many of the questions concerned storage management, starting with how to pick the right size for your storage repository. Cates said, “If you don’t know the right size datastore to deploy, it helps if you deploy to an infrastructure that allows for dynamic online resizing in either direction.” Good point. Stewart mentioned three keys to figuring this out:

- The storage admin must be able to create and publish storage resource pools (or service catalogs)

- Admins should be able to consume and manage the published storage resources on demand

- The platform must provide a means to automatically respond to unexpected changes in a shared and virtualized workload

NAS or SAN?

Should your first storage array be network-attached storage (NAS) or part of a storage area network (SAN)? Panelists had a few choice words to say on that, and also about products (such as those from NetApp) that allow you to migrate from one architecture to the other. Cates, for example, said, “There may be some low end virtualization projects that don’t absolutely require these features (like vMotion, DRS, HA, SRM, etc.) or the performance and resiliency that shared storage brings.”

Any modern SAN can do thin provisioning, which allocates physical space only as data is written and so lets you overcommit storage resources. Our panelists disagreed about how it is implemented and about its advantages. “When thin provisioning is enabled, be sure to set the appropriate threshold to send any warning when the physical volumes are running near capacity,” said Yu.
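To make Yu's advice concrete, here is a minimal Python sketch of the arithmetic behind a capacity warning on a thin-provisioned pool. It is only an illustration: the `ThinPool` class, the `needs_warning` function, the sample figures, and the 80% default threshold are all assumptions made for this post, not part of any VMware or NetApp API.

```python
from dataclasses import dataclass


@dataclass
class ThinPool:
    """Hypothetical model of a thin-provisioned pool (not a vendor API)."""
    physical_gb: float     # real capacity backing the pool
    provisioned_gb: float  # total size promised to VMs and datastores
    used_gb: float         # physical space actually consumed so far

    def overcommit_ratio(self) -> float:
        # Greater than 1.0 means more space is promised than physically exists.
        return self.provisioned_gb / self.physical_gb

    def used_fraction(self) -> float:
        return self.used_gb / self.physical_gb


def needs_warning(pool: ThinPool, threshold: float = 0.80) -> bool:
    """Return True once physical usage reaches the warning threshold.

    The 0.80 default is an assumed value; choose one that fits your growth rate.
    """
    return pool.used_fraction() >= threshold


# Sample figures, invented for illustration.
pool = ThinPool(physical_gb=1000, provisioned_gb=2500, used_gb=850)
print(f"overcommit: {pool.overcommit_ratio():.1f}x, used: {pool.used_fraction():.0%}")
if needs_warning(pool):
    print("WARNING: physical volumes are running near capacity")
```

The check compares consumed space against physical capacity rather than provisioned capacity, because an overcommitted pool runs out of real disk long before the provisioned figure is reached.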

But Stewart cautioned, “Thin provisioning suffers from the storing of data that has been deleted in the file systems of Guests (VMs) and datastores. vSphere 5 will eliminate this ‘storage overhead’ when a VM is removed from a VMFS datastore and NetApp’s VSC vCenter plug-in eliminate this ‘storage overhead’ in the file system of the guest. Today we support space reclamation of data stored in NTFS in Windows VMs stored on NFS datastores.”

Scripting tools

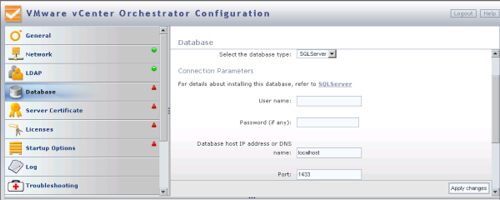

One question came from a reader interested in scripting tools to make storage management easier. Yu said, “Both VMware and NetApp have open public API/framework for infrastructure automation. If you are looking to invest in scripting solutions, then I’d recommend for you to take a look at orchestration solutions that have been integrated into the VMware and NetApp solutions. For example: Cloupia, Newscale, and the latest vCenter Orchestrator solution (shown above) that has a NetApp storage provisioning plugin.”

We took several quick polls during the course of the chat and the results were interesting. Most of the chat participants (62%) have implemented deduplication technologies, and another 15% plan to do so in the near future. And more people have virtualized individual servers than the entire network infrastructure that connects them. Finally, when we asked what management tools people were using, “none” was a very popular answer, which was surprising (or perhaps not, given that many people were on the chat line to learn more about these tools).

Thanks to everyone for participating in the chat. We’ll be doing more of these next month, so watch for our announcements.