On Friday, a massive outage occurred at Amazon Web Services that generated a wave of negativity and criticism in the blogosphere. Not long ago, Rackspace, one of the world’s largest hosting companies, experienced an outage that resulted in a similar reaction. When the backbone collapses, so do our favorite services. This makes us mad. It makes us say things like: well, maybe we shouldn’t be using the cloud. Or things like: why can’t we get 99% uptime? Or: isn’t this what an SLA is for?

Software and hardware, like any system, can never be perfect. When power outages happen we get frustrated, but we understand that this is a fact of life. Any sufficiently complex system, like a power grid or Amazon Web Services, is bound to go down. There is little that can be done to assure us that it never will. Because of this, single outages are not good measures of quality of service.

Albert Wenger said something to me recently that stuck in my head: We live in a stochastic world, but people fail to grasp it because all they experience is right now.

So is it really true – is cloud computing a bad idea? Of course not. It is a wonderful, powerful idea. In this post, we explore the ideas behind cloud computing and argue that it will be an integral part of our future.

Clouds vs. LAMPs

This generation of web services got their start from LAMP – a stack of simple,

yet powerful technologies that to this day is behind a lot of popular web sites. The beauty of LAMP is in its simplicity; it makes it very easy to get a

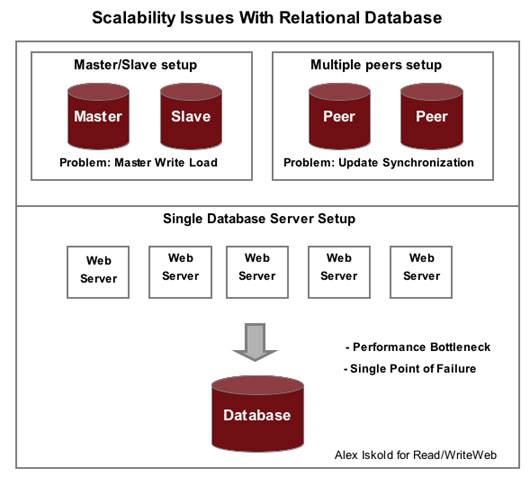

prototype out the door. The problem with LAMP is in its scalability. The first scalability issue is fairly minor – threads and socket connections

of the Apache web server. When load increases and configuration is not tuned properly you might run into problems. But the

second problem with LAMP is far more significant: the MySQL relational database is the ultimate bottleneck of the system.

Relational databases are just not good at growing beyond a certain capacity because of the way they represent information. And so when you reach a certain scale, they become difficult to manage. A way around it is a technique called data partitioning. If it is possible to split your data into N independent sets, then you can scale with the LAMP approach indefinitely. But if this is not the case, then your only option is to abandon the relational database for a distributed one. And this is the path through which you break into the clouds.
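To make data partitioning concrete, here is a minimal sketch in Python. It assumes each record is identified by a key (a user ID, say) and that records for different keys never need to be joined. The host names and the `shard_for` / `host_for_user` helpers are hypothetical, made up for illustration.

```python
import hashlib

# Hypothetical database hosts, one per independent partition (N = 3 here).
SHARDS = [
    "db0.example.internal",
    "db1.example.internal",
    "db2.example.internal",
]


def shard_for(key: str, num_shards: int) -> int:
    """Map a record key to one of num_shards partitions using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_shards


def host_for_user(user_id: str) -> str:
    """Pick the database host that owns all rows for this user."""
    return SHARDS[shard_for(user_id, len(SHARDS))]
```

Every query for a given user goes to the same host, so each MySQL instance only sees its own slice of the data. One caveat of this simple modulo scheme is that changing N remaps most keys, which is part of why partitioning only works well when the data really does split into independent sets.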

The Basics of Cloud Computing

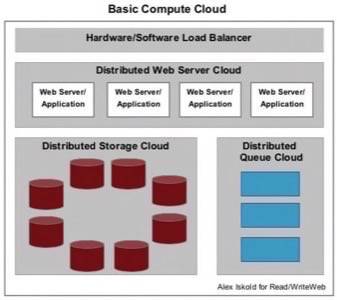

The idea behind cloud computing is simple – scale your application by deploying it on a large grid of commodity hardware boxes. Each box has exactly the same system installed and behaves like all other boxes. The load balancer forwards a request to any one box and it is processed in a stateless manner, meaning the request is followed by an immediate response and no state is held by the system. The beauty of the cloud is in its scalability – you scale by simply adding more boxes.
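The load balancer and stateless boxes described above can be sketched in a few lines of Python. This is a toy model, not how any particular provider does it: the `handle` function and `RoundRobinBalancer` class are hypothetical, and round-robin is just one possible forwarding policy.

```python
import itertools


def handle(box_id: int, request: str) -> str:
    """Stateless handler: the response depends only on the request,
    never on which box runs it or on any earlier request."""
    return request.upper()


class RoundRobinBalancer:
    """Forwards each request to the next box in turn."""

    def __init__(self, num_boxes: int):
        self.num_boxes = num_boxes
        self._next_box = itertools.cycle(range(num_boxes))

    def forward(self, request: str):
        box_id = next(self._next_box)
        return box_id, handle(box_id, request)
```

Because `handle` keeps no state, any box can serve any request, and scaling up amounts to constructing the balancer with a larger `num_boxes`.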

In the diagram above, the compute cloud consists of three basic elements: a web server/application layer, a distributed storage layer, and a distributed queue layer. Each one of these layers is a cloud itself – meaning that boxes are all identical and perform the same function. In the simplest scenario, the web tier is the same as the bits in the LAMP stack. The web server can still be Apache and it can be running PHP code – the application. The fundamentally different bit is the database, which is no longer MySQL, but instead a distributed storage system like Amazon S3, Amazon SimpleDB, or Amazon Dynamo. The queue piece is optional, but it is needed in cases when real-time handling is impossible or not necessary.

The real advantage of the cloud is its ability to support on-demand business computing. An application written to run on the cloud scales from 1,000 users to 10,000 and then to 10,000,000 just by expanding the number of boxes. From a business perspective this is very attractive because it is easy to calculate growth and scalability costs.
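As a rough illustration of why the cost math is easy, the sketch below assumes capacity grows linearly with the number of boxes. The figures of 500 users per box and 70 dollars per box per month are made-up placeholders, not real prices, and the function names are hypothetical.

```python
def boxes_needed(users: int, users_per_box: int) -> int:
    """Number of identical boxes required, rounding up to a whole box."""
    return -(-users // users_per_box)  # ceiling division on integers


def monthly_cost(users: int, users_per_box: int, dollars_per_box: int) -> int:
    """Monthly bill under the linear-scaling assumption."""
    return boxes_needed(users, users_per_box) * dollars_per_box


# Assumed, illustrative numbers only.
USERS_PER_BOX = 500
DOLLARS_PER_BOX = 70

for users in (1_000, 10_000, 10_000_000):
    print(users, boxes_needed(users, USERS_PER_BOX),
          monthly_cost(users, USERS_PER_BOX, DOLLARS_PER_BOX))
```

Under these assumptions, going from 1,000 to 10,000,000 users means going from 2 boxes to 20,000, and the bill grows by the same factor. Real workloads rarely scale perfectly linearly, so treat this as a planning estimate.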

Do Clouds Really Work?

You bet! The best example is Google. The king of the web is reigning with a farm of hundreds of thousands, if not millions, of boxes. To race along with the web, Google constantly increases the size of its cloud, incorporating new web sites and expanding its index.

Of course, Google isn’t the only one operating in a cloud. All major web players including Amazon, eBay, Yahoo! and Facebook are running some sort of massive computing cloud. Amazon in particular has been perfecting the art for the past fifteen years. The company has world-class expertise and top-notch talent in distributed computing, led by CTO Werner Vogels. Obviously, it is not an accident that Amazon is making a major bet and launching into the web services infrastructure vertical. They believe that clouds will be the future of computing, that they can make a business out of it, and that they can do it better than you can do it on your own.

You vs. Them

Every time we have an outage, like the one that happened on Friday, people sit back and think: How can I possibly rely on these guys? I bet I can just code this up myself and it will be fine! For decades the software industry has been suffering from the ‘I can do this better’ disease. We keep re-inventing programming languages, we keep on re-writing the APIs, and we keep thinking that we’re smarter than the guys who came before us. 99.9% of the time we are wrong. The truth is that we cannot do it better than Amazon. They spent a massive amount of money, talent and, most importantly, time trying to solve this problem. To think that a startup can replicate this in a matter of months, and that the result will be cost-effective and work properly, is just absurd. Large-scale computing is an enormously complex problem that takes even the best and brightest engineers years to get right.

In this day and age, build vs. buy is a complete no-brainer, especially for startups. Whatever is part of your core business you build. Everything else you buy. If your business really does require a custom cloud solution, then you have to build it. But the chances that the Amazon Web Services stack, once fully built out, would not fit your needs are slim to none. By focusing on what truly makes you unique and different you have the chance to beat the competition. Otherwise, if you keep on reinventing the wheel, you won’t have the time and resources to advance your real product.

SLA vs. Common Sense

But maybe last week’s failure is not about clouds but about SLAs (Service Level Agreements)? If the SLA says that you will be up 99.99% of the time, then how can you go down for 3 hours? But here’s the truth about SLAs. Whatever they say, they still don’t mean that the service is not going to go down. You can’t prevent power grid outages and you cannot prevent cloud outages. You can take all the precautions and backups, but still you cannot be completely certain that failure will not occur. First order catastrophes happen.

So the problem is that we should not be looking at the SLA, but instead we need to consider common sense. It is not a single failure of the system that is indicative of the performance. It is the frequency of failures that we should look for. If AWS goes down once a year each year for 3 hours, then it is nothing short of cloud computing paradise. If it happens every quarter, it’s alarming; every month, unacceptable. The point is, as Albert Wenger explained, we need to think about this stochastically.
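To put numbers on the frequency argument, the sketch below converts hours of downtime per year into an availability figure and converts an SLA percentage back into a downtime budget. It assumes a 365-day year and 3-hour outages as in the scenarios above; the function names are hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def availability(downtime_hours_per_year: float) -> float:
    """Fraction of the year the service is up."""
    return 1 - downtime_hours_per_year / HOURS_PER_YEAR


def allowed_downtime_minutes(sla: float) -> float:
    """Minutes of downtime per year permitted by an SLA such as 0.9999."""
    return (1 - sla) * HOURS_PER_YEAR * 60


# One 3-hour outage per year, per quarter, and per month.
for label, outages in (("yearly", 1), ("quarterly", 4), ("monthly", 12)):
    print(label, f"{availability(3 * outages):.3%}")

print(f"{allowed_downtime_minutes(0.9999):.1f} minutes per year at 99.99%")
```

One 3-hour outage a year works out to roughly 99.97% availability, quarterly outages to about 99.86%, and monthly outages to about 99.59%. A 99.99% SLA, by comparison, allows only about 53 minutes of downtime a year, so even the "paradise" scenario falls short of the number on paper, which is exactly why the trend matters more than any single incident.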

Yes, it is difficult for people to be off the grid. Yes, it is difficult to explain to our users why we are down. But we need to be transparent about our abilities here – we are all humans working as hard as we can to make things work. Everybody gets that. The crux of the problem is transparency.

Any company that wants to own our hearts, ignite our imaginations, and power the next generation of our computing infrastructure needs to be transparent. If there is a problem, come out and say it. Put the sign up: “we are working hard on fixing it.” Email the developers: “hey guys, something is seriously wrong on our end, we are investigating and will keep you posted.” Transparency and openness from infrastructure providers, from the company all the way down to the consumer, is the key to having peace of mind. Because everyone knows that when we apply ourselves in a genuine and passionate way, there are no problems that we cannot solve – the cloud will be up and running shortly.

The Future: Through the Clouds into the Sky

The incident last week is in no way going to deter cloud computing or even Amazon Web Services.

We are witnessing a fundamental shift in our ability to compute and this is just the beginning.

Amazon is at the forefront of making massively parallel, web scale compute services available to the world.

Free from the need to solve the scalability problems, startups are able to focus on the specific problems that their product or service is trying to solve.

All of this is happening while the cost of hardware, bandwidth and services overall keeps dropping.

Truly, we are reaching for the sky through the computing clouds.