It’s called engagement. It’s the catchphrase behind one of ReadWriteWeb’s top technologies of the year, and it’s emerging as one of the newest concepts on the floor of CES 2012 this week. When Salesforce.com introduced Radian6 last year, the cogs must have been turning in the back of ad executives’ minds: how could viewers tweeting to each other be leveraged as an engagement tool?

Specifically, what if people tweeting about a show became integrated into the show? Could servers mining those tweets conceivably glean viewer reactions? And could engaging with the various tweeters lead to a new and more direct way to deliver ads?

One way or the other, every year since well back into the ’90s, CES has tried to make “the future” about the set-top box (STB), the device you use to change your channels. Despite the rise of the Web as a content delivery tool, nearly every advance in interactive technology has, at some point, been leveraged as a potential channel-changer for a CES demo. This year is absolutely no exception.

We’ve seen voice interaction before, especially Nuance’s Dragon speech recognition software, which made headway on Android last year and was expected to become competitive on iOS until Apple unveiled Siri. This year, Nuance (which acquired Swype last October) is introducing Dragon to makers of TV firmware, at LVCC South 2, booth MP25356.

One of the demos CES attendees are likely to see involves TV viewers using Dragon speech recognition to tweet from their chairs. Imagine speaking into a nearby microphone, with a service that generates the necessary abbreviations and hashtags for you.

In an effort to attract developers, Nuance made its NDEV SDK available for free last September. And last month, to help clear the way for itself, Nuance acquired Vlingo, which makes a multi-platform virtual assistant mobile app that competes with Apple’s Siri, and whose voice patent portfolio was once in direct competition with Nuance’s own. Last year at CES, Vlingo was one of the names that came up in discussions about using mobile voice recognition technologies to control media devices.

So what we’re seeing is a pairing, in lieu of an outright convergence, between mobile technology and STB controllers. It may still take years to integrate live, broadband-speed connectivity into STBs and into HDTVs themselves. But where that upstream bandwidth is lacking, smartphones could fill in the gap. The result would be a platform for engaging TV viewers in interactive apps that get their live feedback, that get them talking with one another, and that provide servers with data that can be leveraged for ratings and analytics purposes – not just, “What are you watching?” but “Do you like it?”

Couple this with the emergence of a new viewer engagement platform called yap.TV, which has been trying to make a name for itself by converting iPads and iPhones into fully interactive remote controls. For an app to deliver real-time feedback to an iOS device in sync with the feed on the TV, it helps if the app can listen to the audio from that feed.

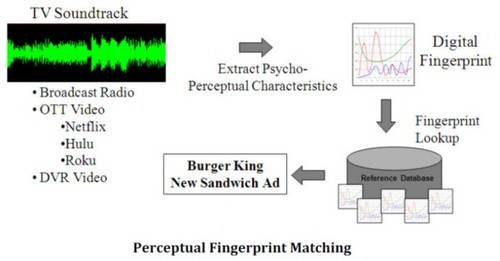

So today, yap.TV announced that it will partner with a firm called Audible Magic (Bellini Ballroom – 73507), which develops audio fingerprinting applications for identifying TV programs. The idea is to enable apps running on another platform (for instance, on a mobile phone, or inside an HDTV whose content is supplied by an outside STB) to identify the program currently playing, so they can communicate its ID back to the server.

We’ve seen Audible Magic at CES before; in fact, just last year, in a similar-sounding partnership with a firm called Trickplay. This time, Audible Magic is looking to make further deals, not just with companies in yap.TV’s space, but also with manufacturers of service-provider-hosted equipment such as STBs.

Diagram of one use case for Audible Magic content recognition (CR) technology. From an Audible Magic white paper, June 2011.

An Audible Magic white paper explains a multitude of use cases. Here’s one: “A best-selling suspense novelist is being interviewed on TV. A viewer is watching the show ‘time-shifted’ and doesn’t view the commercials. Yet an app on the smart TV uses [content recognition] to recognize the program and offers to take the viewer to an ‘Amazon-like’ experience – clicking to read additional reviews of the book, comments from other fans, and perhaps the novel’s first couple of pages all posted on a box on the TV screen. Then, if still interested, the viewer clicks to authorize a ‘One-Click Buy,’ automatically paying online for the book and the shipping.”

What will likely emerge from all this collaboration is some type of social engagement platform that, either directly through your remote or indirectly through your smartphone, links viewers who talk to each other while the show is on (which used to be considered a bad habit) with a service that mines those conversations for data. That service can then respond to the data with in-sync advertisements, perhaps with promotions (“You said you like what Britney’s wearing! Would you like $10 off?”) and, conceivably, surveys (“What do you like best about Coke Zero?”). Already, yap.TV has made deals with content providers such as USA Network (part of NBCUniversal) for what’s being called “second-screen experiences” in conjunction with shows such as “Psych” and “Burn Notice.”

Audible Magic suggests that such a platform could give advertisers a rational alternative to placing a growing number of ads on an expanding number of vertical channels. Audience fragmentation, the company argues, is making direct TV ads less attractive, since each one can reach only a limited, narrowly targeted audience. An alternative, interactive ad platform could act like buckshot, scattering any number of ads across a broader base of viewers. “Eventually,” the company says, “all the devices we use to watch and interact with video may have some form of content recognition technology embedded in them.”