Maybe you’ve wanted to control your big-screen TV with your smartphone for years, even though the idea has been a nonstarter for most of that time. Now Microsoft, which insists that it sees large-screen computing devices playing a dominant role in the home and workplace, says it will make that a reality.

On Tuesday, Microsoft kicked off TechFest, a research fair of sorts where the company’s engineers emerge from their darkened labs and reveal their notion of the tech future and, perhaps more important, how we’re going to get there.

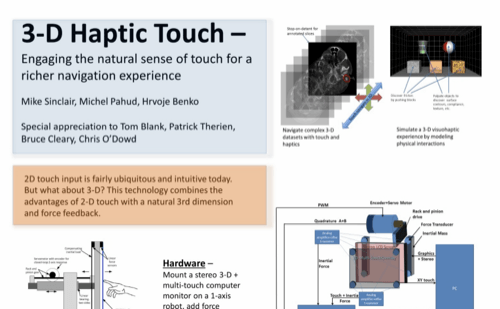

Over twenty projects will be on display, including older exhibits such as one that lets you animate household objects using your body. One of the most significant presentations came from senior researcher Michel Pahud, who showed off how users could interact with large-screen displays, either directly or using their phone.

Why is this important? Consider the following concept video, which shows how Microsoft imagines users interacting with massive interactive displays projected onto walls and ceilings. It’s a showcase for what Microsoft calls “natural user interfaces,” or ways of interacting with computers via touch, voice and gestures instead of a keyboard. (Microsoft has authored similar videos before, such as the “smart glass” concept from 2009 and a similar video in 2011 that showcased holograms.)

The problem with controlling displays such as TVs via smartphone is that the phone is usually sitting right next to a remote control — a purpose-built, and often superior, device.

Buying and installing a wall-sized display might seem ludicrous right now, but let’s bear with Microsoft for a moment; this is the future, after all. What Pahud’s video shows us, though, is how close this future is to reality.

Some of what Pahud describes may seem familiar. Placing a finger on the screen opens a “palette” of available options next to it, similar to the radial menu that Microsoft included in its OneNote application. If the user spreads his fingers, the palette expands to include new options. Part of this could be enabled with a touch display; alternatively, a Kinect sensor could be used to “see” how a user is actually interacting with the display.

When a user approaches with a Windows smartphone, the phone syncs with the display. When the user is close to the display, the phone shows the palette options. Farther away, the phone shifts into a “remote control” mode, presenting a keyboard and allowing the user to search and control via his voice.

“So in conclusion we have been looking at the strengths of the large display, the strengths of the phone, and combine them together as a society of appliances,” Pahud said.

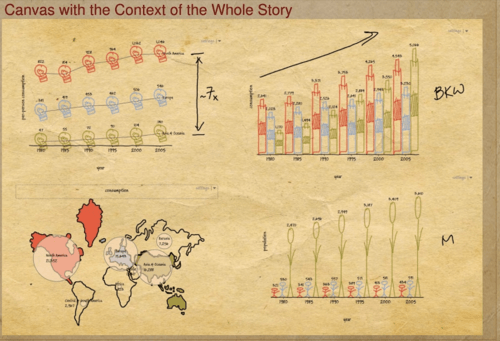

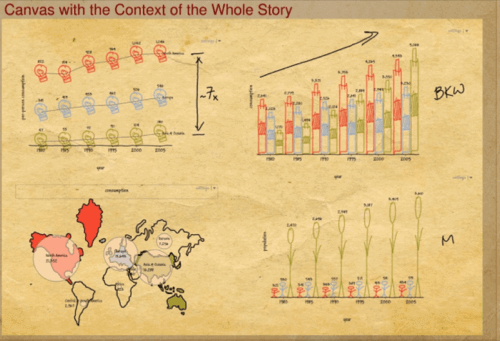

A second video, authored by Microsoft researcher Bongshin Lee, takes the concept of “palette” in a different direction. By drawing an “L” on the display, the SketchInsight technology concept draws a graph; writing the labels for the X and Y axes not only assigns values to both, but also begins filling in the data (from a predetermined source, I assume). In a nifty trick, drawing a battery icon populates the graph with the appropriate data, also using the elongated icon as the element of a bar graph.

Lee’s video isn’t nearly as impressive as Pahud’s demonstration, if only because the source of the data is never really made clear, nor is how the data should be bounded. Creating a pie chart merely by drawing a circle is a nice touch, however, and shows how data can be herded into the appropriate format using the appropriate tool.

Microsoft also presented research papers on:

Adaptive machine learning. As a front-end tool, this is a bit difficult to conceptualize. Microsoft showed off several examples of machine learning, ranging from the relatively trivial — using machine learning to decide the category of a business expense — to the more profound, such as using a manufacturing profile to determine whether a semiconductor wafer was defective or not.

Analyzing viral content. Much as you would expect, Microsoft’s research showed that “viral” content doesn’t originate from a single source, but is spontaneously shared by a number of influencers, whose content ripples across the online sphere. But Microsoft researcher Jake Hofman also developed a tool that would help analyze the “clout” of individuals on the Web, and track the virality of content they share.

The Kinect handgrip. While this may seem relatively trivial, Microsoft views the ability to close one’s hand into a fist — the “handgrip” — as the gestural equivalent of a mouse click, and the company said that it would be supported in future versions of the Kinect SDK.

An appearance at Microsoft’s TechFest doesn’t necessarily mean that these projects will come to market or be built into next-generation Microsoft-branded products. But it’s a good indication of the direction in which the company is headed.

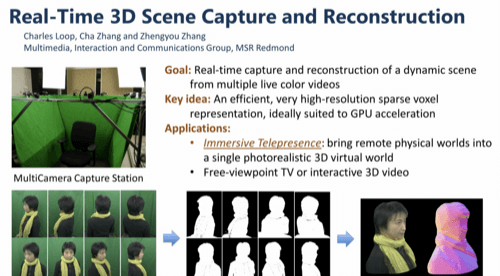

Here are some more images from TechFest:

... icon, and SketchInsight automatically completes the chart by synthesizing data from example sketches. SketchInsight also enables the presenter to interact with the data charts.

Lead image courtesy of Microsoft