An international team of computer scientists has created software that lets anyone perform on-the-fly analysis of live streaming video on the iPhone. Used alongside existing methods of displaying data on top of the camera’s view, this new functionality signals a fundamental change in the kinds of Augmented Reality (AR) that iPhone developers can create. Existing AR apps such as Yelp, Layar and Wikitude display data on top of a camera’s view but don’t actually analyze what the camera sees. This new development changes that.

The iPhone has a private API for analysis of live streaming video, but Apple hasn’t granted developers’ requests to make it accessible. The team that built the new software, which opens up access to that API, made it freely available to anyone this morning.

The Visual Media Lab at Ben Gurion University, in collaboration with HIT Lab NZ, wrote the code in question and unveiled it today, along with video demonstrations, at the AR-specialist blog Games Alfresco. The unveiling comes just days before the International Symposium on Mixed and Augmented Reality in Orlando, Florida.

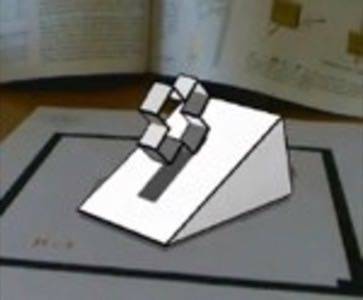

In a demonstration video, the team showed how software built on top of the now-exposed API could look at a 2D image drawn on paper, render that image in 3D and then subject the 3D rendering to a physics simulation.
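As a rough illustration of the three stages described above (analyze a 2D image, lift it into 3D, apply physics), here is a minimal sketch in Python. It is not the team's code and does not touch the iPhone API; the function names `binarize`, `extrude` and `drop_ball`, the tiny hard-coded frame, and the threshold, time step and gravity values are all assumptions made up for illustration.

```python
# Illustrative sketch only: not the team's code and not the iPhone API.
# Every name and constant below is hypothetical.

def binarize(frame, threshold=128):
    """Mark dark pixels (pen strokes) as 1 and light pixels (paper) as 0."""
    return [[1 if px < threshold else 0 for px in row] for row in frame]

def extrude(mask, height=1.0):
    """Turn the 2D stroke mask into a height map: strokes rise off the page."""
    return [[height * cell for cell in row] for row in mask]

def drop_ball(height_map, row, col, start_z=5.0, dt=0.1, gravity=9.8):
    """Drop a point mass onto one cell using simple Euler time steps.

    Returns the resting height and the number of steps taken to land.
    """
    ground = height_map[row][col]
    z, vz, steps = start_z, 0.0, 0
    while z > ground:
        vz -= gravity * dt   # accelerate downward
        z += vz * dt         # move by the updated velocity
        steps += 1
    return ground, steps

# A 3x3 grayscale "camera frame": a dark vertical stroke on white paper.
frame = [
    [255, 20, 255],
    [255, 30, 255],
    [255, 10, 255],
]

mask = binarize(frame)                   # step 1: analyze the image
height_map = extrude(mask, height=2.0)   # step 2: lift the drawing into 3D
on_stroke = drop_ball(height_map, 1, 1)  # step 3: physics on the raised stroke
on_paper = drop_ball(height_map, 1, 0)   # step 3: physics on the flat paper
print(mask)
print(on_stroke, on_paper)
```

The ball dropped over the stroke lands sooner and higher than the one dropped over bare paper, which is the basic effect the demo shows: the drawing itself becomes terrain. A real app would repeat these steps for every camera frame and would need far more robust image analysis than a fixed threshold.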

This is, of course, just one use case. Video AR-enabled software could do almost anything in direct response to the actual images seen through the iPhone’s camera view, in real time. Processing images locally on the phone will be easier and faster than comparing them against a large number of related images, which would likely require some connection to the cloud, but these are early days.

Games Alfresco author Ori Inbar calls this the dawn of an era of “user-generated Augmented Reality.”

For the first time ever, the core code necessary for real augmented reality (“real” here means precise alignment of graphics overlaid on real life objects) on iPhone 3.0 is available to the public.

How will Apple respond? That’s a big question; the company has had an ambivalent relationship with the emerging field of Augmented Reality so far and exercises infamously opaque control over applications distributed through its App Store.

For now, Ori Inbar is distributing the code on behalf of its creators. The email address for requesting it appears at the end of his coverage on Games Alfresco.

The possibilities here are huge. While location-based AR is clumsy at best so far, due to the imprecise nature of GPS and mapping data, this kind of object-centric AR, tied to what the camera actually sees, opens up a whole new range of potential developments. Let’s see what you’ve got, AR devs of the world!