ThinkContacts is a new mobile application under development at Nokia that would allow a disabled person to select a contact from a list and place a phone call to that person using only their mind. The app, which is designed for Nokia’s N900 Maemo platform, works with an accompanying headset that reads the user’s brainwaves to measure attention levels. When the attention level rises above 70%, the software scrolls to the next contact in the list. When it rises above 80%, the software places a call to the selected contact.
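To make the two thresholds concrete, here is a minimal Python sketch of how such a selection loop might behave. It is not Nokia's code: the function names, the wrap-around at the end of the list, and the choice to check the 80% threshold before the 70% one are all our assumptions, and the attention readings are made-up numbers standing in for headset output.

```python
SCROLL_THRESHOLD = 70  # percent; above this, move to the next contact
CALL_THRESHOLD = 80    # percent; above this, call the selected contact


def next_action(attention):
    """Map one attention reading (0-100) to an action (hypothetical helper)."""
    # Assumption: the higher threshold wins, since a reading above 80
    # is also above 70.
    if attention > CALL_THRESHOLD:
        return "call"
    if attention > SCROLL_THRESHOLD:
        return "scroll"
    return "idle"


def select_contact(contacts, readings):
    """Walk through readings and return the contact that gets called, if any."""
    if not contacts:
        return None
    index = 0
    for attention in readings:
        action = next_action(attention)
        if action == "call":
            return contacts[index]
        if action == "scroll":
            # Assumption: the list wraps around after the last contact.
            index = (index + 1) % len(contacts)
    return None


if __name__ == "__main__":
    print(select_contact(["Ann", "Bob", "Cy"], [50, 75, 60, 85]))
```

With these sample readings, the 75% reading scrolls from the first contact to the second, and the 85% reading places the call.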

It’s one of the latest developments in accessible smartphone applications for people with disabilities, including blind and deaf users.

The ThinkContacts app, recently discovered by our friends at The Next Web, could be an incredible tool for motor-disabled persons, allowing them the independence to perform what for most of us is a simple task: making a phone call.

At present, the app is still somewhat basic, but its development can be tracked on Nokia’s site. You can also see the app in action in a YouTube video.

Reading about ThinkContacts got us wondering what other applications are out there for the disabled. While searching for more information on the subject, we came across a news story about a University of Washington class project to build accessible apps for Android and iPhone users.

In Richard Ladner’s “Accessibility Capstone” class at UW, students worked in teams to create new applications that would permit disabled persons to accomplish tasks they wouldn’t otherwise be able to do. The open-source apps the students are creating take advantage of modern smartphone features, like GPS and the camera, to provide new tools to the disabled.

The current projects include the following:

Barcode Reader (Android)

This project, an audio reader built on the zxing project, combines the phone’s camera, computer vision, and focalization (semi-autonomous camera focalization for blind people, i.e., helping the user decide where to point the camera given the environment and goals). The app can then “scan” barcodes and retrieve product information from a remote server, which is spoken aloud over the phone’s speakers. Code Repository

Color Namer (Android)

This is a program that allows the user to determine the color of an object by pointing the phone’s camera at it and tapping the screen. The app tries to select one color from the 11 common color names of English. The students are currently testing different algorithms to choose the color that best describes what the camera sees, including a classification tree and a statistical model. These models will be trained using data collected from the phone’s own camera. Code Repository
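For a rough feel of the problem, the sketch below names a color by picking the closest reference color in RGB space. This is a much simpler stand-in than the classification tree or statistical model the students are testing, and it is not their code. The 11 names are the basic color terms of English; the reference RGB values and the function name are our own assumptions.

```python
# Assumed reference RGB values for the 11 basic English color terms.
REFERENCE_COLORS = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 128, 0),
    "yellow": (255, 255, 0),
    "blue": (0, 0, 255),
    "brown": (139, 69, 19),
    "orange": (255, 165, 0),
    "pink": (255, 192, 203),
    "purple": (128, 0, 128),
    "gray": (128, 128, 128),
}


def squared_distance(a, b):
    """Squared Euclidean distance between two RGB triples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def name_color(rgb):
    """Return the reference color name closest to the sampled pixel."""
    return min(
        REFERENCE_COLORS,
        key=lambda name: squared_distance(rgb, REFERENCE_COLORS[name]),
    )


if __name__ == "__main__":
    # A pixel the camera might report for a red object (made-up value).
    print(name_color((250, 10, 10)))
```

A nearest-match rule like this ignores lighting and camera differences, which is presumably why models trained on data from the phone's own camera are being tested instead.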

Location Orienter (Android)

This project uses text-to-speech software and the Android location services to determine the user’s current location and speak the approximate address. It is currently being expanded to provide other navigational information, such as walking directions, and to present information through a non-audio interface. Code Repository

LocalEyes (Android)

Current GPS devices that are accessible for blind and low-vision users tend to be expensive and highly specialized. LocalEyes uses less expensive, multi-purpose smartphone technology and freely available data to provide an accessible GPS application that will increase a user’s independence and ability to explore new places alone by telling the user what businesses are ahead of and behind them.

ezTasker (Android)

The main goal of this project is to guide people with cognitive disabilities through daily activities using visual and audio aids. It also allows caretakers to monitor the user remotely, and lets users and caretakers add their own personalized tasks using images, audio and video. Code Repository

MobileOCR (Android)

Mobile OCR uses a powerful optical character recognition (OCR) engine to provide low-vision and blind individuals with a way to read printed text. The user is guided throughout by tactile and audio feedback. Text is output using a text-to-speech engine in conjunction with a screen reader, which is controlled by simple gestures. Mobile OCR, in addition to being accessible, is completely open source and provides a great framework to build on. Code Repository

Braille Buddies (Android)

Teaching Braille to blind and low-vision children at a young age increases their chances of earning advanced degrees and finding employment in the future. This project seeks to provide a novel way of delivering Braille instruction to these children. The students built a fun and educational game called BrailleBuddies for blind and low-vision users, integrating Braille reading and writing exercises into the backdrop of the game. The students developed BrailleBuddies to run on mobile devices, taking advantage of the portability and pervasive use of these devices within our society. Code Repository

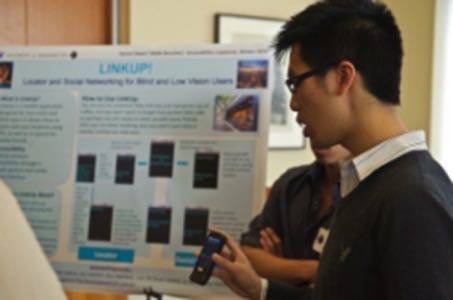

LinkUp! (Android)

Social networking websites and applications have become a staple of modern life for sighted people, yet very little has been done to make them accessible to blind and low-vision users. LinkUp! is an application that allows blind and low-vision users to know where they are, as well as query the locations of friends in the area and store their favorite places. Code Repository

Haptic Braille Perception (Cross-Platform Technology)

The students have developed a way to haptically present Braille characters on a mobile phone touch screen with the phone’s vibrator, called V-Braille. Studies so far have shown that with minimal training, V-Braille can be used to read individual characters and sentences. There is potential for using a standard mobile phone with deaf-blind users who rely solely on tactual perception to receive information. The students are also currently testing this with the Braille games listed above with blind and low-vision children in elementary and middle school. Code Repository
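One plausible way to present a Braille cell through vibration is to split the touch screen into a 2-by-3 grid, one region per dot, and vibrate whenever the finger rests on a region whose dot is raised. The Python sketch below illustrates that idea only; the grid layout, function names and screen size are our assumptions, not details taken from the V-Braille project. The dot patterns for a, b and c follow standard Braille numbering (dots 1 to 3 down the left column, 4 to 6 down the right).

```python
# Raised dots for a few letters in standard six-dot Braille.
BRAILLE_DOTS = {
    "a": {1},
    "b": {1, 2},
    "c": {1, 4},
}


def dot_at(x, y, width, height):
    """Return the Braille dot number (1-6) under a touch at pixel (x, y)."""
    column = 0 if x < width / 2 else 1
    # Clamp so a touch on the bottom edge still falls in the last row.
    row = min(int(3 * y / height), 2)
    return column * 3 + row + 1


def should_vibrate(char, x, y, width, height):
    """True if the touched region corresponds to a raised dot of `char`."""
    return dot_at(x, y, width, height) in BRAILLE_DOTS[char]


if __name__ == "__main__":
    # Hypothetical 300x600 screen showing the letter "c".
    print(should_vibrate("c", 200, 10, 300, 600))   # top-right region
    print(should_vibrate("c", 10, 300, 300, 600))   # middle-left region
```

On a real phone, the result of `should_vibrate` would switch the vibrator on or off as the finger moves across the screen.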

FocalEyes (Cross-Platform Technology)

The students on this project are using computer vision and user input for semi-autonomous camera focalization for blind people (i.e., assisting the user as to where to point the camera given the environment and goals).

Audio Tactile Graphics (Cross-Platform Technology)

Using a digital pen, this project would add audio to images to greatly increase the accessibility of tactile images while reducing the cost and production time.

Interface for One Bus Away (Android)

The students are creating an Android application that provides an interface to the One Bus Away system, usable by blind and deaf-blind users. The app uses GPS to determine the bus stop closest to the user, which is easier for a blind user than reading the stop number off the posted schedule. The application is also being tied to a Braille display. Code Repository
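Finding the closest stop from a GPS fix comes down to a distance comparison. The sketch below uses the haversine great-circle formula over a small list of stops. It is only an illustration: the stop IDs and coordinates are invented, the function names are ours, and nothing here calls the real One Bus Away service.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def closest_stop(user_lat, user_lon, stops):
    """Return the stop dictionary nearest to the user's position."""
    return min(
        stops,
        key=lambda s: haversine_m(user_lat, user_lon, s["lat"], s["lon"]),
    )


if __name__ == "__main__":
    # Made-up stops; a real app would fetch these from a transit data source.
    stops = [
        {"id": "A", "lat": 47.6553, "lon": -122.3035},
        {"id": "B", "lat": 47.6097, "lon": -122.3331},
    ]
    print(closest_stop(47.6560, -122.3040, stops)["id"])
```

The returned stop ID could then be spoken aloud or sent to a Braille display, so the user never has to read it off a sign.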

Mobile Developers, Take Note!

To all the mobile application developers who think that there are no new ideas left for mobile apps, take note. There’s an untapped market of smartphone users – the disabled. And there aren’t as many mobile applications catering to their needs as there should be.

If you’re a developer thinking about getting into this space, be sure to check out Google’s own eyes-free project and YouTube channel, which offer speech-enabled “eyes-free” Android applications. Other resources to take note of include Wikia’s Open Letter Initiative and those listed at the bottom of Ladner’s Capstone description page.

Image credit, top: Komo News