Slack has reportedly been using customer data to power its machine-learning (ML) features, such as search result relevance and ranking. The practice has drawn criticism, in part because confusingly worded policy updates led many users to believe their data was being used to train generative AI models.

Under the company's policy, anyone who wishes to opt out must ask their organization's Slack admin to email the company and request that it stop using their data.

The issue came to light when Corey Quinn, an executive at Duckbill Group, posted on X: “I’m sorry Slack, you’re doing f**king WHAT with user DMs, messages, files, etc?”

![(Tweet from Corey Quinn and Slack's Response):Corey Quinn (@QuinnyPig) tweeted on May 16: "I'm sorry Slack, you're doing [expletive] WHAT with user DMs, messages, files, etc? I'm positive I'm not reading this correctly." Attached is a screenshot of Slack's opt-out instructions for their machine learning models. Slack (@SlackHQ) responded: "Hello from Slack! To clarify, Slack has platform-level machine-learning models for things like channel and emoji recommendations and search results. And yes, customers can exclude their data from helping train those (non-generative) ML models. Customer data belongs to the customer. We do not build or train these models in such a way that they could learn, memorize, or be able to reproduce some part of customer data. Our privacy principles applicable to search, learning, and AI are available here: [link]." Another response from Slack (@SlackHQ) on May 17: "Slack AI – which is our generative AI experience natively built in Slack – is a separately purchased add-on that uses Large Language Models (LLMs) but does not train those LLMs on customer data. Because Slack AI hosts the models on its own infrastructure, your data remains in [link]."](https://readwrite.com/wp-content/uploads/2024/05/Screenshot-2024-05-21-125728.png)

Another extract reads: “To opt out, please have your org, workspace owners or primary owner contact our Customer Experience team at [email protected].”

Slack quickly responded to the post, confirming that it uses customer content to train certain non-generative ML models in the app, such as those behind channel and emoji recommendations and search results. However, it clarified that this data is not used to train Slack AI, its separately purchased generative AI add-on, whose large language models (LLMs) are not trained on customer data.

The cloud-based team communication platform also told TechRadar Pro that the data it uses to power its ML features is anonymized and that it does not access message content.

Meredith Whittaker, president of the end-to-end encrypted messaging app Signal, criticized Slack for using the data. In a May 17, 2024 post on X, she wrote: “Signal will never do this. We don’t collect your data in the first place, so we don’t have anything to ‘mine’ for ‘AI’.”

Slack responds to criticism over use of user data to train models

In response to the community uproar, the Salesforce-owned company published a separate blog post addressing the concerns, stating: “We do not build or train these models in such a way that they could learn, memorize, or be able to reproduce any customer data of any kind.”

Slack also confirmed that user data is not shared with third-party LLM providers for training purposes.

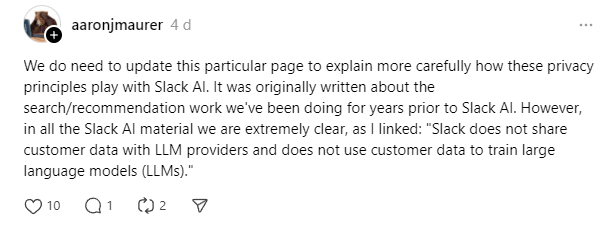

A Slack engineer attempted to clarify the situation on Threads, explaining that the privacy rules were “originally written about the search/recommendation work we’ve been doing for years prior to Slack AI,” and admitting that they do “need an update.”

Featured image: Canva