If the social media shaming of racist teens over the past few weeks has revealed anything conclusive, it’s this: There’s a large amount of hate speech floating around on Twitter.

One reason could be that Twitter doesn’t actually have a hate speech clause in its Terms of Service. Twitter lists the following No-Nos on its platform: Child Pornography, Brand and Trademark Complaints, Breach of Privacy, Copyright Complaints, Harassment and Violent Threats Policy, Impersonation, Name Squatting, and Reposting Others’ Content without Attribution… but nothing on hate speech.

How Do You Report Twitter Offenses?

If you want to report someone on Twitter for committing any of those offenses, you must use a Web form, and you are given only the following options:

- Someone on Twitter is posting my private information.

- Someone on Twitter is stealing my Tweets.

- Someone on Twitter is posting offensive content.

- Someone on Twitter is sending me abusive messages.

- Someone on Twitter is sending me violent threats.

None of these options specifically covers hate speech, although “offensive content” might qualify.

More to the point, just how effective is this Web form anyway?

Not very.

In 2008, a man who was clearly violating Twitter’s Terms of Service by sending harassing messages – including ones threatening bodily harm – to a Buddhist religious leader was not banned by Twitter, even after the victim took the matter to court. “We’ve reviewed the matter and decided it’s not in our best interest to get involved,” Twitter CEO Jack Dorsey said, according to Wired.

Great. So what’s the point of having Terms of Service if the company won’t even enforce them?

Keeping Up With YouTube?

YouTube, meanwhile, relies on its own built-in community to help enforce its Terms of Service and community guidelines, and it does a substantially better job of it. YouTube, in fact, even has a hate speech clause written into its community guidelines, which reads:

“We encourage free speech and defend everyone’s right to express unpopular points of view. But we don’t permit hate speech (speech which attacks or demeans a group based on race or ethnic origin, religion, disability, gender, age, veteran status, and sexual orientation/gender identity).”

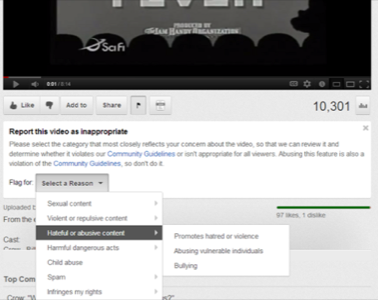

When a user engages in hate speech on YouTube via a video, the video can be flagged by the community. Once a video is flagged, staff at YouTube review it – someone is reviewing flagged videos 24 hours a day, with staff in multiple time zones. If YouTube determines the video does in fact violate community guidelines, the video is then removed, and “serious or repeated violations can lead to account termination.”

Reporting someone on YouTube is very easy: it just requires clicking a button in the shape of a flag and selecting the appropriate option from a drop-down menu.

YouTube’s comment system – long stereotyped as being a cesspool of the Internet – does not offer options related to hate speech, but does allow users to downvote comments or report comments for spam. Once an offensive comment has received enough downvotes, it becomes hidden in the comments section: again, a form of community policing.

If Twitter were to adopt a flagging system like the one YouTube has for videos, it might be able to more effectively communicate to teens that their hate speech is not acceptable on the platform. This type of policy would also, hopefully, dissuade adults from cyber-bullying teens or engaging in vigilante trolling of minors, as the platform would already be reprimanding the teen for their reprehensible behavior.

Photo courtesy of Shutterstock.