There are many reasons to love delicious and hope that it survives its current rocky patch, but as a programmer there’s one thing I’ve found it essential for. I often write applications that need to process and organize thousands or millions of Web pages.

To do that, I need to know something about their meaning: what topics they’re associated with, whether they’re blogs, political, technical or commercial, and what other categories they fall into. One way is to run an API like Zemanta or OpenCalais on the pages’ text, and hope to use the significant terms it finds to pick categories. This is an extremely intensive process on large collections, and even the best semantic analysis is nowhere near as good as a human summary. What if you could get millions of people to categorize the pages for you, for free?

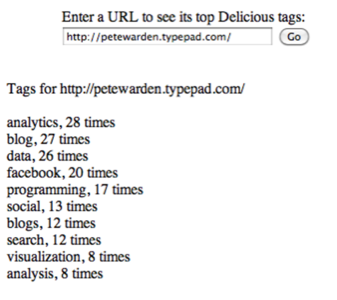

That’s exactly what delicious’s urlinfo API gives you. It returns the top 10 tags for any URL, together with a count of how many times each tag has been used.
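Here’s a minimal Python sketch of turning a response into a ranked list of tags. It assumes the JSON comes back as an array whose first element holds a `top_tags` object mapping each tag to its usage count, and treats an empty array as “nobody has tagged this URL”. The exact layout is an assumption, and the sample string below is made-up illustrative data, not real API output.

```python
import json

def parse_top_tags(response_text):
    """Return (tag, count) pairs, most-used tag first.

    Assumes the response is a JSON array whose first element has a
    "top_tags" object mapping tag names to usage counts. An empty
    array means the URL has no tags.
    """
    data = json.loads(response_text)
    if not data:
        return []
    top_tags = data[0].get("top_tags", {})
    # Sort by descending count, breaking ties alphabetically so the order is stable.
    return sorted(top_tags.items(), key=lambda item: (-item[1], item[0]))

# Illustrative, hand-written response (not real delicious data).
sample = '[{"url": "http://example.com/", "top_tags": {"python": 12, "programming": 30, "tutorial": 12}}]'
print(parse_top_tags(sample))  # [('programming', 30), ('python', 12), ('tutorial', 12)]
```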

You can then use these tags to enrich your own application with a spooky level of intelligence about websites. For example, you could restrict searches to particular categories or industries, in a similar way to Blekko’s slashtags, or organize referrer analytics by what kind of site the links are coming from. For most sites the top 10 tags are both very informative and highly accurate, so you can get some very effective results.
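As a rough illustration of the referrer-analytics idea, here’s a sketch that scores a site against a few categories by summing the counts of its tags that match each category’s keywords. The `CATEGORIES` table and the `categorize` helper are hypothetical, invented for this example; a real application would need its own, much larger keyword lists.

```python
# Hypothetical category definitions: each category is a set of tags that signal it.
CATEGORIES = {
    "technical": {"programming", "python", "javascript", "development", "software"},
    "political": {"politics", "election", "government", "policy"},
    "blog": {"blog", "blogs", "weblog"},
}

def categorize(tag_counts, categories=CATEGORIES, min_score=1):
    """Return matching category names, strongest first.

    A category's score is the total usage count of the site's tags that
    appear in that category's keyword set; categories scoring below
    min_score are dropped.
    """
    scores = {}
    for name, keywords in categories.items():
        score = sum(count for tag, count in tag_counts.items() if tag.lower() in keywords)
        if score >= min_score:
            scores[name] = score
    # Highest score first, ties broken alphabetically.
    return sorted(scores, key=lambda name: (-scores[name], name))

tags = {"programming": 30, "python": 12, "blog": 5, "tutorial": 12}
print(categorize(tags))  # ['technical', 'blog']
```

Weighting by tag counts rather than just checking for a tag’s presence means a stray, rarely used tag won’t outvote the tags most people agree on.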

Using the API is simplicity itself. You don’t even need a key, and it supports JSONP callbacks, so you can access it even from completely browser-based applications. To demonstrate how to use it, I’ve put up some PHP sample code on github, but the short version is that you call http://feeds.delicious.com/v2/json/urlinfo/data?hash= with the MD5 hash of the URL appended, and you get back a JSON string containing the tags. If you want to see how accurate it is, here’s a live version of the code you can play with.
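For anyone not working in PHP, here’s a rough Python equivalent of that request, using only the standard library. It hashes the URL string exactly as given, so it assumes the URL you pass is the same form people bookmarked (a trailing slash or `www.` changes the hash). The helper names are made up for this sketch, and `fetch_urlinfo` assumes the endpoint still answers as described above.

```python
import hashlib
import json
import urllib.request

# Endpoint from the description above; the MD5 hex digest of the page URL is appended.
URLINFO_ENDPOINT = "http://feeds.delicious.com/v2/json/urlinfo/data?hash="

def urlinfo_request_url(page_url):
    """Build the urlinfo request URL for a page (hypothetical helper)."""
    digest = hashlib.md5(page_url.encode("utf-8")).hexdigest()
    return URLINFO_ENDPOINT + digest

def fetch_urlinfo(page_url, timeout=10):
    """Fetch and decode the JSON response; no API key is needed (hypothetical helper)."""
    with urllib.request.urlopen(urlinfo_request_url(page_url), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))

# Building the request URL doesn't touch the network.
print(urlinfo_request_url("http://example.com/"))
```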