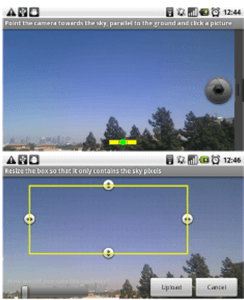

Computer scientists at the University of Southern California's School of Engineering have launched an Android app called Visibility that crowd-sources the task of analyzing and measuring air pollution. Android users simply point the phone's camera at the sky and snap a photo. The app then uses the device's GPS and compass to determine where and in which direction the photo was taken, and compares the visibility levels in the image against established models of sky luminance. The result is a crowd-sourced measure of air quality.
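The article doesn't say which sky-luminance model Visibility relies on, so what follows is only a minimal sketch of the general idea, under stated assumptions. It uses the Perez sky-luminance formula with the turbidity-dependent coefficients published by Preetham, Shirley and Smits (1999). Turbidity is a single number describing haze, with higher values meaning hazier air. Because a phone camera reports uncalibrated brightness, the sketch compares luminance ratios between sky directions rather than absolute values, then grid-searches for the turbidity whose modeled ratios best match the measured ones. The sun's zenith and azimuth are taken as inputs (an app would compute them from GPS position and time), and each sample's viewing angles stand in for what the compass and orientation sensors would report. All function names are hypothetical.

```python
import math

def preetham_luminance_coeffs(turbidity):
    """Perez coefficients A..E for luminance as linear functions of turbidity (Preetham et al. 1999)."""
    t = turbidity
    return (0.1787 * t - 1.4630,
            -0.3554 * t + 0.4275,
            -0.0227 * t + 5.3251,
            0.1206 * t - 2.5771,
            -0.0670 * t + 0.3703)

def angle_to_sun(view_zenith, view_azimuth, sun_zenith, sun_azimuth):
    """Angular distance (radians) between a viewing direction and the sun."""
    cos_gamma = (math.cos(view_zenith) * math.cos(sun_zenith)
                 + math.sin(view_zenith) * math.sin(sun_zenith)
                 * math.cos(view_azimuth - sun_azimuth))
    return math.acos(max(-1.0, min(1.0, cos_gamma)))  # clamp floating-point drift

def perez(theta, gamma, coeffs):
    """Perez sky-luminance distribution; theta must stay above the horizon (< pi/2)."""
    a, b, c, d, e = coeffs
    return ((1 + a * math.exp(b / math.cos(theta)))
            * (1 + c * math.exp(d * gamma) + e * math.cos(gamma) ** 2))

def relative_luminance(samples, sun, turbidity):
    """Modeled luminance of each (zenith, azimuth) sample, divided by the first sample's."""
    coeffs = preetham_luminance_coeffs(turbidity)
    values = [perez(z, angle_to_sun(z, az, *sun), coeffs) for z, az in samples]
    return [v / values[0] for v in values]

def fit_turbidity(samples, measured_ratios, sun, t_min=2.0, t_max=10.0, steps=81):
    """Grid-search the turbidity whose modeled ratios best match the measured ones."""
    best_t, best_err = t_min, math.inf
    for i in range(steps):
        t = t_min + (t_max - t_min) * i / (steps - 1)
        model = relative_luminance(samples, sun, t)
        err = sum((m - r) ** 2 for m, r in zip(model, measured_ratios))
        if err < best_err:
            best_t, best_err = t, err
    return best_t

if __name__ == "__main__":
    sun = (math.radians(40), math.radians(180))  # (zenith, azimuth), assumed known
    # Hypothetical sky samples as (zenith, azimuth) pairs, e.g. from orientation sensors + compass.
    samples = [(math.radians(z), math.radians(az))
               for z, az in [(60, 0), (45, 90), (70, 200), (30, 270), (80, 150)]]
    # Synthetic "measurements" generated at turbidity 3 stand in for pixel brightness ratios.
    measured = relative_luminance(samples, sun, 3.0)
    print("estimated turbidity:", fit_turbidity(samples, measured, sun))
```

A grid search keeps the sketch dependency-free and easy to follow; a real implementation would more likely use calibrated pixel values and a proper least-squares solver.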

According to the scientists, Sameera Poduri, Anupam Tulsyan and Anoop Nimkar, the natural visual range would be 140 miles in the western United States and 90 miles in the eastern U.S. Today, however, visibility has fallen to 35-90 miles in the West and 15-25 miles in the East. Both natural sources (e.g., sea-salt entrainment and wind-blown dust) and man-made sources (exhaust, mining activities, etc.) are to blame.
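To put these figures in perspective, Koschmieder's classic relation treats visual range as inversely proportional to the atmosphere's light-extinction coefficient: V = ln(1/ε)/b_ext, where ε is the contrast threshold at which a dark object vanishes against the horizon (conventionally 0.02, giving V ≈ 3.912/b_ext). The article doesn't mention this relation; it is added here as an assumption for illustration. The short sketch below converts the quoted natural and worst-case present-day ranges into extinction coefficients; the function and constant names are hypothetical.

```python
import math

KM_PER_MILE = 1.609344
CONTRAST_THRESHOLD = 0.02  # conventional Koschmieder threshold (2% contrast)

def extinction_per_km(visual_range_miles: float) -> float:
    """Extinction coefficient b_ext (1/km) implied by a visual range, via V = ln(1/eps) / b_ext."""
    visual_range_km = visual_range_miles * KM_PER_MILE
    return math.log(1 / CONTRAST_THRESHOLD) / visual_range_km

# Natural vs. worst-case present-day visual ranges quoted in the article (miles).
REGIONS = {"West": (140, 35), "East": (90, 15)}

for region, (natural, today) in REGIONS.items():
    b_natural = extinction_per_km(natural)
    b_today = extinction_per_km(today)
    print(f"{region}: {b_natural:.4f}/km -> {b_today:.4f}/km "
          f"({b_today / b_natural:.1f}x more extinction)")
```

Because the relation is an inverse proportion, a western range that has shrunk from 140 to 35 miles corresponds to four times as much light extinction, and an eastern range of 15 miles instead of 90 to six times as much.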

Monitoring air pollution is important for public health, but air-monitoring stations are sparsely deployed. Visibility is also often measured by human observers, which introduces subjectivity into the analysis. And in developing countries, there is typically little or no monitoring at all.

For these reasons, the scientists set out to build a sensing system that uses off-the-shelf sensors and can be deployed to large numbers of people. They chose the HTC G1, running Google's Android mobile operating system, because it provides access to the device's sensors, can determine its 3D orientation, and is open and easily programmable.

For now, the app works only on sunny days, not when it's cloudy, but that appears to be its only drawback so far.

Democratizing Science

More broadly, this and other apps that tap into the low-cost sensors and components of today's smartphones help democratize access to, and participation in, scientific discovery. NASA, for example, recently experimented with Android phones to determine whether smartphone hardware could hold up in orbit without shaking apart, whether phones could work in a vacuum, and whether they could handle the extreme temperatures of space, both hot and cold.

University of Washington students also used Android to build a slew of mobile apps for the disabled, again thanks to the operating system’s open platform.

But there needs to be more of this type of experimentation, we think. Developers today have access to tools, hardware and sensors for next to nothing, but too many out there seem content with creating dumb, one-off joke applications instead of something truly innovative and unique. Apps are now capable of being eyes and ears for the disabled, satellites, networked sensors, glucose monitors, doctors and so much more. And those built on Android and other open platforms don’t have to go through any sort of vetting process to reach a large audience, as they do on Apple’s platform. Shouldn’t there be more tools like this out there? What are some of the most amazing, innovative apps you’ve seen?