Think of energy hogs in IT, and you’ll probably think of data centers. With their sprawling poured-concrete buildings, massive backup generators and evil-looking Segways, you just know they’re sucking down more power than a small country.

A new study from Australia’s Centre for Energy-Efficient Telecommunications (CEET) highlights another, rather unexpected villain for the latest episode of Captain Planet and the Planeteers: Dr. Wireless and his dastardly 4G minions. Wireless networking, it seems, is the biggest power-waster in the use of cloud computing.

This runs counter to what many observers have been saying. Environmental activist group Greenpeace International has criticized cloud computing’s energy use, but it specifically singled out data centers as the main problem. If the CEET report is correct, then Greenpeace was off the mark.

It’s easy to target the data centers. They’re the low-hanging fruit, because they are centralized and they do pull in a lot of local power. Wireless networks, on the other hand, consume very little power locally, and they are diffused across the entire world.

And the number of networks is growing fast.

“Wireless, local and mobile, is fast becoming the standard access mode for cloud services. Global mobile data traffic overall is currently increasing at 78% per annum and mobile cloud traffic specifically is increasing at 95% per annum,” according to the new CEET whitepaper. That growth is all driven by rapid adoption of cloud services tailor-made for these mobile devices.

Use of Wi-Fi and 4G LTE networks to access the cloud is exploding, and that’s a problem. According to CEET, a joint effort by Bell Labs and the University of Melbourne, wireless networks are very energy inefficient. It takes a lot of power to deliver good connectivity, it seems. Not to mention the sheer redundancy of so many networks. Even in a small city, I can stand in many places and detect several public and private Wi-Fi networks in range of my device, all blanketed by 4G coverage from multiple phone carriers.

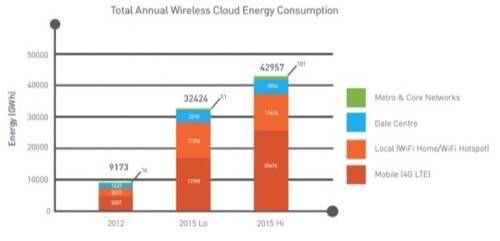

All of these little sips of power can add up to one big gulp. In 2012, total energy consumption of cloud services accessed by wireless networks was around 9.2 terawatt-hours (TWh). In 2015, the report estimates, that consumption will be anywhere from 32 to 43 TWh. Of that cloud computing power consumption, data centers account for only about 9% of total use; 90% of the power is used by wireless networking technology. (The actual power use of the devices themselves is negligible, the report indicates.)
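To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above; the function names are made up for illustration, and the assumption of smooth compound growth between 2012 and 2015 is mine, not the report's.

```python
# Figures as quoted in this article from the CEET report.
ENERGY_2012_TWH = 9.2
ENERGY_2015_LOW_TWH = 32.0
ENERGY_2015_HIGH_TWH = 43.0
WIRELESS_SHARE = 0.90      # share attributed to wireless networking
DATA_CENTER_SHARE = 0.09   # share attributed to data centers


def implied_annual_growth(start, end, years):
    """Compound annual growth rate that takes `start` to `end` in `years`.

    Assumes smooth compounding, which is a simplification.
    """
    return (end / start) ** (1.0 / years) - 1.0


def split_by_share(total_twh):
    """Split a total (in TWh) into the wireless and data center portions."""
    return {
        "wireless": total_twh * WIRELESS_SHARE,
        "data_centers": total_twh * DATA_CENTER_SHARE,
    }


if __name__ == "__main__":
    for label, total in (("low", ENERGY_2015_LOW_TWH), ("high", ENERGY_2015_HIGH_TWH)):
        growth = implied_annual_growth(ENERGY_2012_TWH, total, years=3)
        parts = split_by_share(total)
        print(
            f"2015 {label} estimate: {total:.0f} TWh, "
            f"implied growth {growth:.1%} per year, "
            f"wireless {parts['wireless']:.1f} TWh, "
            f"data centers {parts['data_centers']:.1f} TWh"
        )
```

Under that assumption, the jump from 9.2 TWh to the 32 to 43 TWh range works out to growth of very roughly 50% to 70% a year, with wireless networking accounting for about 29 to 39 TWh of the 2015 total.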

In the grand scheme of things, wireless-networking power consumption may not seem like a lot: it’s only 0.03% of the total power use of the planet (based on 2008 figures). But it’s enough to generate 30 megatons of CO2 by the year 2015, which is equal to the amount of emissions from 4.9 million new cars.

There are ways to mitigate this excessive energy use. Adopting newer equipment would help increase power efficiency, as would greater use of wired connectivity, the report suggests.

Emerging technologies like software-defined networks could also come into play, using active, automated monitoring of network traffic to add more hardware resources when needed and leave them idle or even off when not required.
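The idea of matching powered-on hardware to measured traffic can be sketched as a simple sizing rule. This is a toy illustration only, not how any real software-defined networking controller works; the function name, the 20% headroom and the per-unit capacity are all hypothetical values chosen for the example.

```python
import math


def units_needed(load_mbps, unit_capacity_mbps, headroom=0.2, min_units=1):
    """Return how many hardware units should be powered on for a given load.

    Hypothetical policy: provision for the measured load plus a safety
    headroom, and always keep at least `min_units` running so the network
    stays reachable. Everything above that count could idle or power off.
    """
    required = load_mbps * (1.0 + headroom) / unit_capacity_mbps
    return max(min_units, math.ceil(required))


if __name__ == "__main__":
    # Made-up traffic samples (Mbps) over a day, each unit handling 100 Mbps.
    for load in (0, 50, 240, 400):
        print(f"load {load:>3} Mbps -> {units_needed(load, 100)} unit(s) on")
```

In a quiet period the rule keeps a single unit running; as traffic climbs it brings more online, which is the power-saving behavior described above in miniature.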

Data centers have been the visible target for power wasting for quite some time, and while there’s no harm in trying to increase energy efficiency in these perceived supervillains, the real energy crooks may be the cell tower up the road or the Wi-Fi router sitting on your desk.

Image courtesy of Shutterstock.