This article is part of ReadWrite Future Tech, an annual series where we explore how technologies that will shape our lives in the years and decades to come are grounded in the innovation and research of today.

When you see the world through your own eyes, your brain is able to almost instantly recognize and perceive what things are. Your brain is essentially a complex pattern-matching machine that delivers context and understanding. You see a person, recognize their height, facial features, clothing and voice and your brain says, “Hey, that’s Bob and I know him.”

A computer’s “brain” can’t do this. It can see the same objects in life and may eventually be able to tell you what they are, but it does this by matching a digital representation of the objects it “sees” to similar representations stored in databases, using pre-defined instructions built into its code.

For instance, when Facebook or Google use facial recognition software to determine who the people are in your pictures, the computer runs the images through a database that determines who the person is. It hasn’t learned anything, and if it needed to match the same face in a different photo, it would need to run through its database again.

But what if you could create a computer chip that simulated the neural networking in the human brain? A computer chip that could act, learn and evolve the way a brain does?

Technology companies have tried for decades to make chips that work like the human brain, and those efforts have typically fallen well short of the mark. Now Qualcomm and rivals such as IBM and Intel say a new class of brain-inspired computers is closer to reality, and to actual products, than ever before.

Neural Network Effects

Computer scientists have pursued a self-learning computer for a long time. The concept of a neural network and strong artificial intelligence (not necessarily a single chip) was championed by the likes of the U.S. Defense Advanced Research Projects Agency (DARPA) from the 1980s before it pulled funding from its AI projects in 1991.

Federico Faggin, one of the fathers of the microprocessor, dreamed for decades of a processor that behaves like a neural network. At his company Synaptics, though, attempts to build chips that could “learn” from their inputs the way a brain does never yielded neural processors. Instead, the work led to advances in touch sensing and the creation of laptop touchpads and touchscreens.

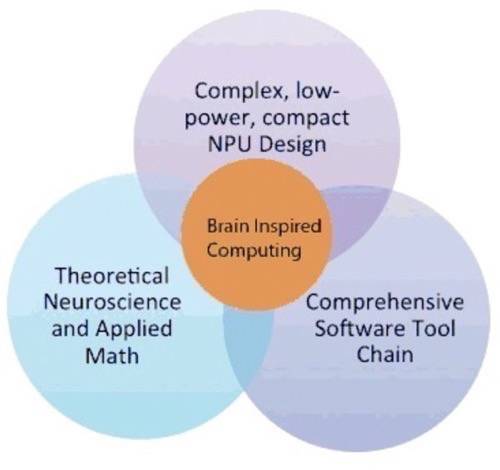

Artificial neural networks turned out to be much more complicated to make than people like Faggin had hoped. Chipmakers like Qualcomm, IBM and Intel are now combining what they know about building microprocessors with what they know about the human brain to create a whole new class of computer chip: the neural-inspired processing unit.

Basic and advanced research on these neuron-inspired computer brains is still under way. Qualcomm hopes to ship what it calls a “neural processing unit” by next year; IBM and Intel are on parallel paths. Companies like Brain Corp., a startup backed by Qualcomm, have focused on research and implementation of brain-inspired computing, adding algorithms that aim to mimic the brain’s learning processes to improve computer vision and robotic motor control.

Redefining What Computers Do

Today’s computer chips provide relatively straightforward, and rigidly defined, functions for processing information, storing data, collecting sensor readouts and so forth. Basically, computers do what humans tell them to do, and do it faster every year.

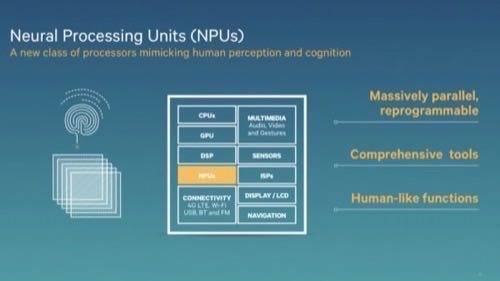

Qualcomm’s “neural processing unit,” or NPU, is designed to work differently from a classic CPU. It’s still a classic computer chip, built on silicon and patterned with transistors, but it has the ability to perform qualitative (as opposed to quantitative) functions.

Here’s how Technology Review described the difference between Qualcomm’s approach and traditional processors:

Today’s computer systems are built with separate units for storing information and processing it sequentially, a design known as the Von Neumann architecture. By contrast, brainlike architectures process information in a distributed, parallel way, modeled after how the neurons and synapses work in a brain….

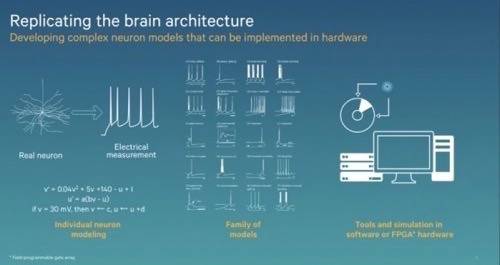

Qualcomm has already developed new software tools that simulate activity in the brain. These networks, which model the way individual neurons convey information through precisely timed spikes, allow developers to write and compile biologically inspired programs. Qualcomm … envisions NPUs that are massively parallel, reprogrammable, and capable of cognitive tasks like classification and prediction.

Matt Grob, the vice president and chief technology officer of Qualcomm Technologies, explained the difference between a CPU and an NPU in an interview with ReadWrite during the EmTech MIT Technology Review conference earlier this month.

But all these processors are derived from the original [computer processor] hardware architecture. It’s an architecture where you have data registers and processing units, you grab things from memory and you process it and store the results, and you do that over and over as fast as you can. We’ve added multiple cores and wider channel widths, and cache memory, all these techniques to make the processors faster and faster and faster. But the result is not at all like biology, like a real brain.

We’re talking about new classes of processors. Using new techniques at Qualcomm, that are biologically inspired … So when you try to solve certain problems like image recognition, motor control, or different forms of learning behavior, the conventional approach is problematic. It takes an enormous amount of power or time to really do a task, or maybe you can’t do a task at all.

But what we did do is start an early partnership with Brain Corporation, and we have a good relationship with the founder Eugene Izhikevich, and he created a very good model for the neuron, a mathematical model. The model is two properties, one is that it’s very biologically plausible.… But it’s also a numerically compact model that you can implement efficiently. So Eugene influenced this space, didn’t create it, but influenced it, and that in concert with the amount of source that we can put down, together, created some excitement about the machines we could build.
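The neuron model Grob refers to is usually written as two coupled equations plus a reset rule, which is what makes it “numerically compact.” The following is a minimal Python sketch of that published model, assuming the commonly cited “regular spiking” parameter values and a plain Euler time step. The function name `simulate_izhikevich`, the step size and the input currents are illustrative assumptions, and nothing here is confirmed as what Qualcomm or Brain Corporation actually run.

```python
def simulate_izhikevich(current, steps=1000, dt=0.5,
                        a=0.02, b=0.2, c=-65.0, d=8.0):
    """Simulate one Izhikevich neuron and return its spike times in ms.

    v is the membrane potential (mV); u is a slower recovery variable.
    a, b, c, d are the model's four parameters (regular-spiking values
    assumed here). `current` is a constant input current (assumption).
    """
    v = c          # start at the reset potential
    u = b * v      # recovery variable starts in balance with v
    spike_times = []
    for step in range(steps):
        if v >= 30.0:                      # spike threshold reached
            spike_times.append(step * dt)  # record when the spike was seen
            v = c                          # reset the membrane potential
            u += d                         # bump the recovery variable
        # Euler update of the two model equations
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
    return spike_times


if __name__ == "__main__":
    quiet = simulate_izhikevich(current=0.0)
    driven = simulate_izhikevich(current=10.0)
    print(len(quiet), "spikes without input,", len(driven), "with input")
```

The whole neuron is a handful of multiplications and additions per time step, which suggests why such a model lends itself to massively parallel hardware.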

Qualcomm calls its theoretical processors “Zeroth.” The name takes its cue from the “Zeroth Law of Robotics” coined by science fiction writer Isaac Asimov as a precursor to his famous “Three Laws of Robotics.” The Zeroth Law states: “A robot may not harm humanity, or, by inaction, allow humanity to come to harm.” Asimov actually introduced the Zeroth Law well after the initial Three Laws to correct flaws in how robots interpreted the original paradigm.

Grob gives the example of a simple robot with an NPU and a leg with a hinge joint. The robot was given a very simple desire: lying down was bad, standing up was good. The robot started wiggling and eventually learned how to stand. If you pushed it back down, it would stand right back up.

“And when you watch it, it doesn’t move like a robot, it moves like an animal. The thing that popped into my mind, it was like how a giraffe tries to stand up after it’s born,” Grob said. “They’re kinda shaky. They want to learn how to stand up, they have that desire, it’s programmed in, but they don’t know how to do it just yet. But they know any movement gets them closer to their goal, so they very quickly learn how to do it. The way they shake and fumble, it looks just like that.”
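The idea of giving a machine a “desire” and letting it wiggle toward the goal can be illustrated with a toy trial-and-error loop. This is a deliberately simple hill-climbing sketch in Python, not Qualcomm’s method: the single hinge angle, the `height` reward and the function `learn_to_stand` are all hypothetical stand-ins for the robot Grob describes.

```python
import math
import random


def height(angle):
    """Reward signal: 0.0 when lying flat, 1.0 when fully upright."""
    return math.sin(angle)


def learn_to_stand(trials=500, wiggle=0.2, seed=0):
    """Randomly wiggle a hinge joint, keeping only moves that raise the body."""
    rng = random.Random(seed)   # seeded so the run is repeatable
    angle = 0.0                 # start lying down
    for _ in range(trials):
        # try a small random movement, limited to the joint's range
        candidate = angle + rng.uniform(-wiggle, wiggle)
        candidate = min(max(candidate, 0.0), math.pi / 2)
        # "standing is good": keep the move only if it increases the reward
        if height(candidate) > height(angle):
            angle = candidate
    return angle


if __name__ == "__main__":
    final_angle = learn_to_stand()
    print("final height:", round(height(final_angle), 3))
```

Nothing in the loop says how to stand; it only says which outcomes are better, which is the sense in which the behavior is learned rather than programmed.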

An NPU based on mathematical modeling and human biology would, theoretically, be able to learn the way a human could. Qualcomm hopes that NPUs could end up in all kinds of devices from smartphones to servers in cloud architecture within the next several years.

Hacking The Learning Computer

Most smartphones and tablets these days are controlled by integrated chips that combine processors, specialized graphics units and communication systems such as LTE cellular radios or Wi-Fi. Qualcomm envisions a near-term future where the NPU either slides directly onto these “systems on a chip” or operates as a stand-alone chip within a machine (like a robot).

IBM’s work on cognitive computing, called TrueNorth, attempts to rethink how computing is done and pattern it after the brain. TrueNorth is not a chip like Qualcomm’s Zeroth but rather a whole new paradigm that could change how computer vision, motion detection and pattern matching work. IBM is able to program TrueNorth with what it calls “corelets,” each of which teaches the computer a different function.

Intel’s “neuromorphic” chip architecture is based on “spin devices.” Essentially, “spintronics” takes advantage of the spin of an electron to perform a variety of computing functions. In a research paper published in 2012, Intel researchers describe neuromorphic hardware based on spin devices as capable of “analog-data-sensing, data-conversion, cognitive-computing, associative memory, programmable-logic and analog and digital signal processing.”

How does Qualcomm’s work differ from that of IBM and Intel? Qualcomm would like to go into production soon and get its NPUs onto integrated chips as early as next year. In a statement to ReadWrite, Qualcomm said, “Our NPU is targeted at embedded applications and will leverage the entire Qualcomm ecosystem. We can integrate our NPU tightly with conventional processors to offer a comprehensive solution in the low power, embedded space.”

When Qualcomm uses the phrase “embedded applications,” it’s referring to use inside actual devices, where the NPU can control certain aspects of the user interface and experience. That might mean smartphones that can learn through computer vision and audio dictation, for instance, employing code that gives the NPU a desire to perform an act, like learning your voice and habits.

An NPU in a smartphone could perform a whole host of functions. Grob gave us the example of your phone ringing from an unknown number at 9 p.m. on a Sunday night, perhaps while you are watching your favorite HBO special. This is not a desirable time to be speaking with a telemarketer, so you say, “bad phone, bad.” The phone learns that an interruption at that time of day and week is bad and automatically transfers those types of calls to voicemail.
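To make the “bad phone” scenario concrete, here is a toy Python sketch of learning from feedback. It is a plain lookup table of scores, not a neural network and not Qualcomm’s design; the class `CallFilter`, its methods and the choice of context (weekday, hour, whether the caller is known) are all hypothetical.

```python
class CallFilter:
    """Learns from spoken feedback which kinds of calls should ring."""

    def __init__(self):
        # score per context; a missing context counts as neutral (0.0)
        self.scores = {}

    @staticmethod
    def _context(weekday, hour, known_caller):
        # the situation the phone is in when a call arrives (assumed features)
        return (weekday, hour, known_caller)

    def feedback(self, weekday, hour, known_caller, reward):
        """Record praise (positive reward) or a scolding (negative reward)."""
        key = self._context(weekday, hour, known_caller)
        self.scores[key] = self.scores.get(key, 0.0) + reward

    def should_ring(self, weekday, hour, known_caller):
        """Ring unless this context has a net negative score."""
        key = self._context(weekday, hour, known_caller)
        return self.scores.get(key, 0.0) >= 0.0


if __name__ == "__main__":
    phone = CallFilter()
    # unknown number, Sunday at 9 p.m.: the user says "bad phone, bad"
    phone.feedback("Sunday", 21, known_caller=False, reward=-1.0)
    print(phone.should_ring("Sunday", 21, known_caller=False))  # goes to voicemail
    print(phone.should_ring("Sunday", 21, known_caller=True))   # still rings
```

A real system would need to generalize across similar times and callers rather than memorize exact contexts, which is where a learning chip is meant to help.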

Or maybe you take a picture of Bob with your smartphone camera. You tell your phone (just like you would teach a child) that the image it is seeing is Bob. In the future, the camera would automatically know that it is Bob and tag the photograph with his name. Instead of having the device perform pattern matching in the cloud to determine this, the computation is done locally at relatively low computing cost.

Qualcomm wants to be the center of the developer world if and when neural processors become commonplace. Qualcomm’s value proposition is to make hacking an NPU as easy as building an app, via the company’s own tools.

The NPU may ultimately be a pipe dream for computer scientists. Qualcomm, IBM and Intel have all taken steps toward biologically inspired computer systems that learn on their own, but whether they ever actually become commonplace in our smartphones, cloud computing servers or robots is not a certainty. At the same time, recent research and development of these kinds of chips is far more advanced than scientists like Faggin could have dreamt 40 years ago.