Two years ago, the U.S. Department of Homeland Security firmly decided (again) that a policy of responding to vulnerabilities in the nation’s cybersecurity only as they occur is insufficient. The National Institute of Standards and Technology set about designing a 21st-century perpetual vulnerability mitigation scheme: a continuous monitoring (CM) framework that models security procedures not in terms of crisis and response, but as a perpetual cycle of monitoring and engagement that stays basically the same whether or not there’s a crisis.

In other words, if you “keep doing this all the time,” then whatever happens won’t destroy the network. Late last week, NIST produced its first series of drafts describing how government information services could look, perhaps later this decade. The model is so radically different from anything seen thus far that NIST acknowledges no one in the commercial sector has even come up with the language to describe it.

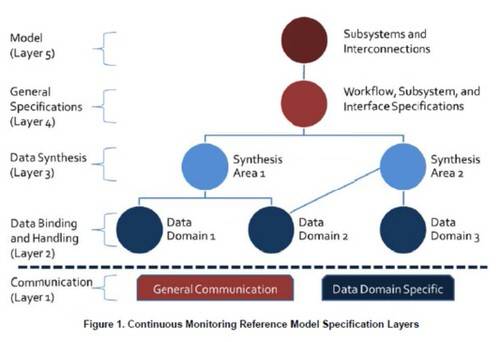

The January draft of NIST’s interface specifications (available as a PDF) shows five layers of what are variously described as subsystems. Think of these functional components as composed of devices, software, and people. Acknowledging that not every CM process can or should be automated, NIST’s architects have created these five classes of subsystem to represent the divisions of workflow for both the people and the technology that work with any data domain. In other words, regardless of what data you’re working with as a government IT worker, you and your programs will fall someplace within this model.

So do software vendors start digesting this system now and try to build products based on it? Right now, NIST acknowledges that might not be possible.

“Each subsystem specification provides product development requirements applicable to specific product types. It is not expected, or desired, that any specific product adopt all of the subsystem specifications. Some of the subsystem specifications describe requirements that already exist within many Information Technology (IT) products. Thus, incorporation of these specifications should require only gentle instrumentation for those existing products. In other cases, the subsystems represent new functionality and product types (e.g., multi-product sensor orchestration and tasking and policy content repositories) that do not currently exist on the market. If vendors choose to adopt these specifications, they will likely need to develop new products. To catalyze vendor involvement we are looking into providing functioning prototypes of these capabilities.”

In a situation that will remind some folks of The Hitchhiker’s Guide to the Galaxy, NIST comes clean in saying that in order to understand how this solution may eventually work, everyone needs to learn along the way just how the problem works. One element absent from the NIST drafts so far, for instance, is remediation. To date, NIST has worked out a structural framework for a query system that triggers workflow between the elements of the subsystems shown in the diagram. But the query language itself has not been invented yet.

So are we years away from a working implementation? Perhaps not very many. The CM concept was devised only in the past few years, and one of the documents that led to the forging of these latest drafts was produced only last September. At that time, the CM concept was being referred to by its broader name, Information Security Continuous Monitoring (ISCM).

“The output of a strategically designed and well-managed organization-wide ISCM program can be used to maintain a system’s authorization to operate and keep required system information and data… up to date on an ongoing basis,” the September document explains. “Security management and reporting tools may provide functionality to automate updates to key evidence needed for ongoing authorization decisions. ISCM also facilitates risk-based decision making regarding the ongoing authorization to operate information systems and security authorization for common controls by providing evolving threat activity or vulnerability information on demand. A security control assessment and risk determination process, otherwise static between authorizations, is thus transformed into a dynamic process that supports timely risk response actions and cost-effective, ongoing authorizations. Continuous monitoring of threats, vulnerabilities, and security control effectiveness provides situational awareness for risk-based support of ongoing authorization decisions. An appropriately designed ISCM strategy and program supports ongoing authorization of type authorizations, as well as single, joint, and leveraged authorizations.”

The hope is that once security vulnerabilities are identified by researchers, in either the public or the private sector, the standardization of their reporting will enable them to be entered into the system like marbles in a pachinko machine. The system will essentially digest them, integrating their lessons into everyday processes. It is a completely different way to think about work and workflow, but desperate times demand it.