As the recovery from Hurricane Sandy moves into its next phases, companies and organizations that depend on the Internet are beginning to ask the hard questions about the effects of the superstorm. Outfits directly affected are wondering how to keep from getting hammered again, while others around the world are looking to make sure they’re not vulnerable to these kinds of disasters.

The obvious answer is a cloud-based deployment for websites and services that takes full advantage of the cloud’s elasticity and redundancy. But that’s been true for a while. So what’s the holdup?

The Problem Is Clear

The images and stories that came out of Lower Manhattan threw the problem into sharp relief. To paraphrase New York City Mayor Michael Bloomberg: locating so many Internet service providers in one geographic area put a lot of Internet resources at serious risk. It was a gamble that didn’t pay off for many data center operators and their online customers, thanks to flooding that knocked out power and, in some cases, the backup generators and fuel supply pumps as well.

By Saturday, things were getting back to normal, as larger Internet providers like Equinix and Internap were operating either on utility power or stable generator power. But this wasn’t a uniform recovery – Datagram, which hosts prominent websites like Huffington Post, Gawker and BuzzFeed, lost the basement generators at its 33 Whitehall Street facility and did not receive a new backup generator until 2pm Friday.

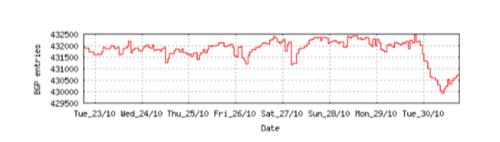

The full impact of the storm is still being measured as recovery operations continue. But to give an idea of how many sites dropped off the Internet after Sandy made landfall, take a look at the chart of IP addresses from the Asia Pacific Network Information Centre. Immediately after Sandy’s landfall a week ago, the number of announced systems dropped by 400, which cut off more than 2,000 separate sites on the Internet using unique IP addresses.

The efforts to keep or restore those data centers’ connectivity to the Internet are tales of can-do, sleeve-rolling American hard work and ingenuity, and the people behind them should be applauded for making the best of a bad situation.

At the same time, as the tech community gawks at pictures of bucket brigades hauling fuel and of sea-water-flooded basements, it’s obvious that this is not the best solution. The time for excuses justifying these kinds of heroic, yet ultimately unnecessary, last-minute saves is over.

When The Train Comes, Move

The Herculean efforts of the data centers whacked by the storm bring to mind another mythical character, King Sisyphus, the rather wicked soul condemned to forever roll a boulder up a hill, only to have it roll back down just before it reaches the top. And while the affected ISPs, websites and their customers may not be wicked, they are still facing a Sisyphean situation if they go back to the way things were.

The fact is, if this storm didn’t wake companies up to the importance of using off-site public clouds as at least a backup for their online presence, one has to wonder what it would take to jog IT staffs into action. That’s true for companies in Lower Manhattan and everywhere else. Your company may be located in an area prone to hurricanes, earthquakes or tornadoes, or you could be victimized by human error, a malicious hack or even a physical attack. The plain truth is that no company is truly safe from the possibility of disaster, so you have to be prepared to get the hell out of the way when trouble comes, and to have a way to get back on your feet if you do get knocked down.

The key to doing that quickly and efficiently is to remember that your website is on a network – it does not have to be tied down to one vulnerable server in one vulnerable building.

Cloud Costs Coming Down

It’s one thing to say “just get your site on the cloud” and quite another to make that happen. Sites have to be architected so that they can be migrated with reasonable effort. Regulatory compliance may also be a big factor for certain industries. Plus there’s the cost of replicating data across multiple locations.

But that cost is not as much of an issue as it used to be, according to Robert Offley, CEO of managed service provider CentriLogic. Five years ago, the cost of replicating a site was “six to eight times higher than now,” Offley explained. Today, site replication is a far more cost-effective option.

Simple site replication is just one way to take advantage of the cloud. There’s a full spectrum of cloud-based solutions, from backing up your site’s database in an off-site facility to a full-on multisite cloud architecture that lets a website shift smoothly from server to server in response to regional network and data center outages. There’s a whole host of options out there that aren’t that expensive, at least not when compared to the loss of traffic and revenue that will hit your business when things do go wrong.

What Are You Waiting For?

Amazingly, it’s still a lesson that needs to be taught. As the events after Sandy show, there are a whole lot of businesses, including tech-savvy organizations that should really know better, that haven’t taken the time and effort to put active backups in place, much less to move everything into the cloud.

If your business is a storefront, you can’t just pick up and move when disaster strikes. But if your business is on the Internet, you most certainly can move your bits when the time comes, or, better yet, right now.

Not taking advantage of the very thing that makes cloud so valuable, the capability to keep your site up and running no matter what happens in any single location, is a waste of the available technology and very short-sighted… to the point of stupidity.

Objections will be raised to this argument, of course, chiefly that clouds aren’t perfect solutions either. First off, cloud facilities can be whacked like any other data center, as Amazon and its customers found out more than once this year. Nor do clouds offer duplication and high-availability services out of the box, as it were. Customers, namely Web admins and developers, have to build their sites to take full advantage of the cloud; otherwise it’s just another server sitting in a building somewhere, waiting to get knocked offline.

But when Web teams plan for success in the cloud, elastic services offer a better 21st-century way to avoid or respond to disasters. Certainly a lot better than waiting for a bucket brigade.

Lead image courtesy of Shutterstock.