When mobile users dislike how an app performs the first time they try it, some 75% of them won’t launch it again. That’s the metric cited by engineers and marketers at HP Software, who note that the first wave of mobile apps brought forth by the iPhone has resulted in a glut of programs that turn even the best-performing mobile hardware into a pocketful of silicon cement.

This morning, HP begins repurposing, for the mobile apps era, the website performance testing tools it gained through its 2006 acquisition of Mercury Interactive. It’s unveiling what it calls “LoadRunner-in-the-Cloud,” complete with hyphens. It will act as an off-premises testing platform for mobile apps that are deployed as services, simulating the activity generated by thousands of simultaneous users to gauge the resilience of servers and resources. This way, you might not have to disappoint three-fourths of your users to learn how well your service holds up.

Better Scalability Through Experimentation

“The application architecture itself is the performance bottleneck nine out of ten times,” says Matt Morgan, HP Software’s global senior director of solutions marketing, in an interview with RWW. “By monitoring these services and knowing how long a transaction spends waiting for a data retrieval or a logic process, or some other storage function to occur, you can pinpoint the modules inside the service that have the most potential to slow an application under load. You can find out which services are not scaling.”
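To make Morgan's point concrete, here is a minimal sketch of that kind of breakdown: given timing samples that record how long each transaction waited on each part of a service, total the wait per component and pick out the worst offender. The component names, the sample figures, and both function names are invented for illustration; none of this is LoadRunner's actual API or output format.

```python
from collections import defaultdict

# Hypothetical timing samples: (transaction, component, seconds spent waiting).
# Component names and numbers are invented for illustration only.
SAMPLES = [
    ("checkout", "data_retrieval", 0.42),
    ("checkout", "logic", 0.08),
    ("checkout", "storage", 0.15),
    ("search", "data_retrieval", 0.61),
    ("search", "logic", 0.05),
]


def time_by_component(samples):
    """Sum the wait time attributed to each component across all transactions."""
    totals = defaultdict(float)
    for _transaction, component, seconds in samples:
        totals[component] += seconds
    return dict(totals)


def slowest_component(samples):
    """Return the component that accounts for the most total wait time."""
    totals = time_by_component(samples)
    return max(totals, key=totals.get)


if __name__ == "__main__":
    print(slowest_component(SAMPLES))
```

In this toy data set the data retrieval step dominates, which is the sort of module a tester would want flagged before adding more load.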

When applications are deployed on PaaS platforms such as Heroku and Windows Azure, Morgan says, a great deal of the complexity of how the software interfaces with the hardware is abstracted into obscurity. The architectural concept that I dubbed composite applications in 1991 has expanded to a seemingly unmanageable number of tiers. With the shift to mobile architecture, much of the burden of providing performance has shifted off the front-end client and onto the server. And in between those two tiers are any number of platforms. “So this idea that the software is a composition, gets even more complicated,” he remarks.

“We correlate the front-end story to the back-end problem. And if you think about just the complexities of performance monitoring, if you don’t do correlation, you can end up with an enormous collection of logs and metrics that don’t actually mean anything to the tester,” he continues. “The tester really cares about, how many users can the system support, and what will these users experience when they do concurrently hit their system?”

Mobile apps typically break at some point, and, Morgan notes, they don’t bend very much before they do. Maybe an app performs well with 300 simultaneous users, and then fails completely at 325. So LoadRunner-in-the-Cloud (hereafter LRC) finds the breaking point, wherever it happens to lie. Once that’s done, it relies upon feedback provided by a vast network of back-end monitors, probing such factors as SQL queries, server metrics, and diagnoses of the method calls being invoked inside the service. “By correlating how much time it takes for a transaction to hit these things, you can actually attain a pretty clear picture, and start to show that the areas of your application that are causing problems have a distinct performance impact.”
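The hunt for a breaking point can be pictured as a simple ramp: add simulated users in fixed steps until a run fails, then report the last load that held and the first that didn't. The sketch below assumes a hypothetical `run_load` callback that returns `True` when the service survives a given number of users; `toy_service` is a stand-in that mirrors the 300-versus-325 example above, not a real load generator, and nothing here reflects how LRC is actually implemented.

```python
def find_breaking_point(run_load, start, step, limit):
    """Ramp users in fixed steps.

    Returns (last_ok, first_failed). first_failed is None if the service
    never failed up to `limit`; last_ok is None if the very first run failed.
    """
    last_ok = None
    users = start
    while users <= limit:
        if not run_load(users):
            return last_ok, users
        last_ok = users
        users += step
    return last_ok, None


def toy_service(users):
    # Stand-in for a real load run: copes with fewer than 325 concurrent
    # users and fails outright at 325 or more (figures from the example).
    return users < 325


if __name__ == "__main__":
    print(find_breaking_point(toy_service, start=25, step=25, limit=500))
```

A smaller step narrows the gap between the two numbers at the cost of more test runs.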

TruClient, the protocol HP uses for emulating user activity in AJAX Web applications, has been extended for LoadRunner-in-the-Cloud.

Identifying Your App by How and Where It Breaks

The result is a kind of “stress footprint” that characterizes the resilience of your mobile app. The space in which this footprint appears is the scenario, which is LRC’s term for a repeatable test. Each test helps establish a firm baseline, which is then replicated identically for different problem sets, meaning different numbers of users. This way you’re testing how the same code scales up at each increment: LRC adds test users in fixed increments, not on a logarithmic scale. “You’re trying to determine, if the exact same actions take place on the server, do things improve with the change?” says the HP senior director. “Scenarios allow you to digitize that load, creating a one-click re-execution capability, which is very important in an iterative world. You run your load test, you identify your problem, you make your change, and you go back to your scenario and run the exact same test.”

Overlaying the results gives you your best measure of performance, which in a cloud-based scenario can indeed improve as users are incrementally added.

Results from previous load tests, including those run against earlier builds of your apps, are recorded as snapshots. New test results can be overlaid atop these earlier ones to determine which code changes made the biggest impact. “We give you the capability to leverage that information inside of an operational monitoring solution, but if you wanted to monitor a Web or mobile app going forward for functionality, and you want to have visibility to the way it should run, you can use the metrics from LoadRunner to compare against what it’s actually doing in production. That gives you the snapshot of the lab world, where everything works, to the production world where everything’s real.”
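As a rough illustration of what overlaying two snapshots amounts to, the sketch below compares response times from the same scenario run against two builds, one user count at a time. The snapshot layout (a mapping from user count to average response time in seconds), the figures, and the function names are all assumptions made for this example; the real snapshot format is not described here.

```python
# Hypothetical snapshots from the same scenario run against two builds:
# {simultaneous users: average response time in seconds}. Invented numbers.
BUILD_A = {100: 0.8, 200: 1.4, 300: 3.9}
BUILD_B = {100: 0.8, 200: 1.1, 300: 2.0}


def overlay(baseline, candidate):
    """Return the response-time change at each load level found in both snapshots.

    Negative values mean the candidate build responded faster.
    """
    shared_levels = sorted(baseline.keys() & candidate.keys())
    return {users: candidate[users] - baseline[users] for users in shared_levels}


def biggest_improvement(baseline, candidate):
    """Return the load level where the candidate gained the most over the baseline."""
    deltas = overlay(baseline, candidate)
    return min(deltas, key=deltas.get)


if __name__ == "__main__":
    for users, delta in overlay(BUILD_A, BUILD_B).items():
        print(f"{users} users: {delta:+.2f}s")
    print("largest gain at", biggest_improvement(BUILD_A, BUILD_B), "users")
```

Because the scenario is identical across runs, any difference between the two curves can be attributed to the code change rather than to a change in the test itself.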

LoadRunner-in-the-Cloud is being offered now to HP partners in the U.S. and Canada, and will be rolled out through OEM partners on their own timetables. Pricing will be determined by the party making the sale.

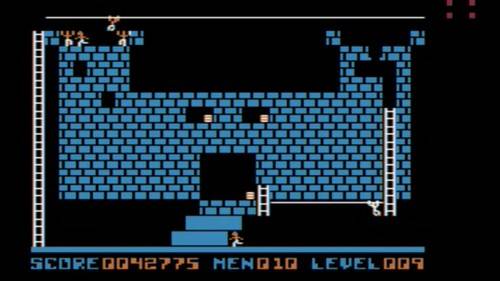

No, no, not that Lode Runner! Somebody get our graphics department off the Apple II and replace this!