If you are trying to implement a content caching solution on your enterprise network, you know that serving up dynamic content is a cat-and-mouse game. The major Web content delivery sites want to deliver fresh content, and you want to try to cache that content, so that subsequent views of it don’t consume additional network bandwidth on your Internet links.

But what you probably didn’t know is how often these sites change their delivery mechanisms, making it hard for any caching to be effective.

In the olden days of the static Web, we didn’t have to worry about this. Once a static page was cached, that was the end of the story: we could deliver the page from our cache and we were done. But as our network users request more dynamic content, caching becomes a more complex and harder problem to solve.

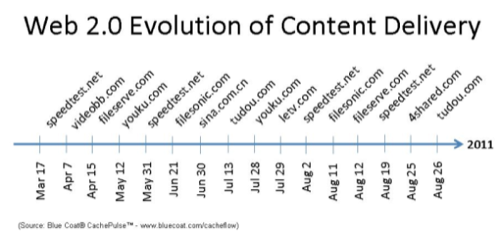

In research that Blue Coat Systems released last week, we can see how often particular websites have changed their delivery algorithms. Blue Coat sells the CacheFlow Web caching appliance, and the product includes a cloud-based update service called CachePulse that tries to stay on top of what the major content delivery sites are doing. The company also just announced a new version of the appliance that offers better performance and supports larger networks.

As you can see from this timeline, some websites update their algorithms regularly, like speedtest.net and fileserve.com. This means that caching methods that worked previously with these sites have to be adjusted to handle the new configurations, if you are going to get any wide-area bandwidth savings.
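To make the adjustment problem concrete, here is a minimal sketch of one common caching technique: building a stable cache key by ignoring query parameters that change on every request. This is not Blue Coat's method, which the research doesn't describe. The host name, the parameter names, and the `VOLATILE_PARAMS` table and `cache_key` function are all hypothetical, made up for illustration.

```python
from urllib.parse import urlsplit, parse_qsl, urlencode

# Hypothetical per-site rules: query parameters that vary per request
# but (we assume) do not change the bytes being served.
VOLATILE_PARAMS = {
    "video.example.com": {"token", "expires", "session"},
}


def cache_key(url: str) -> str:
    """Return a cache key that ignores a site's volatile query parameters."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    ignored = VOLATILE_PARAMS.get(host, set())

    # Keep only the parameters that identify the content, in sorted order,
    # so that parameter ordering does not create duplicate cache entries.
    kept = sorted(
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in ignored
    )
    query = urlencode(kept)
    return f"{host}{parts.path}?{query}" if query else f"{host}{parts.path}"


first = cache_key("http://video.example.com/clip.mp4?id=42&token=abc&expires=1")
second = cache_key("http://video.example.com/clip.mp4?expires=2&token=xyz&id=42")
print(first == second)  # both requests map to the same cached object
```

When a site renames a parameter or moves its token into the URL path, a rule table like this stops matching and the cache hit rate for that site drops until someone updates the rule.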

Blue Coat looked at more than 11 petabytes of content that it cached in August and found that a third was video, a third came from file sharing and software update sites, and 2.5% was related to social networking. Facebook and Netflix were the largest sources of video content. The company also found that its appliances could save almost half of its customers’ WAN bandwidth, and that most websites are still quite cacheable.

So the moral of the story is clear: if you are going to cache your content, know what you are doing and make sure you use a vendor that will update its algorithms often to keep up with the changes from the content delivery site operators.

NOTE: I have done some custom consulting work for Blue Coat over the past several years.