“Everything we call real is made up of things that cannot be regarded as real” – Niels Bohr

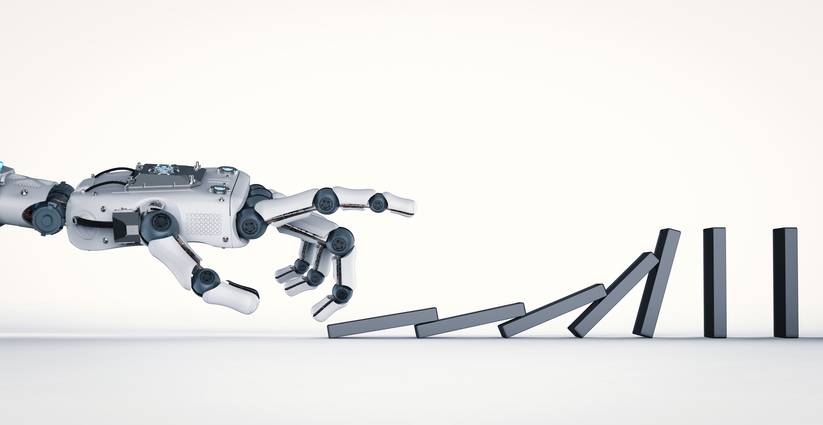

For many, the “virtual world” of AI and the “real world” in which we live seem perpetually at odds. Because AI has been largely academic or theoretical for most of the past 60 years, it’s easy to see why many question whether it can be truly useful in our “real world.” In reality, though, those two seemingly different worlds are not actually worlds apart: AI’s virtual world has real-world applications.

Let’s challenge this premise of separate worlds with an experiment. Close your eyes and picture your favorite person. Imagine that person speaking your name and touching your face. Your mind lets you see that person, hear that person and feel that person, all in your head. Now close your eyes and imagine adding 131 and 17.

Notice how your mind visually presents those numbers to you and lets you think through the problem in your own voice. None of this is occurring in the physical world. The non-physical world where our mental activities occur is similar to the virtual world of AI.

The moment we open our eyes after this exercise, it’s easy to fall back on the traditional Newtonian view that physical, material reality is the foundation of our universe. This is because our five keen senses (sight, smell, touch, hearing and taste) do such a good job of connecting our conscious, subconscious and unconscious mind with the physical world that the non-physical and the physical world appear to be one.

Some argue that, without any senses, our minds would be incapable of connecting to the physical world at all, let alone of merging the two. The same argument is used to explain AI’s inability to connect in a meaningful way to our physical world. That said, just like human consciousness, AI can rely on senses similar to ours to connect its thought processes to our physical world.

IoT makes AI come to life

The Internet of Things (IoT) provides ready access to sensors that give AI more meaningful sensory access to our physical world, enabling it to “come to life.” Camera sensors give AI “eyes” to see the world. Microphones give it a “good ear.” Accelerometers and gyroscopes give it a “good feel for things.” Particulate and chemical sensors give it “a good nose.” In many ways, these sensors can give AI “superhuman” capabilities, such as:

- Access to many more sensory inputs than we have as people, in many different places, simultaneously.

- Infrared “eyes” so that AI can “see” heat variances, as well as standard object detection, counting, and classification.

- Ultrasonic “hearing” that enables access to frequency ranges beyond the human spectrum.

- Accelerometers that capture haptic movements in much finer detail than our own fingertips.

AI supplies the perception and meaning behind these IoT-driven sensory inputs. In effect, the IoT sensor measures and reports a physical property, and AI is the intellect that perceives what that physical data represents. This is what allows sensors to evolve into senses.

These factors, along with the broad availability of low-cost, distributed compute capabilities (the cloud), the open source software movement, advances in machine learning and mobile-driven advances in microelectronics (ARM), provide many of the dots that, once connected, finally make AI a reality.

After so many years of being academic in nature, it’s about time for the AI Revolution.

The author is founder and CEO of LitBit. He recently joined us at an AI and IoT thought leadership panel, and we’ll be sharing more insights like these at our upcoming IoT Revolution Symposium, Tuesday, July 11, in San Francisco.