The clash between the wine websites CellarTracker and Snooth raises some interesting questions about data ownership. Snooth was accused of copying information from CellarTracker’s user reviews by crawling the site with an automated robot script. While most commenters were outraged, it’s not clear that there’s any legal case against Snooth, even if it had crawled the data. As it turns out, the problem came from an outdated input feed rather than its crawler, but the case highlights the problems that will keep arising as data flows and mixes on the Web.

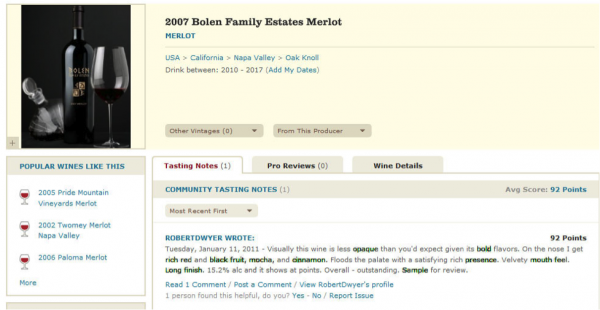

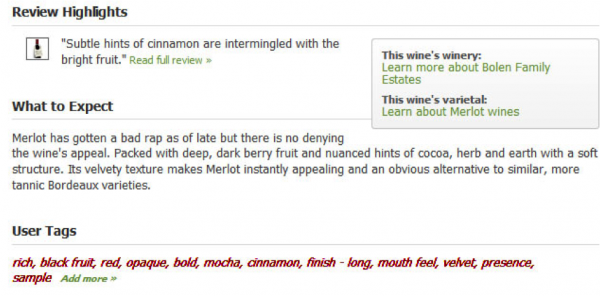

The controversy erupted when an independent wine blog at vintank noticed a spooky correlation between the user tags that appeared on the Snooth site and those present in reviews for the same wine on CellarTracker. For example, these screenshots show the same tags appearing on the two sites, even unusual ones like “mocha” or “presence”:

How could a competing site possibly get access to this information? CellarTracker’s robots.txt file (which lays out its policies for automated access to the site) is pretty open, and no log-in is required to see the reviews.

This means that Google and other search engines can analyze those pages and send traffic to the site. They may do some fairly sophisticated processing to understand what each page is about and store the results in a database, but since CellarTracker benefits from the extra traffic, it’s unlikely to complain about its data being copied. In fact, there’s a fair body of case law suggesting that by leaving your robots.txt open, you’re giving permission for copies to be made and shown to users.

That system works well as long as search engines are the only ones doing the scraping. What’s changed over the last few years is that you can now crawl millions of Web pages for just a few dollars, and many startups have sprung up to make this sort of operation easy even if you don’t have the expertise in-house. These small-scale operations often don’t drive traffic back to the source of the information, though, so the implicit bargain that publishers have with search engines doesn’t hold.

One thing site owners can do (and that CellarTracker did for Snooth’s crawler) is prohibit individual user agents in the robots.txt. The trouble with this blacklisting is that you can only block them after you find out about them, and that may be after they’ve already grabbed the data. A better alternative from the publisher’s point of view is to whitelist only the search engines you actually care about, as Facebook did in response to my own crawling. This is a bad thing for the open Web though, since new startups are denied access to the sites, and the dominance of the existing search engine players is cemented.
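To make the trade-off concrete, here is a minimal sketch of the two policies written as robots.txt files and checked with Python’s standard `urllib.robotparser` module. The crawler names `ExampleScraper` and `BrandNewStartupBot` and the example.com URL are hypothetical, and neither file is CellarTracker’s or Facebook’s actual robots.txt. Keep in mind that robots.txt is a convention that well-behaved crawlers honor voluntarily, and that individual crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Blacklist approach (hypothetical): everyone may crawl except one named
# crawler that the publisher has already discovered.
BLACKLIST_ROBOTS = """\
User-agent: ExampleScraper
Disallow: /

User-agent: *
Disallow:
"""

# Whitelist approach (hypothetical): only the named search engine crawlers
# may crawl; every other user agent is shut out of the whole site.
WHITELIST_ROBOTS = """\
User-agent: Googlebot
Disallow:

User-agent: Bingbot
Disallow:

User-agent: *
Disallow: /
"""

URL = "https://www.example.com/wine/12345"  # hypothetical review page


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("Googlebot", "ExampleScraper", "BrandNewStartupBot"):
        print(agent,
              "blacklist:", allowed(BLACKLIST_ROBOTS, agent, URL),
              "whitelist:", allowed(WHITELIST_ROBOTS, agent, URL))
```

Running it shows the asymmetry described above: the known scraper is blocked under both policies, but an unknown newcomer like `BrandNewStartupBot` gets through the blacklist and is shut out by the whitelist, while the established search engine is allowed either way.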

What’s clear from this case is that the rules of the Web have departed from people’s intuitive sense of right and wrong. As Snooth’s CEO points out, the user tags at the center of the controversy were “not reviews or information that is, or could be, copyright-protected.”

The copyright law around data takes some highly paid lawyers to explain fully, but the gist is that plain information without any creative contribution from the author is tough to protect. Snooth’s CEO also highlights the problem of handling information from over 50 million Web pages, plus many other data feeds. Once data enters a system like that, there’s rarely any mechanism to keep track of its source or to apply special restrictions on its use.

Put all these issues together, and you have a world of proliferating scrapers, pouring unprotected data into systems that mix and match the streams promiscuously, producing an end result that may compete against the sources themselves. It’s a place where Snooth could copy those tags, with only user outrage to hold them back. What do you think the rules should be?

Photo by ciccioetneo