Ten years ago Unix claimed five of the top 10 fastest computers on the planet and 44% of the overall supercomputer market. Today? Unix, the once indomitable performance powerhouse, doesn’t make the top-10 list of the world’s fastest computers. Heck, it can’t even crack the top 50. Not since Linux took over, that is.

Buried in these sobering statistics on the rise of Linux and the fall of Unix is a reminder to proprietary infrastructure software vendors that hope to compete with open source: you can’t win. Not when the community gets involved.

Community: The Hidden Performance Booster

This was not always obvious. Back in 1999 Sun Microsystems CEO Scott McNealy, whose company made expensive but high-performance Unix servers, went out of his way to belittle Linux performance, arguing:

Linux is like Windows: it’s too fat for the client, for the appliance … it’s not scalable for the server … Now why in the world would anybody ever write another cheque to Microsoft? I don’t know. But why would you do Linux either? That’s the wrong answer.

McNealy could be forgiven for his myopia. After all, in 1999 Linux couldn’t crack the top-10 of the venerable Top 500 list and, truth be told, Sun’s Solaris did offer far better performance than Linux.

What neither Sun nor its Solaris could match was the Linux community.

Sun could take pride in its exceptional history of engineering innovation. But no matter how stellar any one company may be, it is only one company. No single entity can hire the breadth and depth of engineering talent necessary to sustain an industry-standard infrastructure platform today.

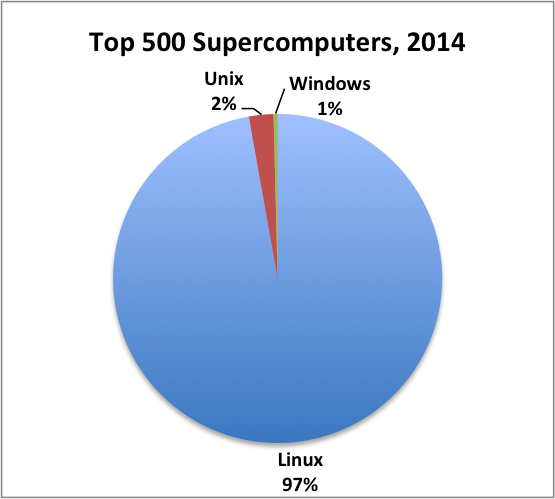

And today Linux absolutely dominates the list of the world’s top-500 fastest computers. A staggering 97% of the world’s fastest supercomputers are powered by Linux, with just 2% powered by Unix (and none by Sun’s Solaris). McNealy, running benchmarks against Linux, could never have prophesied such a dramatic reversal of his company’s fortunes.

After all, performance benchmarks are an arbitrary way to measure performance, under conditions that rarely, if ever, occur in the real world. In the world we actually live in, community benchmarks are far more influential.

Benchmarking Community

Mark Callaghan, Facebook’s MySQL database guru, recently griped about how misleading benchmarks (or, as he calls the practice, “benchmarketing”) can be in the context of database performance. But in so doing, he also called out the need for context when evaluating different systems:

Benchmarketing is a common activity for many [database] products whether they are closed or open source …The goal for benchmarketing is to show that A is better than B. Either by accident or on purpose good benchmarketing results focus on the message A is better than B rather than A is better than B in this context. Note that the context can be critical and includes the hardware, workload, whether both systems were properly configured and some attempt to explain why one system was faster.

For an open-source operating system like Linux, it’s impossible to evaluate the code without the accompanying context of its community. For McNealy in 1999, all he could see was the “speeds and feeds” benchmarking that favored Solaris over Linux. But by looking at the trees he missed the forest: the rapidly growing Linux community.

A vibrant community offers an open-source project a number of advantages:

- Community makes it cool to adopt an open-source project

- Community makes it safe to adopt an open-source project

- Community creates a larger total addressable market

- Community makes it hard for rivals to compete

On the second point, safety is found in numbers. Not only does a thriving community reduce the risk that relying on a given body of code will end in tears, but it also ensures that there is a growing wealth of online materials (e.g., best practices, Stack Overflow questions, GitHub) and a deep talent pool to make adoption easier. This, in turn, leads to a growing ecosystem of third-party add-ons, which generate network effects for that project. It is a self-perpetuating cycle that a single company finds far harder to master or maintain than a dedicated, distributed community does.

Performance, it turns out, is a relatively easy problem to solve once a project has enough community momentum. For Linux, performance went into overdrive once massive commercial interests joined the party, including Red Hat, IBM, HP, Oracle and, ironically, Sun. Each contributor had its own reason for contributing to Linux; each worked to improve Linux in different ways. Over time, this translated into not only better overall functionality but also better performance.

The Big Community Bets Of 2014

This is why I remain optimistic about open-source projects like Hadoop and OpenStack, among others. Each has a ways to go, performance- and feature-wise, just as Linux did 10 years ago. But because each commands serious community interest, performance and functionality bottlenecks will dissipate over time.

That said, sometimes one can have too much of a good thing. OpenStack is one such example. The cloud community has been tripping all over itself on the way to market, which calls out the need for more leadership within the OpenStack community. Assuming OpenStack can find that leadership, it already has all of the other elements of a vibrant community to keep it going.