Not surprisingly, IBM execs believe they will soon be selling servers and supercomputers that process data much faster than today’s technology allows. But they’re talking about much, much faster.

Rather than use electricity to move data between processors, IBM is preparing to use light, giving high-end customers enough capacity to keep pace with the growing volume of information flowing through data centers.

IBM’s Tiny Milestone

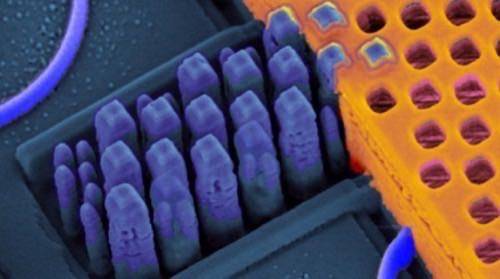

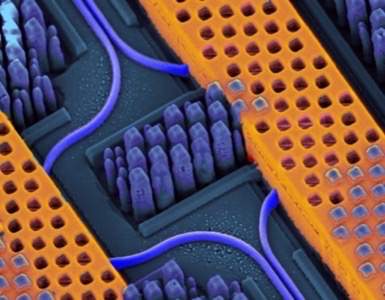

After more than a decade of research, IBM scientists have put nanophotonics technology onto chips built with a 90-nanometer silicon process and integrated it with ordinary microprocessors.

That last part is critical. If IBM can integrate the new optical technology with ordinary processors, it avoids a layer of manufacturing expense and complexity, and it can make the new systems in conventional factories.

IBM is attacking a bottleneck in data centers and supercomputers. Moving data as electrical impulses, even over the comparatively short distances between chips, chokes off performance.

Today, the usual workaround is to add more processors, which raises costs.

Practical Nanophotonics

Only a proof of concept two years ago, IBM's nanophotonics system is a transceiver that receives electrical impulses from one chip, converts them into rapid pulses of light, and transmits those to another transceiver sitting alongside another processor. The receiving transceiver reverses the process.

Even with the conversions, the system delivers much higher chip-to-chip data rates, 25 gigabits per second per channel, and it can also be used for chip-to-memory communications, another common bottleneck.

Just as with the far bigger fiber-optic systems used for global telecommunications, each beam of light can carry multiple data streams, each on a different wavelength. While this is not a feature of the current design, it would make it possible to send terabytes around otherwise conventional electronics at almost literally blinding speed.
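The arithmetic behind that multiplication is simple. The Python sketch below assumes each wavelength carries the full 25 gigabits per second quoted for a single channel. The wavelength counts are hypothetical illustrations, not figures IBM has announced, and the function names are invented for this example.

```python
# Hypothetical illustration of wavelength-division multiplexing arithmetic:
# several wavelengths share one optical link, each carrying its own stream.
# Assumption: every wavelength runs at the 25 Gb/s per-channel rate cited above.
PER_CHANNEL_GBPS = 25


def aggregate_gbps(wavelengths: int, per_channel_gbps: float = PER_CHANNEL_GBPS) -> float:
    """Total link rate in gigabits per second, assuming all wavelengths run at full rate."""
    if wavelengths < 1:
        raise ValueError("need at least one wavelength")
    return wavelengths * per_channel_gbps


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


if __name__ == "__main__":
    # Hypothetical wavelength counts, chosen only to show how the total scales.
    for n in (1, 4, 16, 40):
        total = aggregate_gbps(n)
        print(f"{n:>2} wavelengths: {total:g} Gb/s = {gbps_to_gbytes_per_s(total):g} GB/s")
```

Under these assumptions, 40 wavelengths would carry 1,000 gigabits (1 terabit) per second, or 125 gigabytes per second, over a single link.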

“The technology is very universal, very flexible and versatile,” Solomon Assefa, a nanophotonics scientist for IBM Research, said.

Short, Sharp Signals In Data Centers

IBM is preparing to put nanophotonics in servers, data centers and supercomputers, Assefa said. The technology is also expected to play an important role in exa-scale computing, a benchmark expected to be reached before the end of the decade. Today's supercomputers are measured in petaflops; one petaflop equals 1 quadrillion (10^15) floating-point calculations per second. An exaflop is 1,000 times faster.

An exa-scale system will need millions of transceivers, he said.

Martin Reynolds, an analyst for Gartner, said IBM’s technology appeared flexible, but noted that it’s destined to remain in high-end systems for a while.

“The device has to have optical power [i.e. light] fed to it, as there is currently no practical way to generate light on the silicon,” Reynolds said. “This demand requires sophisticated and expensive packaging techniques that, for now, constrain these devices to high-end systems.”

So it will be some time before nanophotonics makes its way into laptops or tablets. In the meantime, IBM hopes to beat competitors by getting it into data centers and supercomputers first.