If you look at the evolution of how we capture the world around us, we keep getting closer to what the world actually looks like. We started replicating nature through painting and sculpting, with caves and stones as our canvas. Photography was invented about 200 years ago. It took another 40 years before color photography arrived, and cinematography followed a few years after that. But we live in a 3D world, and the next big phase of capture is volumetric.

The evolution of capture. pic.twitter.com/59g2tWJZxT

— alban denoyel (@albn) August 22, 2016

3D capture has been possible for a while thanks to techniques like photogrammetry, the process of stitching together 2D images of a subject from different angles. Photogrammetry is great, but it requires advanced software, skills, and complex workflows.

The next phase of 3D capture is real-time, meaning there is no stitching involved, and you can capture a volume “on the fly”. This evolution is quietly happening right now. There is not a single phone manufacturer that is not secretly working on adding 3D capture to its devices. The most visible example today is Tango, an effort by Google to bring real-time 3D capture to mobile devices. The project started in 2012, and the first Tango-powered consumer phone, the Lenovo Phab 2 Pro, was released last summer.

Another noteworthy example is Apple, which added a second camera to the iPhone 7 Plus. Right now it’s only used for better portraits with depth of field, but the keynote clearly demonstrates the capability for 3D capture, since the optics are capable of outputting a depth map.

Depth map generated with an iPhone 7 Plus
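To make the link between a depth map and 3D capture concrete, here is a minimal sketch of how per-pixel depth values can be turned into 3D points. It assumes a simple pinhole camera model; the function name `depth_map_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, not part of any Apple or Google API, and real devices expose depth data in their own formats.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_map_to_points(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth map into 3D points (pinhole model, assumed).

    depth[v][u] is the distance along the optical axis for pixel (u, v).
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    Pixels with depth <= 0 are treated as "no measurement" and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # hole in the depth map
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth map with two valid pixels (hypothetical values).
example_depth = [
    [0.0, 2.0],
    [1.0, 0.0],
]
example_points = depth_map_to_points(example_depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

Each valid pixel becomes one point, so a single depth frame only gives the surface visible from that viewpoint. Capturing a full volume means merging many such frames taken from different positions.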

Beyond the iPhone 7 Plus release, it’s worth remembering that Apple acquired PrimeSense – the company behind the Kinect – in 2013. The Kinect was one of the first pieces of hardware able to capture 3D in real time, using infrared projection for depth mapping. It’s also worth noting that Microsoft recently announced 3D capture capabilities on Windows 10 mobile devices, although they rely on a photogrammetry-based approach.

The next phase in capture is undoubtedly going to be animated capture, also called volumetric video, offering a free viewpoint in a video scene. Various teams are working on this, most notably Microsoft and 8i. There are several options to make your own volumetric videos, including software like Mimesys, with an example below.

4D video, my son walking:

This is the closest we have gotten to capturing the world as it is, in its full 3D glory. But most importantly, this trend is going to dramatically impact the growth of the VR and AR ecosystem. Most VR content today is simply 360-degree photos or videos, the low-hanging fruit in terms of capture because it relies on 2D formats that we already know, but the industry agrees that the future of VR and AR is volumetric, offering six degrees of freedom.

I firmly believe that VR will take off thanks to user-generated content when people are able to capture volumetric scenes that matter to them: their family, their food, their things, holiday places. They’ll want to view those in VR, which offers the natural way of consuming a 3D scene.

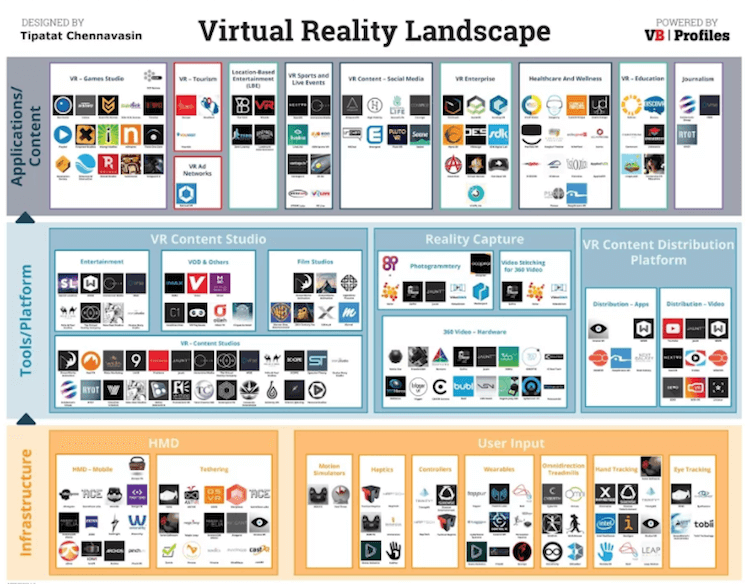

This article is part of our Virtual Reality series. You can download a high-resolution version of the landscape featuring 431 companies here.