Some of the technology we now use on a daily basis would seem unreasonably futuristic to someone living 20 years ago. IoT devices are becoming plentiful, with almost any electronic device or appliance now offering an internet connection and a host of onboard features, and the average person can access practically all the world’s information with a miniature computer that fits in their pocket.

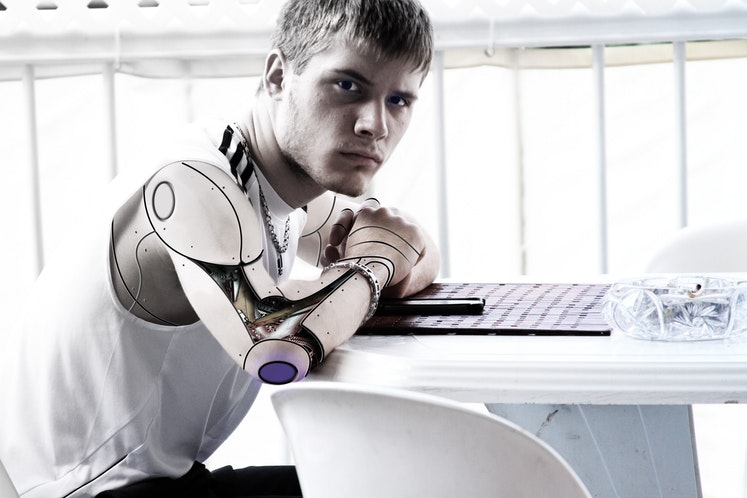

When you think about that impressive cycle of technological development, it’s not hard to imagine a future where cyborgs—human/machine hybrids previously exclusive to the realm of science fiction—walk among us. But what if those cyborgs are already here?

What Is a Cyborg?

Let’s start by defining what we mean by “cyborg.” People use the term in different contexts, but in general, it describes a being that relies on both organic and technological systems to function. The name itself is a portmanteau of “cybernetic” and “organism.”

Depictions of cyborgs in pop culture usually include telltale signs of their nature; for example, the Borg in Star Trek are shown with wires and electronics protruding from and embedded in their bodies, and the DC Comics superhero Cyborg has a body made mostly of metal. However, a cyborg need not be so obvious. If we agree that the term “cyborg” applies to any organic being that relies at least partially on technological components, the relationship doesn’t need to be 50/50, nor does it need to be visible. By that standard, almost any human being who consistently relies on some kind of technology could be described as cyborg-like.

The Case for Modern Cyborgs

Why would someone argue that today’s humans are cyborgs, even though most of us look nothing like our sci-fi counterparts?

It comes down to how we use our technology. Imagine a hypothetical scenario where you have a computer embedded in your brain. This computer is connected to the internet and can answer, entirely internally, any question that can be answered on the web. Just by thinking, you can look up the name of an actor you remember from an old movie, or refresh your memory of the lyrics to your favorite song. Because you’re accessing knowledge that exists outside your brain through an embedded technological device, most people would consider this an example of a cyborg.

But here’s the thing: we’re practically already doing this. Most of us carry a smartphone at all times, and when we have a question that needs answering, we automatically start typing it into a search engine or, if we’re home, simply ask the smart speaker conveniently nearby. Does it matter whether our dependency on technology is external or internal? If the interface were internal and subjective, existing only in our minds, would that be fundamentally different from having a device at our fingertips?

Here’s another example to consider. Imagine you have an LED screen embedded in your arm. It gives you a heads-up display (HUD) that helps you understand your current surroundings and can even guide you to your next destination. Most people would consider this a cyborg-like upgrade, yet they wouldn’t say the same about constantly relying on a GPS device. Both offer the same improved access to information, both are optional, and both are constantly available.

Add to that the rising trend of technology as a fashion accessory. Metallic enhancements like grillz are becoming more commonplace, and wearable tech like smartwatches is selling in record numbers. People are slowly starting to integrate tech with their own bodies rather than simply carrying it around, even though, by the argument above, carrying it would already be enough to qualify us as cyborgs.

Then again, most of us have an intuitive sense of what “counts” as part of us and what doesn’t. We count our hands and feet as part of our bodies and our identity, but we don’t count a tablet, because it exists outside of us. One could argue that until the technology is impossible to remove (such as a surgically implanted device), or otherwise overcomes this intuitive hurdle, we shouldn’t consider ourselves cyborgs.

Perhaps more importantly, why does this debate matter in the first place? We rely on technology to go about our daily lives whether or not anyone calls us cyborgs, so what impact could this discussion possibly have?

Ethics

Determining whether we’re cyborgs, and what it means to be one, is important for setting ethical and legal standards for the next generation. For example, consumers and political groups are becoming increasingly aware of how their data is being used and are pushing for more transparency from the companies that collect and use it. Corporate leaders argue that their products and services are purely optional: if customers aren’t willing to give up their personal data, they can choose not to use those services. But if we’re considered cyborgs, technology is a fundamental part of us and a practical necessity for living in the modern world. In that case, a cyborg would have less choice than a typical human being about which tech services to use and would therefore need greater protections.

It’s also important to consider the distinctions between cyborgs and conventional human beings now, while the technology is still in its infancy. Once we start developing cybernetic limbs that are more powerful than human limbs, we’re going to face much tougher questions. Should enhanced individuals be allowed to compete in the Olympic Games? Should there be restrictions on how they use those enhanced limbs? Should they be offered greater protections? There aren’t any clear answers to these questions, but that’s the point. Working out precise definitions and ethical dilemmas won’t help us much once we’re deep into a new era; the time to iron out these problems, and to start developing new tech responsibly, is now.

Acceptance

It’s also important to start easing people into the idea of being a cyborg. Intuitively, most people would probably find the idea of becoming a cyborg “creepy” or strange. They don’t like the idea of giving up any part of their identity, especially a part that makes them uniquely human. They might resist installing a brain-computer interface (BCI) because they want their minds to remain independent and wholly organic.

This, by itself, isn’t necessarily a problem, but it could lead to technological stagnation or wider gaps within the population. For example, if 10 percent of the population gained access to a BCI that multiplied their cognitive potential many times over, it wouldn’t take long for them to outproduce, out-earn, and otherwise dominate their technologically lagging contemporaries. Helping people see that they’re already cyborgs, and that newer enhancements wouldn’t compromise their sense of self any more than existing devices and technology do, could help narrow this gap and help us roll out important new technologies faster.

On some level, the argument is pedantic. The term “cyborg” doesn’t and possibly can’t have a formal, precise definition since there’s such a gray area in how we use technology. But we’re developing a world that’s about to be defined by technology, and if we can’t accurately assess and define our relationship with that technology, we’re never going to be able to harness it properly, let alone use it responsibly.

Regardless of how you feel, the argument that humans are already cyborgs is strong enough that some technologists have adopted the position, and that alone warrants a closer look and an open mind to the possibilities.