Written by Can Erten and edited by Richard MacManus. This is the first in a 2-part series on Read/WriteWeb, exploring the world of P2P on the Web. Part 1 (this post) is a general introduction to P2P, along with some real-world applications of P2P. Part 2 will discuss future applications.

As internet connection speeds have increased, so has the demand for web-related services. After the Web revolution, peer-to-peer networks evolved and now have a number of different uses, such as instant messaging and file sharing. Other revolutionary ideas are still being researched. People want to use peer-to-peer in many different applications, including e-commerce, education, collaborative work, search, file storage and high performance computing. In this series of posts, we will look at different peer-to-peer ideas and applications.

Introduction

Peer-to-Peer (P2P) networks have seen increasing demand from users and are now accepted as a standard way of distributing information, because their architecture is built around scalability, efficiency and performance. In its pure sense, a peer-to-peer network is decentralized, self-organized and dynamic, and it offers an alternative to the traditional client-server model of computing. Client-server architecture lets individuals connect to a server, but although servers can be scaled up, there is a limit to what they can do. In principle, P2P networks are almost unlimited in their scalability, because every peer that joins brings its own resources to the network.

In “pure” P2P systems, every node acts as both server and client, and the nodes share resources without any centralized control. However, most P2P applications have some degree of centralization. These are called “hybrid” P2P networks and they centralize at least the list of users. This is how instant messengers and file sharing programs work: the system keeps a list of users with their IP addresses.
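To make the hybrid idea concrete, here is a minimal sketch in Python. It is not how any particular messenger is implemented; the `Directory` and `Peer` classes and the in-memory `network` dictionary (standing in for real sockets) are assumptions made purely for illustration. The only centralized piece is the list of users and their addresses. The messages themselves go straight from peer to peer.

```python
class Directory:
    """Central index: the only centralized part of this hybrid sketch."""

    def __init__(self):
        self._addresses = {}  # user name -> (ip, port)

    def register(self, user, address):
        self._addresses[user] = address

    def unregister(self, user):
        self._addresses.pop(user, None)

    def lookup(self, user):
        # Returns None when the user is offline or unknown.
        return self._addresses.get(user)


class Peer:
    """A node that asks the directory where a user is, then talks to them directly."""

    def __init__(self, user, address, directory, network):
        self.user = user
        self.address = address
        self.directory = directory
        self.network = network  # hypothetical stand-in for sockets: address -> Peer
        self.inbox = []
        network[address] = self
        directory.register(user, address)

    def send(self, recipient, text):
        address = self.directory.lookup(recipient)
        if address is None:
            return False  # recipient is not in the central user list
        # Direct delivery: the directory never sees the message itself.
        self.network[address].inbox.append((self.user, text))
        return True

    def go_offline(self):
        self.directory.unregister(self.user)
        self.network.pop(self.address, None)


directory = Directory()
network = {}
alice = Peer("alice", ("10.0.0.1", 6000), directory, network)
bob = Peer("bob", ("10.0.0.2", 6000), directory, network)

delivered = alice.send("bob", "hello")
bob.go_offline()
delivered_after_logout = alice.send("bob", "still there?")
```

In this sketch the first message lands in Bob's inbox, while the second one fails because Bob is no longer in the user list. A pure P2P network would have to do without the `Directory` altogether.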

Different applications of P2P networks enable users to share computation power (distributed systems), data (file sharing) and bandwidth (using many nodes for transferring data). P2P uses individuals' computing power and resources, instead of powerful centralized servers. Because resources are shared among many peers, they tend to remain highly available. P2P is an important area of research, because it has huge potential in distributed computing. It is also important for the industry, as new business models are being created around P2P.

P2P Standards

The key goals for the architecture of P2P networks are reliability, efficiency, scalability and portability.

For the moment there are no standards for P2P application development, but standards are needed to enable interoperability. Sun has tried to provide a framework called JXTA, which is a network programming and computing platform for distributed computing. Sun was the first company to try to develop standards for P2P, but other companies will surely try to implement their own. Microsoft, Intel and IBM are investing in P2P application frameworks and systems in their research laboratories. It is an open area where no standards have been accepted yet.

Gnutella

Gnutella has been used by many applications to connect to the same network and search for files on it. It’s an open, decentralized search protocol for finding files through the peers. Gnutella is a pure P2P network, without any centralized servers.

Applications that use the same search protocol, such as Gnutella, form a compatible network. Anybody who implements the Gnutella protocol is able to search and locate files on that network. Here’s how it works. At start up, a Gnutella client will try to find at least one node to connect to. After the connection, the client requests a list of working addresses and proceeds to connect to other nodes until it reaches a quota. When the client searches for files, it sends the request to each node it is connected to. Each of those nodes then forwards the request to the other nodes it is connected to, and so on, until the request has traveled a set number of “hops” from the sender.
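The search step described above can be sketched in a few lines of Python. This is a simplified simulation, not the real Gnutella wire protocol. The `flood_search` function, the `NEIGHBORS` map and the `FILES` table are all made up for illustration, and the whole network lives in one process.

```python
from collections import deque


def flood_search(neighbors, files, start, query, max_hops):
    """Return the nodes that hold `query` and lie within `max_hops` of `start`."""
    seen = {start}  # nodes that already received the request
    hits = set()
    queue = deque([(start, 0)])
    while queue:
        node, hops = queue.popleft()
        if node != start and query in files.get(node, set()):
            hits.add(node)
        if hops == max_hops:
            continue  # hop limit reached: this node does not forward further
        for peer in neighbors.get(node, []):
            if peer not in seen:
                seen.add(peer)
                queue.append((peer, hops + 1))
    return hits


# Hypothetical five-node network: A-B, A-C, B-D, D-E.
NEIGHBORS = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A"],
    "D": ["B", "E"],
    "E": ["D"],
}
FILES = {"C": {"song.mp3"}, "E": {"song.mp3"}}

nearby = flood_search(NEIGHBORS, FILES, "A", "song.mp3", max_hops=2)
wider = flood_search(NEIGHBORS, FILES, "A", "song.mp3", max_hops=3)
```

With a limit of two hops the request from A only finds the copy on C. Raising the limit to three hops also reaches E. The hop limit is the trade-off here: a higher value finds more files but sends the request to more nodes.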

According to Wikipedia, as of December 2005 Gnutella was the third-most-popular file sharing network on the Internet, following eDonkey 2000 and FastTrack. Gnutella is thought to have approximately 2.2 million users on average, although only around 750,000 to 1,000,000 are online at any given moment.

The industry uses P2P networks in many different ways, each with a different business model and a different infrastructure. So now let’s look at some real world applications for P2P…

Instant Messaging

The first P2P applications to be adopted were instant messengers. Back in the early days of the internet, people used gopher and IRC servers for communication. These technologies could only handle a certain number of users online at the same time, so communication was delayed whenever a server approached its limits. However, the use of P2P changed the whole idea of IM. The bandwidth was shared between users, enabling faster and more scalable communication.

File Sharing

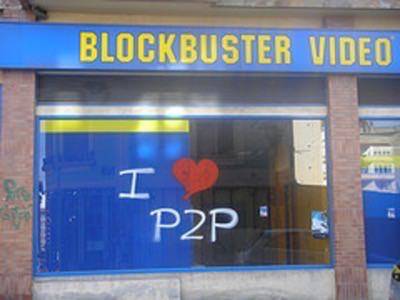

The peer-to-peer file sharing era started with Napster and continued with much more powerful applications such as Kazaa and Gnutella. These programs brought P2P into the mainstream. Although some P2P file-sharing applications have shut down because of legal issues, there is still high demand for them. Now Napster has gone ‘legit’ and there are new media P2P apps like Joost (P2P TV) arriving on the scene. We will discuss this more in the next post.

Collaborative Community

Document sharing and collaboration are really important for a company. Companies have tried to solve this problem with internal portals and collaboration servers. However, the information has to be up to date, and with portals this wasn’t always possible. Collaboration with P2P broke that barrier by using people’s computer resources instead of a centralized server.

Groove is a software product with P2P capabilities that was acquired by Microsoft in April 2005. Groove now offers Microsoft Office based solutions, mainly using P2P for document collaboration. It also supports instant messaging and integration with some video conferencing solutions. It provides user and role based security, which is one of the most important aspects of P2P for an organization. Groove also has a “relay server” to enable offline usage.

IP Telephony

Another major use of P2P is IP telephony. IP telephony is revolutionizing the way we use the internet, enabling us to call anywhere in the world for free using our computers.

Skype is a good example of P2P usage in VoIP. It was acquired by eBay in 2005. Skype was built on top of the infrastructure of the P2P file-sharing system Kazaa. Bandwidth is shared, and the real-time sound or video is shared as a resource. The main server exists only for presence information and for billing users of the system whenever they make a call that has charges (e.g. SkypeOut).

High Performance Computing – Grid Computing

High performance computing is important for scientific research or for large companies. P2P plays a role in enabling high performance computing. Sharing of resources like computation power, network bandwidth, and disk space will benefit from P2P.

Hive computing is similar: millions of computers connected to the internet can form a supercomputer, if they are successfully managed. One popular project is SETI@home (Search for Extraterrestrial Intelligence), which lets users contribute their computers’ spare processing time to the search. It is a volunteer project with more than 3.3 million users in 226 countries. It has used 796,000 years of CPU time and analyzed 45 terabytes of data in just two and a half years of operation.

Some industrial projects also exist in this area. DataSynapse charges users for the CPU cycles they use. Open source projects and community efforts also exist, like Globus and the Global Grid Forum.
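To get a feel for those numbers, here is a quick back-of-the-envelope calculation in Python. It uses only the figures quoted above and assumes, for simplicity, one CPU per machine and that all the figures cover the same period. Treat the result as a rough illustration rather than an official statistic.

```python
cpu_years = 796_000       # total CPU time used, from the figures above
wall_clock_years = 2.5    # "two and a half years of operation"
users = 3_300_000         # "more than 3.3 million users"

# How many machines would have to run non-stop to use that much CPU time?
average_machines = cpu_years / wall_clock_years

# That number as a share of the registered user base.
share_of_users = average_machines / users

print(f"{average_machines:,.0f} machine-equivalents running non-stop")
print(f"about {share_of_users:.0%} of the user base")
```

In other words, the project behaved like roughly 318,400 computers working around the clock, which is about a tenth of its user base at any given time.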

Coming soon…

In Part 2, we will explore future applications of P2P. In the meantime, let us know which real world applications you use right now and what you think of the P2P industry in general.

Image credit: RocketRaccoon