The one really big problem with file systems designed for compatibility with PCs – and by that, I mean IBM Personal Computers – truly is the “big” problem. They do not scale. As the size of databases expands far beyond the capacity of any cluster of storage devices, let alone any single device, a new class of “sharding” technologies has had to be deployed to let fragments of huge virtual volumes be stored across multiple systems. This is, in fact, what much of cloud storage technology is all about.

Two weeks ago, Microsoft acknowledged something it had hinted at during its Technology Preview for Windows 8 last September: it will be integrating a simplified form of storage pooling technology called Storage Spaces into Windows 8. Late yesterday, in an MSDN blog post, engineer Surendra Verma expanded on that theme, revealing new details about a very high-capacity file system alternative for Windows Server 8. It is based on a modified resiliency architecture that should be in-place compatible with the existing NTFS.

The concept of resilience, or resiliency, in system architecture is based on fault tolerance and on allowing for failure. As the number of volumes encompassed by a huge file increases linearly, the odds that the file survives with its continuity intact shrink exponentially. Resilience architecture works from the principle that certain failures must be fully expected, so systems must be planned for redundancy and loss avoidance.
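A rough way to see the scaling argument, assuming (as a simplification) that each volume fails independently with the same probability p over a given period: the file stays intact only if all n volumes survive, so its survival odds are (1 - p) ** n, which shrink exponentially as n grows. The function name below is hypothetical.

```python
def prob_any_failure(p: float, n: int) -> float:
    """Probability that at least one of n volumes fails, assuming each volume
    fails independently with the same probability p over the same period."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    # The file survives intact only if every one of its n volumes survives.
    survival = (1.0 - p) ** n
    return 1.0 - survival
```

With p = 0.01, for example, the chance of at least one failure is just under 10 percent at 10 volumes and about 63 percent at 100 volumes, which is why redundancy has to be designed in rather than bolted on.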

Microsoft’s new system, to debut in Windows Server 8 (which will be tested throughout 2012 and probably rolled out generally in 2013), is called ReFS. Despite how its name may sound, it is not a resurrection of WinFS, the file system Microsoft announced way back in 2003. That would have been an object file system geared for distributed search, which the company hinted at the time could eventually make the use of Google search in the enterprise unnecessary.

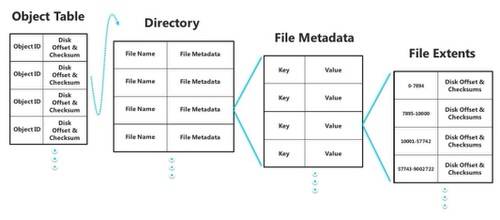

ReFS is not about metadata or object storage in the sense of representing files as objects with characteristics. Rather, it’s a multi-tier system of key/value pairs stored in B+ trees, with the pairs organized as tables. A main object table serves as the root index; it is made up of root trees, each of which represents a storage structure.
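The tiered key/value idea can be sketched roughly as follows. This is a toy model, not ReFS’s on-disk format: a sorted Python list searched with `bisect` stands in for a B+ tree (same ordered-lookup behavior, none of the node structure), and the object IDs, names, and the hypothetical `SortedTable` and `resolve` helpers are illustrative only.

```python
import bisect
from typing import Any, List, Optional, Tuple


class SortedTable:
    """Key/value table kept in key order. A sorted list stands in for the
    B+ tree a real file system would use; lookups are O(log n) via bisect."""

    def __init__(self) -> None:
        self._keys: List[Any] = []
        self._values: List[Any] = []

    def put(self, key: Any, value: Any) -> None:
        i = bisect.bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            self._values[i] = value  # key exists: replace its value
        else:
            self._keys.insert(i, key)  # keep keys sorted on insert
            self._values.insert(i, value)

    def get(self, key: Any) -> Optional[Any]:
        i = bisect.bisect_left(self._keys, key)
        if i < len(self._keys) and self._keys[i] == key:
            return self._values[i]
        return None

    def items(self) -> List[Tuple[Any, Any]]:
        return list(zip(self._keys, self._values))


# Hypothetical object table acting as the root index: object ID -> table.
object_table = SortedTable()
root_dir = SortedTable()
object_table.put(1, root_dir)          # object 1 is a directory table
root_dir.put("report.docx", 1001)      # directory row: name -> object ID
object_table.put(1001, SortedTable())  # object 1001 is the file's own table


def resolve(object_id: int) -> Optional[SortedTable]:
    """Look up an object's table through the root index."""
    return object_table.get(object_id)
```

Resolving a name therefore takes two lookups: the directory table yields an object ID, and the object table maps that ID to the object’s own table.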

A “file” in ReFS looks to the rest of the operating system like a file in NTFS. Inside the file system, however, the directory table doesn’t point directly to files, but to a B+ tree structure. That structure may consist of metadata tables that each point to separate components of the file (pictured above), or perhaps to an access control list (ACL) designating access rights and privileges.
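The indirection from directory entry to file table can be modeled loosely like this. `FileTable`, `open_file`, and the row layout are hypothetical; plain dicts and lists stand in for the B+ tree tables, and extents are assumed to be (start cluster, length) pairs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class FileTable:
    """Hypothetical per-file table: metadata rows, an extent list locating
    the file's data, and an ACL mapping principals to rights."""
    metadata: Dict[str, object] = field(default_factory=dict)
    extents: List[Tuple[int, int]] = field(default_factory=list)  # (start_cluster, length)
    acl: Dict[str, str] = field(default_factory=dict)             # principal -> rights

    def size_in_clusters(self) -> int:
        # File size is derived from the extent rows, not stored in the directory.
        return sum(length for _, length in self.extents)


# The directory table maps names to file tables, not to raw data locations.
directory: Dict[str, FileTable] = {}


def open_file(name: str) -> FileTable:
    # To the caller this looks like an ordinary lookup; internally it follows
    # one level of indirection through the file's own table.
    return directory[name]


directory["report.docx"] = FileTable(
    metadata={"read_only": False},
    extents=[(4096, 8), (10240, 16)],
    acl={"alice": "read,write", "bob": "read"},
)
```

Growing the file in this model means appending another extent row to its table; the directory entry itself does not change.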

The implication here is that growing any ReFS file, even beyond the sizes we’re becoming acquainted with now, could be a simple matter of expanding its metadata tables. In Microsoft’s new resilience architecture, the metadata that describes the identity and location of the actual segments of the file – the parts containing the real bits – is never overwritten. Or, to use the company’s terminology, metadata is never written “in place.”

“To maximize reliability and eliminate torn writes, we chose an allocate-on-write approach that never updates metadata in-place, but rather writes it to a different location in an atomic fashion,” Verma writes. “In some ways this borrows from a very old notion of ‘shadow paging’ that is used to reliably update structures on the disk. Transactions are built on top of this allocate-on-write approach. Since the upper layer of ReFS is derived from NTFS, the new transaction model seamlessly leverages failure recovery logic already present, which has been tested and stabilized over many releases.”
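Allocate-on-write in the spirit of shadow paging can be illustrated with a toy in-memory model. This is a sketch under loud assumptions, not Microsoft’s implementation: a dict of numbered slots simulates disk locations, a single root pointer is the only value ever modified, and the `crash_before_commit` flag is a hypothetical way to simulate power loss between writing the new copy and committing it.

```python
import threading
from typing import Dict


class ShadowStore:
    """Toy allocate-on-write metadata store. Each update writes a complete new
    copy of the metadata to a fresh slot, then publishes it by swapping one
    root pointer. Existing slots are never modified in place."""

    def __init__(self, initial: Dict[str, int]) -> None:
        self._slots: Dict[int, Dict[str, int]] = {0: dict(initial)}  # simulated disk locations
        self._next_slot = 1
        self._root = 0                 # the only mutable pointer
        self._lock = threading.Lock()  # serializes writers

    def read(self) -> Dict[str, int]:
        # Readers always see whichever complete version the root points to.
        return dict(self._slots[self._root])

    def update(self, changes: Dict[str, int], crash_before_commit: bool = False) -> None:
        with self._lock:
            new_copy = dict(self._slots[self._root])  # copy the current version
            new_copy.update(changes)
            slot = self._next_slot                     # allocate a fresh location
            self._next_slot += 1
            self._slots[slot] = new_copy               # write the new copy out
            if crash_before_commit:
                # Simulated power loss: the new copy is orphaned, but the root
                # still points at the old, intact version.
                return
            self._root = slot                          # commit: flip the root pointer
```

Because the old slot is never touched, a crash at any point leaves either the old version or the new one reachable, never a half-written mix. A real file system would also reclaim orphaned and superseded slots, which this sketch omits.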