Where’s your site? On a server sitting in the back room, most of us would say, or at a hosting provider across the country, or even (if you’re planning for disaster) scattered across cloud instances around the world.

The truth is we usually don’t know — and not knowing could set us up for a world of hurt.

That’s because many sites are assembled from external Web services: third-party advertising, analytics, social-media controls and newsfeeds, to name just a few. If any of those services crashes or flakes out, it can take a site down, slow it down or generally give it the appearance of suck.

Given the recent GoDaddy and Amazon EC2 East outages, and the coding problems with Facebook’s Like button that halted the loading of many client sites, administrators need to pay a lot more attention to what goes into their final site experience, because an outage or failed service can have a domino effect.

Missing The Obvious

It’s a serious issue for Web administrators, though not one that comes up in conversation until there’s a problem. In a way, it’s analogous to riding in a hot-air balloon and realizing that, oh yeah, that 50-gazillion-BTU propane torch two feet from your head will be pretty darn hot. It’s obvious when you are standing there in the basket and your scalp is getting cooked, but when you see the pretty, serene pictures of the graceful balloon in flight, it doesn’t really occur to you to wear a hat to avoid first-degree burns.

The analogy holds true for sites. Add some Twitter buttons or a Facebook Like button or two, throw in some banner ads and a little Google Analytics monitoring, and suddenly your site depends on four additional hosts to work at peak efficiency. It’s obvious when you think about it, but is it on your list of things to care about?

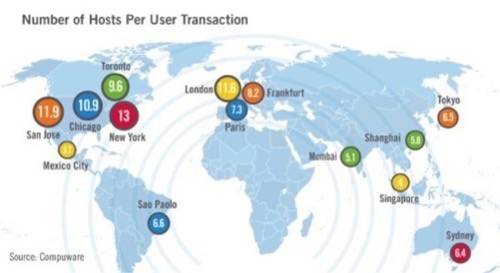

Complicating the situation further, the number of hosts a site actually touches can change depending on where the site is located. Compuware, a cloud application-monitoring company, has illustrated this neatly in the accompanying map, which shows the average number of hosts that contribute to a typical Web transaction in each location shown (based on more than 250 million Web measurements).

Most of the additional hosts are third-party services that sites in these locations tie into to deliver the full experience. With some sites dependent on 10 or more hosts, the chances of some kind of failure go up significantly, explained Stephen Pierzchala, APM technology strategist at Compuware.
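To put rough numbers on that, here is a back-of-the-envelope sketch. It assumes, simplistically, that each host fails independently and that the page only works when every host is up; the 99.9 percent per-host availability is an illustrative figure, not a Compuware measurement, and `compositeAvailability` is a hypothetical helper.

```typescript
// Back-of-the-envelope composite availability. Assumes (simplistically) that
// hosts fail independently and that the page needs every one of them.
function compositeAvailability(perHostAvailability: number, hostCount: number): number {
  if (perHostAvailability < 0 || perHostAvailability > 1) {
    throw new RangeError("perHostAvailability must be between 0 and 1");
  }
  if (!Number.isInteger(hostCount) || hostCount < 0) {
    throw new RangeError("hostCount must be a non-negative integer");
  }
  // P(all hosts up) = a^n under the independence assumption.
  return Math.pow(perHostAvailability, hostCount);
}

// 0.999 is an illustrative per-host figure, not measured data.
for (const hosts of [1, 4, 10]) {
  const a = compositeAvailability(0.999, hosts);
  console.log(`${hosts} host(s): all up ${(a * 100).toFixed(2)}% of the time`);
}
```

Under those assumptions, ten hosts at 99.9 percent each leave the page fully working only about 99.0 percent of the time, roughly ten times the downtime of a single host. Real pages often load third-party pieces asynchronously, so this is a pessimistic upper bound on the damage, not a prediction.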

A Bad Taste For Visitors

“A site is like a big pot of stew,” Pierzchala said. “If one ingredient tastes bad, it can ruin the whole stew.” And there are a lot of ingredients on most sites.

An analytics script can fail and make the site load slowly, or a content-delivery network the site uses can fail and make the site crawl for visitors in one part of the world. Or, as happened last May with a faulty Facebook Like button that admins had embedded in their sites, pages could simply stop loading after the Like button appeared, Pierzchala said.

The take-away for admins is the importance of knowing what’s on a site. What are the components and where do they come from? Just as important, who’s putting stuff on your site? If content is auto-fed to your pages from an outside provider, what happens when its server borks?
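One way to start answering "where do they come from?" is to list the hostnames behind every resource a page loads and set aside the site’s own. This minimal sketch assumes you already have that list of URLs; in a browser, `performance.getEntriesByType("resource")` returns entries whose `name` is the resource URL. Treating subdomains of the site’s own domain as first-party is an assumption, `thirdPartyHosts` is a hypothetical helper, and the hostnames in the example are made up.

```typescript
// Return the hostname of an absolute URL, or null for relative/malformed input.
function hostnameOf(raw: string): string | null {
  try {
    return new URL(raw).hostname;
  } catch {
    return null;
  }
}

// Count resources per third-party hostname. `siteHost` is the site's own
// domain; its subdomains are treated as first-party (an assumption).
function thirdPartyHosts(siteHost: string, resourceUrls: string[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const raw of resourceUrls) {
    const host = hostnameOf(raw);
    if (host === null) continue; // relative URLs point at the site itself
    if (host === siteHost || host.endsWith("." + siteHost)) continue; // first-party
    counts.set(host, (counts.get(host) ?? 0) + 1);
  }
  return counts;
}

// Hypothetical resource list for a page on example.com.
const inventory = thirdPartyHosts("example.com", [
  "https://example.com/index.css",
  "https://static.example.com/app.js",
  "/images/logo.png",
  "https://analytics.example.net/track.js",
  "https://widgets.example.org/like-button.js",
  "https://ads.example.net/banner.js",
  "https://ads.example.net/banner-2.js",
]);

for (const [host, count] of inventory) {
  console.log(`${host}: ${count} resource(s)`);
}
```

A first-party CDN on a separate domain would show up here as third-party, so the output is a starting list to review by hand rather than a verdict.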

Once you have identified the third-party providers, “take control of your third parties. Don’t let them control you,” Pierzchala emphasized.

Identify the what-ifs. Build fail-safes into your site’s code to work around failures, and set up lines of communication with service providers so that you hear about problems from them before you hear from disgruntled visitors. Knowledge is a powerful thing to have when things go awry.
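As one example of a fail-safe, a call to a third-party service can be raced against a timer, so a slow or failed provider costs one widget its content instead of holding up the page. This is a minimal sketch, assuming the provider is reached through a promise-returning function; `withFallback` and the feed stand-ins are hypothetical names, not part of any provider’s API.

```typescript
// Run a call to a third-party service, but give up after `timeoutMs` and use
// `fallback` instead. A rejection from the service also yields `fallback`.
async function withFallback<T>(task: () => Promise<T>, timeoutMs: number, fallback: T): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<T>((resolve) => {
    timer = setTimeout(() => resolve(fallback), timeoutMs);
  });
  try {
    // Whichever settles first wins; a rejection (or synchronous throw) lands in catch.
    return await Promise.race([task(), timeout]);
  } catch {
    return fallback;
  } finally {
    clearTimeout(timer); // don't leave the timer pending once we have an answer
  }
}

// Hypothetical stand-ins for a third-party newsfeed.
const hungFeed = () => new Promise<string[]>(() => {}); // never settles
const brokenFeed = () => Promise.reject<string[]>(new Error("provider down"));
const healthyFeed = () => Promise.resolve(["Headline A", "Headline B"]);

withFallback(brokenFeed, 2000, [] as string[]).then((items) => {
  console.log(items.length === 0 ? "feed unavailable; rendering page without it" : items);
});
```

The timeout only stops the page from waiting; the underlying request keeps running unless the caller also cancels it, for example with an `AbortController` whose signal is passed to `fetch`.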

Because when your site fails, all your visitors will know is that you and your brand, not some host sitting halfway across the world, disappointed them.

Images courtesy of Shutterstock and Compuware.