In part 2 of my one-on-one interview with Tim Berners-Lee, we explore a variety of topics relating to Linked Data and the Semantic Web. If you missed it, in Part 1 of the interview we covered the emergence of Linked Data and how it is being used now even by governments.

In Part 2 we discuss: how previously reticent search engines like Google and Yahoo have begun to participate in the Semantic Web in 2009, user interfaces for browsing and using data, what Tim Berners-Lee thinks of new computational engine Wolfram Alpha, how e-commerce vendors are moving into the Linked Data world, and finally how the Internet of Things intersects with the Semantic Web.

Semantic Web and Search Engines Like Google, Yahoo

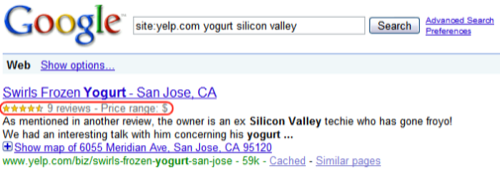

RWW: You’ve been talking about the Semantic Web for many years now. Generally the view is that Semantic Web is great in theory, but we’re still not seeing a large number of commercial web apps that use RDF (we’ve seen a number of scientific or academic ones). However we have begun to see some traction with RDFa (embedding RDF metadata into XHTML Web content), for example Google’s Rich Snippets and Yahoo’s SearchMonkey. Has the takeup of RDFa taken you by surprise?

TBL: Not really, but the takeup by the search engines is interesting. In a way I was happy to see that, it was a milestone for those things to come out of the search engines. The search engines had typically not been keen on the Semantic Web – maybe you could argue that their business is making order out of chaos, and they’re actually happy with the chaos. And if you provide them with the order, they don’t immediately see the use of it.

“The search engines have not been keen on the Semantic Web […] their business is making order out of chaos, and they’re actually happy with the chaos.”

Also I think there was misunderstanding in the search engine industry that the Semantic Web meant metadata, and metadata meant keywords, and keywords don’t work because people lie. Because traditionally in information retrieval systems, keywords haven’t proven up to the task of finding stuff on the Web. One of the reasons is that people lie, the other is that they can’t be bothered to enter keywords. So keywords have gotten a bad reputation, then metadata in general was tarred with this ‘keywords don’t work’ brush. Because a lot of Semantic Web data included metadata, then people thought that with Semantic Web data — again, that people will lie and won’t have the time to produce it.

Google rich snippets example; image credit: Matt Cutts

Now I think there’s a realization that when you’re putting data online, that people are motivated NOT to lie. For example when your band is going to produce its next album, or when your band is going to play next downtown, you’re motivated to put that information up there on the Semantic Web. There’s an awful lot of cases when actually data is really important to people; and it’s on the web anyway. So I think it’s great that some of the search engine companies are starting to read RDFa.

Does this mean that they [search engines] will start to absorb the whole RDF data model? If they do, then they will be able to start pulling all of the linked data cloud in.

“The web of linked data and the web of documents actually connect in both directions, with links.”

Will they know what to do with it? Because when it’s data in a very organized form, I think some people have been misunderstanding the Semantic Web as being something that tries to make a better search engine – i.e. when you type something into a little box. But of course the great thing about the Semantic Web is that you can query it, you can ask a complicated query of the Semantic Web, like a SQL query (we call it a SPARQL query), and that’s such a different thing to be able to do. It really doesn’t compare to a search engine.

You’ve got search for text phrases on one side (which is a useful tool) and querying of the data on the other. I think that those things will connect together a lot.

So I think people will search using a search text engine, and find a webpage. On the front of the webpage they’ll find a link to some data, then they’ll browse with a data browser, then they’ll find a pattern which is really interesting, then they’ll make their data system go and find all the things which are like that pattern (which is actually doing a query, but they’ll not realize it), then they’ll be in data mode with tables and doing statistical analysis, and in that statistical analysis they’ll find an interesting object which has a home page, and they’ll click on that, and go to a homepage and be back on the Web again.

So the web of linked data and the web of documents actually connect in both directions, with links.

User Interfaces for Semantic Content

RWW: At the recent SemTech conference, Tom Tague of Thomson Reuters’ Calais project suggested that user interfaces for semantic content are key in getting more take-up. With that in mind, I wonder if you’ve seen some great interfaces or designs for semantic applications in recent months – if so which ones and why did they impress you?

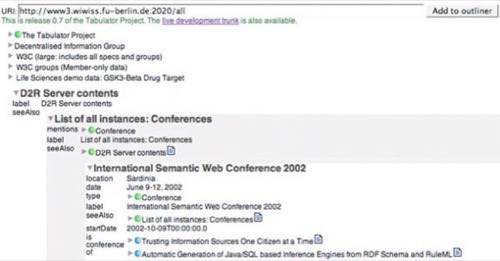

TBL: I think that whole area is very exciting at the moment. The only piece of hacking I’ve done over the past few years has been on a thing called the Tabulator [a data browser and editor], which is addressing exactly that. Partly because I wanted to be able to look at this data. And now there are lots of different ways that people need to be able to look at data. You need to be able to browse through it piece by piece, exploring the world of data. You need to be able to look for patterns of particular things that have happened. Because this is data, we need to be able to use all of the power that traditionally we’ve used for data. When I’ve pulled in my chosen data set, using a query, I want to be able to do [things like] maps, graphs, analysis, and statistical stuff.

W3C Tabulator, a data browser/editor; Image credit: wiwiss.fu-berlin.de

So when you talk about user interfaces for this, it’s really very very broad. Yes I think it’s important. There’s also the distinction we can make between the generic interfaces and the specific interfaces.

There will always be specific interfaces; for example if you’re looking at calendar data, there’s nothing else like a calendar that understands weeks, months and years. If you’re looking at a genome, it’s good to have a genetics-specific user interface.

“I want to be able to do maps, graphs, analysis, and statistical stuff.”

However you also need to be able to connect that data, through generic interfaces. So if my genome data was taken during an experiment which happened over a particular period, I need to be able to look at that in the calendar – so I can connect the genetics to the calendar.

So one of the things I hope to see is domain-specific things for various different domains, and the generic user interfaces. And hopefully the generic interfaces will be able to tie together all of the domains.

Wolfram Alpha and Natural Language Interfaces

RWW: An interesting new product was launched this year called Wolfram|Alpha, described as a ‘computational knowledge engine.’ It’s kind of a mix between Google (search) and Wikipedia (knowledge), and its key attribute is that enables you to compute something. The founders think that ‘computing’ things on the fly is something we’re going to see a lot of in future. What’s your take on Wolfram|Alpha?

TBL: There are two parts to that sort of technology. One of them is a sort of stilted natural language interface. We’ve seen those sort of natural language queries for years. Boris Katz [from W3C] created a system called START[a software system designed to answer questions that are posed to it in natural language]. I think with the Semantic Web out there, those sorts of interfaces are going to become important, very valuable, because people will be able to ask more complicated things. The search engine has traditionally been limited to just a phrase, but some of the search engines are now starting to realize that if they put data behind them and have computation engines, then you can ask things like ‘what’s this many pounds in dollars?’ and so on. So yes, those interfaces will become important.

“Those sorts of interfaces will become important […] people will be able to ask more complicated things.”

Conversational interfaces have always been a really interesting avenue. We’ve had voice browser work in W3C, that has been an interesting alternative avenue. It’s possible that as compute power goes up, we’ll see a prolifieration of machines capable of doing voice. It’ll move from the mainframe to being able to run on a laptop or your phone. As that happens, we’ll get actual voice recognition and pattern natural language at the front end. That will perhaps be an important part of the Semantic Web.

We talked before about what a great challenge the Semantic Web is going to be from a user interface point of view. Conversational interfaces are going to be part of [solving] that. Of course it’s also going to be really valuable to have compositional interfaces – for the visually impaired and so on.

Wolfram|Alpha is also a large curated database of data sets. Obviously I’m interested in the big data set which is out there, which is Linked Data. This everybody can connect to. I don’t really know a lot about the internals of Wolfram|Alpha’s data set. I don’t know whether they’re likely to put any of it out on the web as Linked Data – that might be an interesting addition. I imagine that quite a lot of it may have come from the web of Linked Data.

e-Commerce and Linked Data

RWW: There have been reports recently that both Google and Yahoo will be supporting the Good Relations ontology and linked data for e-commerce. Companies such as Best Buy are already putting out product information in RDFa. What would be your advice to e-commerce vendors right now, to help them transition to this world of structured data on the Web. The same question could be asked across many verticals, but e-commerce seems like one area which has some momentum right now. Would you advise them just to put out their data as Linked Data?

TBL: Yup! Certainly this year is the year to do it. I’ve been advising governments to do it and when you look at an enterprise, you find that a lot of the issues are the same. But when you put your data from government or enterprise out there, make sure you don’t disturb existing ecosystems. Don’t threaten those systems, because you’ve spent years building them up.

Maybe there’s an analogy with when the Web first started and the first bookshops went online. They were more or less a flyer, saying ‘hey we have a great bookshop at 23 Main St, come on down!’. Let’s say that a person named Joe owned one of these early online bookshops. If somebody had suggested to Joe that he should put his catalog online, Joe would’ve felt that that was very proprietary data. And he’d be worried that other bookshops would see where he was weak, so they’d be able to advertise themselves as filling that niche he’s weak in.

“When you put your data out there, make sure you don’t disturb existing ecosystems.”

But when his competitors Fred and Albert put their catalogs online, then Joe can check which books people are browsing at Fred and Albert’s websites. So Joe would [finally] be pursuaded to put his book catalog up online. But he doesn’t put up the prices… until Albert and/or Fred does. And even if catalog and pricing is up there, nobody puts their stock levels online. And there was a period of time when nobody [i.e. online booksellers] had their stock levels up. But people got fed up with ordering stuff that wasn’t in stock. So the first book shop to actually tell you about stock levels suddenly was then unbelievably attractive to its customers.

So there’s this syndrome of progressive competitive disclosure. This happens when people realize that if you’re going to do business with somebody, if you’re going to have your partners up and down the supply chain, really it’s useful to check the data web – and life goes much more quickly and open.

Best Buy may be what starts the ball rolling [among e-commerce vendors]. Now if I want to look out for what [products are] available, I can write a program to see what there is. If somebody wants to compete with Best Buy, to my program they’ll be invisible unless they can get their data up in RDF. Doesn’t matter whether they use RDFa or RDF XML, as long as it maps in a standard fashion to the RDF model, then they will be visible.

The Internet of Things

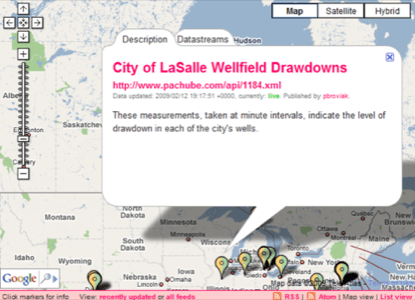

RWW: I’m fascinated by how the Internet is becoming more and more integrated into the real world. For example the Internet of Things, where everyday objects become Internet connected via sensors. Have you been following this trend closely too, and if so what impact do you think this will have on the Web in say 5 years time?

TBL: It connects very much with Semantic Web [and] with linked data. With Linked Data you’ve got the ability to give a thing a URI. So I can give a URI to my phone, and I can say that’s my phone in Linked Data. And also the company that made it can give a URI to the model of the phone. They can also put online all the specs of the phone, and then I can make a link to say that my phone is an example of that product. So now any system which is dealing with me and has access to that data will be able to figure out the sorts of things I can do with my phone, which actually is really valuable. Especially if the phone breaks.

“The Semantic Web is a web of things, conceptually. Tying an actual thing down to a part of the web is the last mile.”

The Semantic Web has already given URIs to things, and to types of things. When the things themselves have an RFID chip in them, then I think it’s a very exciting world. One can take that RFID chip, go to the Internet and find out the data about the thing. Whether we’ll be able to do that, whether the manufacturers will be open enough to allow me to turn data about the identifier of the thing into data about the thing, is yet to be seen. But it’s a very exciting idea.

Pachube, an example of the Internet of Things (see ReadWriteWeb profile)

Similarly, I’d like to be able to scan a barcode and get back nutritional information about what’s in – for example – a can of food. But we don’t have that yet. To get that sort of thing, which is very powerful, we need to build look-up systems, which allow you to translate an RFID code or a barcode into an HTTP address.

The Semantic Web is a web of things, conceptually. Tying an actual thing down to a part of the web is the last link – the last mile. Give the thing a notion of its own identity in the web.

Conclusion

RWW: The over-riding message in both Part 1 and 2 of our interview with Tim Berners-Lee, is for companies and organizations to make their data available online. Preferably as Linked Data, which uses a subset of Semantic Web technologies. But Berners-Lee noted, in Part 1 of our interview, that he’d even be happy with the data in CSV (comma separated values) format.

It’s clear that we’ve seen a lot of progress in linked data already in 2009. In upcoming posts on ReadWriteWeb, we’ll continue to track this trend and explain how organizations can contribute their data.