Guest author Kyle Samani is the cofounder and CEO of Pristine, a maker of video-based field-service solutions.

Augmented reality (AR) and virtual reality (VR) are becoming hot buzzwords in the tech ecosystem. Giant companies are placing bets and investors are pouring capital into the space.

Facebook bought Oculus for a cool $2 billion in 2014. Google has been showcasing Glass since early 2012 and continues to invest in Glass, contrary to popular belief. Microsoft, Sony, Toshiba and Samsung have announced their own smartglasses. Nearly every major venture capitalist in Silicon Valley invested in Magic Leap, and a host of startups such as Atheer, Meta, Optinvent, Recon, Vuzix, Epson, ODG, and others are also building smartglasses. The space is on fire.

But what defines and distinguishes augmented reality and virtual reality? Let’s start with Merriam-Webster:

[Augmented reality is] an enhanced version of reality created by the use of technology to overlay digital information on an image of something being viewed through a device (as a smartphone camera)

[Virtual reality is] an artificial world that consists of images and sounds created by a computer and that is affected by the actions of a person who is experiencing it

It’s very clear that Oculus is a VR technology and not AR. Similarly, it’s also very clear that everything other than Oculus listed above is not VR. But are these technologies all then AR?

Many hardware manufacturers and media would argue that most of the technologies listed above are AR. Yet most are not.

Heads Up: That’s Not Augmented Reality

Here’s where I differ with the dictionary: I believe true AR technologies superimpose an image on a user’s view of the real world. Many of the devices listed above cannot superimpose an image on reality in a way that intelligently blends the virtual objects into the real world.

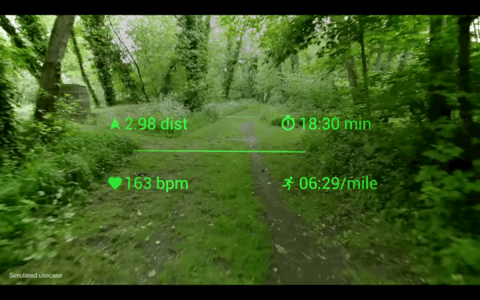

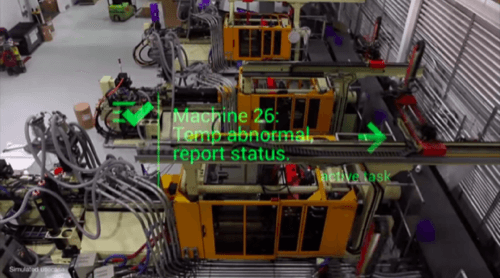

Rather, these devices simply render basic text and data that happens to be readable hands free. Those are heads-up displays, not AR devices. For example:

To me, these images do not demonstrate real AR experiences. Why do you need to see your heart rate in your direct line of sight? Is that data core to the running/cycling experience at hand, or is it just convenient, glanceable data? Why does the temperature alert need to be central to your line of sight? Why can’t it be glanceable in the upper right-hand corner of your vision?

These experiences are not AR. Instead, they are, as I jokingly like to call them, the equivalent of “gluing a phone to your face.” It sounds crude, and that’s exactly the point.

Towards A Smarter Definition Of AR

What, then, is an intelligent definition of augmented reality? Augmenting reality should imply not just showing information on the screen, but actually layering the information on top of reality in a spatially intelligent way.

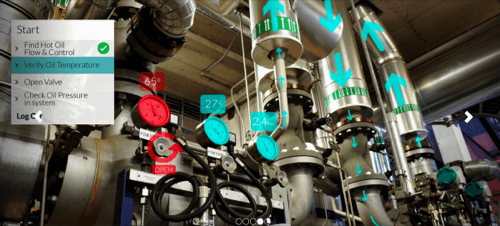

This rendering from Atheer showcases true AR—the actual layering of data on top of physical objects in a spatially aware way. The blue arrows need to be shown precisely where they are; if you walked 10 feet to the right and looked at the same series of tubes, the arrows and data would need to intelligently adjust based on your new location and line of sight. Otherwise, all of the arrows and data would be incorrect.
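To make the distinction concrete, here is a minimal sketch of why a world-anchored annotation must be recomputed as the viewer moves, while a heads-up element never changes. It assumes a simplified pinhole camera that only turns left and right; the function names, the 1280x720 display, and the focal length are all hypothetical illustration values, not taken from any real smartglasses SDK.

```python
import math

def project_to_screen(anchor, viewer_pos, yaw_deg,
                      focal_px=800.0, width=1280, height=720):
    """Project a world-space anchor (x, y, z) to pixel coordinates.

    Assumed model: a pinhole camera at viewer_pos, looking down +z when
    yaw_deg is 0 and turning toward +x as yaw_deg increases.
    Returns None when the anchor is behind the viewer.
    """
    dx = anchor[0] - viewer_pos[0]
    dy = anchor[1] - viewer_pos[1]
    dz = anchor[2] - viewer_pos[2]
    yaw = math.radians(yaw_deg)
    # Express the offset in the camera's frame (rotation about the vertical axis).
    cam_x = dx * math.cos(yaw) - dz * math.sin(yaw)
    cam_z = dx * math.sin(yaw) + dz * math.cos(yaw)
    if cam_z <= 0:
        return None  # anchor is behind the viewer, nothing to draw
    u = width / 2 + focal_px * cam_x / cam_z
    v = height / 2 - focal_px * dy / cam_z
    return (u, v)

def hud_position():
    """A heads-up element sits at a fixed screen position, whatever the viewer does."""
    return (1180, 40)

# Hypothetical arrow anchored to a pipe 5 meters in front of the starting point.
arrow_anchor = (0.0, 0.0, 5.0)

start = project_to_screen(arrow_anchor, (0.0, 0.0, 0.0), yaw_deg=0)
# Walk roughly 10 feet (about 3 meters) to the right without turning.
moved = project_to_screen(arrow_anchor, (3.0, 0.0, 0.0), yaw_deg=0)

print("AR arrow at start:", start)
print("AR arrow after moving:", moved)
print("HUD text, both cases:", hud_position())
```

The arrow's screen position shifts once the viewer steps sideways, while the heads-up text stays put. A real device would also need head pitch and roll, lens distortion, and continuous tracking of the viewer's pose, which is where most of the difficulty lies.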

But why not just list the data in bullet form in your peripheral vision? Why bother layering it on top of physical objects at all? It’s a great question that depends on the density of, type of, and use case for the information you need.

In the case of the pressure gauges above, those don’t need to be layered intelligently onto reality, although it is pretty cool to see them that way. They could just be shown in your peripheral vision as text, as follows:

- Motor 1: 65C

- Motor 2: 27C

- Motor 3: 2.4 bar

On the other hand, the directional flow arrows are spatially and visually contextual; describing the liquid flows without visual context would be far less meaningful to an operator in that facility. It could be done, but explaining the flows verbally would be significantly less useful than simply illustrating them.

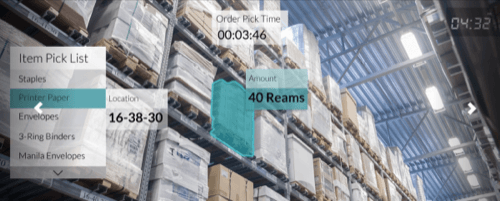

Here’s another example, courtesy of Atheer:

In this example, showing text in your peripheral vision would be inadequate. Without any visual context, how would you describe location 16-38-30? The smart text layered onto the visual context is imperative for this example to make sense.


True AR is several orders of magnitude more complicated than “gluing a phone to your face.” Why? Because true AR is predicated on elegant and accurate optics (which are not there yet), eye tracking (not integrated into any production hardware yet), and sophisticated computer vision, which in turn requires powerful processors, which in turn require better heat dissipation mechanisms and larger batteries. Practical, polished AR is still years away from commercialization.

Tricks like showing your heart rate, an ultrasound rendering, or a data feed from a pump in your line of sight don’t require any smarts. They just require some relatively basic integrations and a modest redesign of the user experience.

Why does any of this matter? We’re witnessing incredible innovation in the smart eyewear space at every level of the stack, with tech giants and startups building every conceivable type of business application.

The vast majority are still stuck at the “gluing a phone to your face” user-interaction model. That’s fine. It’s not a bad thing. It just means that enterprise buyers and the teams building applications need to understand the underlying user-experience restrictions they face and design for them accordingly.

This also means that the smartglasses space is even less than nascent. In terms of application maturity, smartglasses today are where smartphone apps were in the early 2000s. The iPhone moment has not yet arrived.

The first wave of companies is building “glue a phone to your face” applications. These apps are the low-hanging fruit. There is tremendously more value still waiting to be unleashed.

Lead image courtesy of Microsoft; other images courtesy of Pristine and Atheer