Editor’s Note: This was originally published by our partners at Kill Screen.

“Can machines think?” This is the question that Alan Turing, perhaps the first true computer scientist, posed to us almost 65 years ago and one that we’re still grappling with today as digital machines become (or rather, are made) more and more human.

But the question itself raises many more questions. Which machines do we consider? Certainly I don’t need to worry about the thoughts of my microwave or my car, right? And what even is thought? I’ve spent hours at the DMV or on Twitter considering if humans are really capable of thought.

For more stories about video games and culture, follow@killscreen on Twitter.

Turing himself readily admitted that the question itself could lead to absurd abstractions and answers. In fact, Turing thought that the question might be best replaced by an entirely different formulation, an “imitation game,” which inspired the recent movie of the same name.

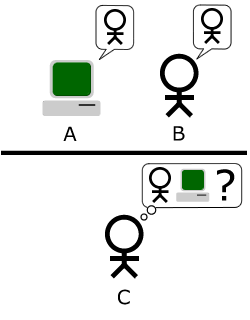

Turing proposed a game where a man (subject A) and a woman (subject B) go into separate rooms and a third party (subject C) must then determine which of the two is the woman only by typed or transcribed communications. But then Turing proposed replacing subject A with a machine. Would it be possible for a third party, the interrogator, to perform equally well against the machine?

Turing offers this as his solution to the idea of machine thought. In his 1950 article “Computing Machinery and Intelligence,” Turing proposes the beginning of what will become known as the “Turing Test” where a machine replaces the man but the woman remains. The goal of the computer is to act deceptively and direct the interrogator to the wrong choice, as the man did when he was subject A. Later, in the same article, Turing proposes another variation where a man competes with a machine in attempting to deceive a human.

See also: “Mr. Windows” Bets Big On The Mesosphere Datacenter OS

James Lipton, who serves as vice-chair and professor of Computer Science at Wesleyan University, points out that, “Turing uses the word ‘think,’ and not necessarily the words ‘have consciousness.’ He figured that astute questioning would be impossible to respond to unless there was thinking involved.”

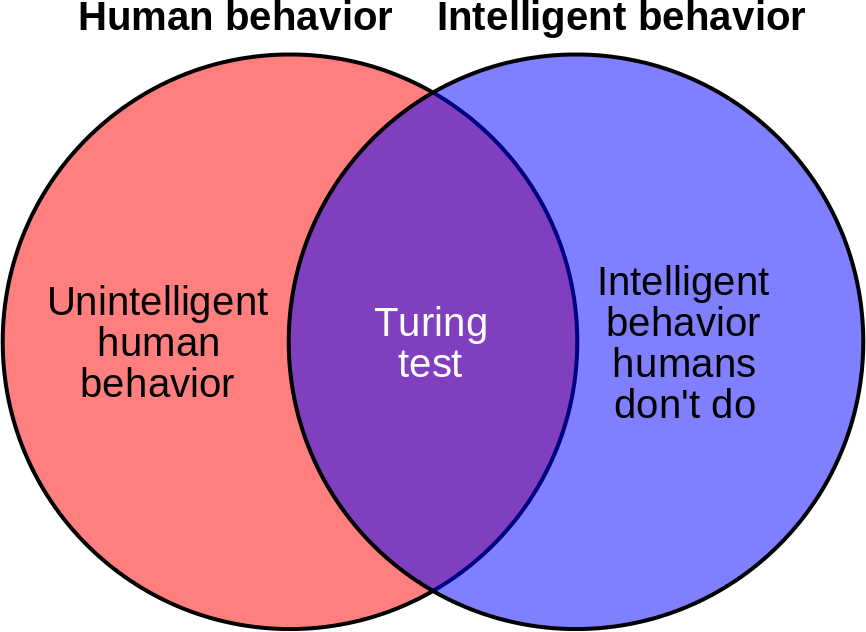

However, over time, this original test has been superseded in our collective mind by the question, “Can a machine reliably trick a human into believing that it is a human?” At the very heart of the question lies another: can a machine reliably act human? This is what most think of when they hear of the “Turing Test,” despite it never being truly stated by the man himself. This is the question that computer scientists have competed over and developed software to solve. It’s the one that has spawned chatterbots such as Eugene Goostman, PARRY, and ELIZA.

We Want To Believe

All three of these bots operate on a similar principle. Each “knows” a certain amount of real-world knowledge and uses language processing to estimate what the user has said and then respond with something somewhat sensible—thus simulating human-to-human interaction. Each also has a sort of known peculiarity that attempts to make up for the fact that as machines they may behave in peculiar ways.

ELIZA, the first of the widely known chatbots, is a therapist who answers many questions with a question or a follow up. She resists needing a large database by deflecting questions back onto the questioner. PARRY acts like a paranoid schizophrenic, providing context for outlandish or nonsensical responses. Most recently, Eugene Goostman is supposed to emulate a thirteen-year-old Ukrainian boy, and as such questioners are more likely to forgive poor grammar or responses that seem off. Instead, they can rationalize any otherness by reminding themselves he’s a young boy with a limited knowledge of the English language.

To an extent, all of these chatterbots have “passed” the Turing Test that they sought out to conquer. Many people may even consider the brief moments that they were convinced SmarterChild was an actual human until they actually asked if it was a human or not. The reality of the situation is that it is not particularly difficult to convince a human if something is a human. Because of this many have called the Turing Test into question, wondering how useful it is, considering the ease of accomplishment.

See also: Popular Coding Framework Node.js Is Now Seriously Forked

In fact, as a species we all suffer from a relatively large and inescapable facet of our own humanness—pareidolia, that is, the perception of random stimulus as significant. This is why we see a face on the moon or feel the sadness of pain au chocolat. Carl Sagan thought this was a survival measure, but most people could tell you that a baby never needs to be taught what a human is; it simply knows. As a species, we seek out order to randomness and in doing so we see humanness in a vacuum.

Winograd schemas have somewhat emerged to fill this void. These tests use pronouns that may be vague to a machine but easily deciphered by a human. One such example is that “The delivery truck zoomed by the school bus because it was going so [fast/slow]. What was going so [fast/slow]?” A human could fairly easily interpret that when “fast” is used that “it” refers to the truck and when “slow” is used then “it” refers to the bus.

University of Toronto computer scientist Hector Levesque claims that a Winograd schema test because “a machine should be able to show us it is thinking without having to pretend to be somebody.” However, Turing himself was clear that all that was required was a sufficiently advanced machine and program. In other words, Winograd schemas still don’t test intelligence, they test the ability to pass the test.

How ‘Humanness’ Is Defined

This is why it’s important to remember that this was not the test that Turing imagined; it’s one that has sort of emerged over time. The original imitation game is unlike the more common Turing Tests in that in both formulations the interrogator is not attempting to discern between a human and a machine. In the original formulation the interrogator is attempting to discern which of the two players is female, and, thus, the human player can fail. In fact, in a one-to-one test “humanness” is entirely defined by the interrogator’s preconceived notions of humanness and thus based on deception. The original two-to-one test is relational.

Since the human player can fail at attempting to either convince or deceive the interrogator they must display more than general knowledge: they must be clever. “Fooling someone of your gender requires a sophisticated knowledge of cultural cues,” said Professor Lipton. In this way a machine would have to display reason. Thus, in order for a machine to pass this imitation game it must do more than act human; it must demonstrate a level of cleverness that is, well, human.

In reaction to the Turing Test, philosopher John Searle proposed the thought experiment known as the Chinese Room. Whereas Turing considered “thought” Searle questioned whether a machine could have a “mind” or a consciousness. He proposes that given a man in a locked room with a sufficiently sophisticated dictionary or rulebook might be able to receive questions in Chinese and then respond to those questions accurately with the rulebook. Thus, the man in the room would be able to convince the interrogator that he can speak Chinese despite having no ability to comprehend or understand the meaning of the responses he is making.

Searle believes that such a program could pass a Turing Test but in no way understand what it has done. This leaves us wondering: What is the point of a machine intelligence if it cannot understand the responses it is providing? Without an understanding of semantics involved is a machine intelligent? Certainly ELIZA had no means of understanding such things; it was designed to dodge them. The same with Eugene Goostman. Both evade true understanding by misdirection and misunderstanding because they simply lack the capacity for it.

See also: How Not To Manage An Open-Source Community, Courtesy Of Docker

Further complicating the Chinese Room is that the human involved is intelligent, despite the fact that it has no way of communicating that intelligence. Professor Lipton said, “A critique of the Turing Test might be that it doesn’t get at whether or not there is a consciousness there. Does the machine have sense impressions [such as sight or touch] that it experiences? Does it see the color red? Does it have a sense of self? That may not be possible to ascertain.”

Here Lipton suggests that philosopher Thomas Nagel might be useful. In Nagel’s seminal 1974 work “What is it like to be a bat?” Nagel points out that despite our intense interrogation or investigation there may still lie an element of being a bat that is impossible for us to know. Thus, according to Lipton, “There is a first-person quality of consciousness” that the Turing Test may not be able to address.

More From Kill Screen

- Snuggle Up And Melt A Cat In Your Hot Cocoa This Winter, You Deranged Monster

- It’s Finally Happened: Ubisoft Broke Assassin’s Creed

- The Detail Is One Of The Darkest Games We’ve Ever Played

For more stories about video games and culture, follow@killscreen on Twitter.

Photo Credits – Header, Graphic, ASIMO, Venn Diagram