The integration of sensors with social networks will lead to real-time data and more useful web apps.

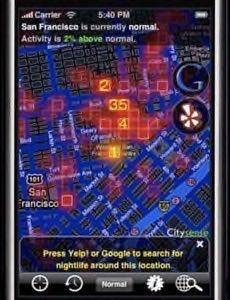

In recent posts we reviewed an MIT experiment called WikiCity, which gathered real-time location data from mobile phones in Rome and graphically mapped trends from it. We then looked at a more commercial product doing similar real-time location data analysis, called Citysense. That product aims to let users find the most popular night spots in San Francisco and the most efficient ways to get to them. The next stage for projects and products such as WikiCity and Citysense will be to enable social networking among users, with sensor data as one input.

Citysense is already heading in that direction, with the next release of its product aiming to guide “tribes” of people together using location data. It will soon be able to show not only where anonymous groups of people are in real time, but also where people whose behavioral patterns resemble yours are. To do this, Citysense will categorize people into tribes. So far, 20 tribes have been identified, including “young and edgy,” “business traveler,” “weekend mole,” and “homebody.” It will use not only GPS (location) data from mobile phones and taxis, but also publicly available company address data and demographic data from the U.S. Census Bureau.
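To make the idea of categorizing people by behavioral pattern more concrete, here is a minimal sketch in Python. It is not Citysense's actual method, which isn't described here. It assumes each person can be summarized by the share of time spent in three kinds of places (nightlife, business district, home), and it simply picks the tribe whose profile is nearest. The profile numbers and the `assign_tribe` function are invented for illustration.

```python
import math

# Hypothetical tribe profiles: share of observed time spent in
# (nightlife areas, business districts, home). Values are invented.
TRIBE_PROFILES = {
    "young and edgy": (0.6, 0.1, 0.3),
    "business traveler": (0.1, 0.7, 0.2),
    "homebody": (0.05, 0.15, 0.8),
}


def assign_tribe(profile, tribes=TRIBE_PROFILES):
    """Return the name of the tribe whose profile is closest to `profile`.

    Closeness is plain Euclidean distance between the two tuples.
    """
    return min(tribes, key=lambda name: math.dist(profile, tribes[name]))


# Someone who spends half their observed time in nightlife areas.
print(assign_tribe((0.5, 0.2, 0.3)))
```

A real system would presumably draw on many more signals, such as the company address and census data mentioned above, but the nearest-profile idea is one simple way to think about it.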

Emerging Trend: Integrating Social Networks and Sensor Networks

Sensors have become much more prevalent in mobile devices over the past few years. This means that when we talk about sensors, we’re not necessarily talking about the microchip embedded in your fridge door. Increasingly, sensors are attached to a human via their mobile phone. Both the Apple iPhone and the Nokia N95 contain GPS and accelerometer sensors. Location is determined via GPS if available, or otherwise via Wi-Fi positioning techniques.

At a recent W3C Workshop on the Future of Social Networking, held in Barcelona in January, the trend of sensors mixing with social networks was discussed. An accompanying paper entitled Integrating Social Networks and Sensor Networks provides some useful information. Again, these are mostly research projects right now, so not much has filtered into the commercial Web yet.

One application for sensors in social networks is to help people meet others, using alerts based on their location at a particular time. These alerts could be triggered either by explicit opt-in by the user or by implicit means. An example of the latter is a user receiving an alert on their mobile phone when someone they exchange messages with on a blog is in the same room. There would need to be appropriate permissions and privacy controls in place, of course, and this is one of the challenges that these applications are facing.
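Here is a rough sketch of how such a location-based alert with permission checks might work. It assumes each user has a latitude/longitude fix and an explicit list of people they have agreed to share proximity with. The `User` class, the `proximity_alerts` function, and the 25 metre radius are all hypothetical; they are not taken from the W3C paper or from any real product.

```python
import math
from dataclasses import dataclass, field

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class User:
    name: str
    lat: float
    lon: float
    # Names of people this user has opted in to share proximity with.
    shares_with: set = field(default_factory=set)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def proximity_alerts(users, radius_m=25.0):
    """Return pairs of names who are within radius_m of each other
    AND have both opted in to sharing with the other person."""
    alerts = []
    for i, a in enumerate(users):
        for b in users[i + 1:]:
            mutual = b.name in a.shares_with and a.name in b.shares_with
            if mutual and haversine_m(a.lat, a.lon, b.lat, b.lon) <= radius_m:
                alerts.append((a.name, b.name))
    return alerts


alice = User("alice", 41.9028, 12.4964, {"bob"})
bob = User("bob", 41.9029, 12.4964, {"alice"})
carol = User("carol", 41.9028, 12.4964)  # nearby, but has not opted in
print(proximity_alerts([alice, bob, carol]))
```

Requiring consent from both sides before any alert fires is one simple way to address the privacy concern; a one-sided opt-in would let a user be located by people they never agreed to share with.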

Sensors Aren’t Just About The Socializing

Social networks are often still thought of as fun, consumer applications such as Facebook, MySpace or YouTube. However, the W3C paper notes that social networking + sensors can also be used in ‘serious’ markets such as healthcare. For example, the paper suggests that collaborative rehabilitation is possible using sensor-enabled portable devices:

“More and more portable devices are supporting sensor-based interactions, from peripherals (Nike+iPod) to integrated sensors (the original iPhone made good use of its accelerometer, while the latest iPhone 3G has added various proximity and light sensors). We can make use of the Social Web and Sensor Networks to create collaborative applications for portable devices to encourage exercise, à la the Wii. As an example of how this could be done, we could begin by finding contacts on the social network with similar interests or by GPS location (e.g. using FireEagle). This social network of friends can then be used to power collaborative applications (CAPTCHAs, the ESP game, quizzes) where progress can be made by the group when a certain level of exercise has been achieved. Then, as a final step, the resulting sensor data is sent to physicians for analysis.”
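The flow described in that passage (collect sensor readings from a group of friends, unlock progress once the group reaches a certain level of exercise, then pass the data on for analysis) could be sketched roughly as follows. The paper doesn't specify an implementation, so everything here is an assumption: the `Reading` type, the use of step counts as the exercise measure, and the `summarize` function are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    member: str
    steps: int  # hypothetical step count derived from an accelerometer


def summarize(readings, group_goal):
    """Total the steps per member and check whether the group as a
    whole has reached group_goal. The returned dict is the kind of
    summary that could then be forwarded for analysis."""
    per_member = {}
    for r in readings:
        per_member[r.member] = per_member.get(r.member, 0) + r.steps
    total = sum(per_member.values())
    return {
        "per_member": per_member,
        "total": total,
        "unlocked": total >= group_goal,
    }


readings = [Reading("ana", 3000), Reading("ben", 1500), Reading("ana", 1000)]
print(summarize(readings, group_goal=5000))
```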

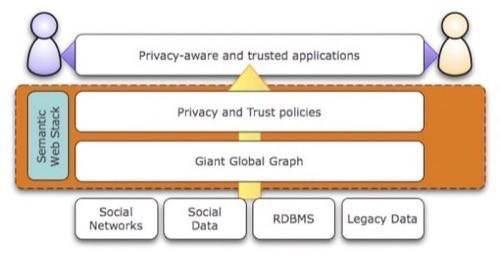

The conclusion of the W3C paper is that “the integration of sensor networks with social networks leads to applications that can sense the context of a user in much better ways and thus provides more personalized and detailed solutions.” The paper also outlines how the Semantic Web can be used to manage the interoperability between sensor networks and social networks.

Real-Time Data is Actually Useful Now

These kinds of sensor-enabled social networking applications are still far from being widespread. Citysense, after all, is limited to just one U.S. city right now (although a New York version is coming soon). But we can see how this could become the future of social networking, in a couple of ways. Firstly, for the younger generation, mobile phones will be the primary way they access and make use of their social networks. Just as kids today use Facebook and AIM and the like to organize their social activities, imagine being able to find out in an instant where all the “young and edgy” kids are hanging out in your local town on a particular Saturday afternoon by using a mobile app like Citysense or Brightkite (our pick last December for Most Promising App for 2009). Secondly, these apps hold equal promise for most other demographics, in areas such as healthcare and enterprise.

In the Web 2.0 era, real-time data has mostly been used to power fancy visualizations. It hasn’t been used extensively yet to change people’s behavior or their environment. Indeed, a current criticism of WikiCity is that it doesn’t do much more than provide nice-looking charts; hence, it’s been labeled “info porn” by some. But with the next era of web apps, we will move beyond data merely being visualized and have it start to affect people’s decisions and actions. Hopefully, this will be in a positive way, by improving people’s ability to connect with like-minded folks. There are still significant technical, social, and privacy challenges to overcome, though, before apps like Citysense and Brightkite go mainstream.