Editor’s note: This is the first in a three-part series by Alex Korth on privacy. In the next post, he will cover problem areas that originate from provider goals and market mechanics.

Today, we are witnessing the mass adoption of social networks. Roughly every tenth person on this planet uses these services to communicate with others. To satisfy human needs such as socialization and self-esteem, users visit these services, very often more than once a day. In communication, whether online or offline, people put their privacy at risk for some benefit.

In the offline world, we have learned since childhood how to do this properly within the culture we live in. We have learned how the physics of the world around us works: we know when the spoken word is being recorded and who can see us communicating with someone. In most communication situations, we perceive a level of transparency by sensing our surroundings, which lets us control who receives what we want to say.

For instance, we know how loudly to speak in a crowded, noisy room so that only our conversation partner hears us. We also know that postal workers will be able to read a postcard we send.

To communicate in a social network, we intuitively try to transfer learned social norms from the offline to the online world. Unfortunately, the transparency and the tools for control we need to maintain our privacy have no equivalent counterparts there.

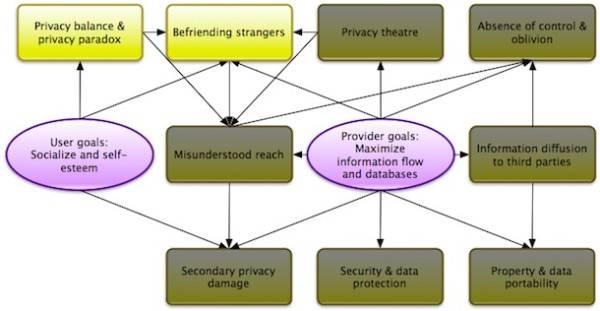

Compared to everyday offline communication, social networks bring a new party into play: the providers. The fact that providers can freely define their platforms’ rules for communication is one reason for many of the problem areas highlighted in the following. Commercial providers run social networks as ecosystems that generate content and knowledge: content about users and about other things such as locations or photos. From that content, knowledge can be generated and monetized, for example to run targeted ads. Providers must ensure that this ecosystem remains attractive to its users. Otherwise, they would stop coming back.

However, Nielsen found that users divide into heavy contributors, intermittent contributors and lurkers. This inequality becomes more pronounced the harder a feature is to use. For instance, founding and populating a group is much harder than liking something, which requires only a single click. Hence, providers design their platforms’ rules for information flow so that this rare, valuable content can be spread as broadly as possible. Any tool that chokes the flow of user content is counterproductive to this goal.

The users of a social network want to satisfy human needs. Therefore, we expose some of our personal information to receive satisfaction in return. As in the offline world, we need tools that give us transparency and control over the audience for our content. The problem is as simple as it is controversial: while users want to control the reach of their personal content, which is most likely a limiting need, providers want to spread user-generated content as widely as possible to keep up the heartbeat of their products.

In this three-post series, I want to deal with the problem areas involved in this field. As highlighted in the graphic, this post concentrates on the users’ decision-making process as well as their behavior in befriending other users and its consequences.

1: Privacy Balance and the Privacy Paradox

The privacy balance is something we adjust constantly, both online and offline. As Alan Westin observed, every time we are communicating or in public, we make adjustments between our needs for solitude and companionship, intimacy and general social intercourse, anonymity and responsible participation in society, and reserve and disclosure. In the online world, examples of the privacy balance can be found in e-commerce applications: users expose selected personal data, such as credit card details and their postal addresses, for the benefit of not having to leave their homes to shop for goods.

The privacy paradox kicks in when the satisfaction of human needs, such as belonging, self-esteem and respect by others, gets involved. Research has shown that users who claimed to protect their privacy nevertheless acted against their stated concerns by switching off privacy-preserving controls or massively exposing private information. Our decision-making process is distorted. Subconsciously, we trade off long-term privacy for short-term benefits.

2: Befriending Strangers

In most social networks, two users are granted mutual access to each other’s personal information once they have befriended each other. So far so good; friendships can be considered a proper and intuitive means to control the reach of personal information and content. However, there are three drawbacks relevant here:

- Firstly, in most social networks friendships are not qualifiable. That means that we are able to control future information flow only in a binary way: either access is granted, or it is not. There is nothing in between that would, say, let us address private content to one group of friends and business content to another. Even if a social network lets you group, tag or define lists of friends: do you actually use these features? Most don’t.

- Secondly, trust in online systems has been shown to be of lesser perceived necessity than in face-to-face encounters, encouraging people to befriend strangers as a result of disembodiment and dissociation. The problem is the lack of feedback functions or reminders that future information flow will be received by these strangers.

- Thirdly, providers exploit the second point to encourage users to befriend others. By applying principles like game mechanics, they provide an easy way to collect friends and satisfy our need for self-esteem and social inclusion, while at the same time paving the way for content to spread more broadly in the future.
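
To make the first drawback more tangible, here is a minimal Python sketch that contrasts a binary friendship check with a check based on friend lists. It does not describe any real network's implementation: the `User` class, the `lists` field and both function names are assumptions made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    friends: set[str] = field(default_factory=set)
    # Hypothetical named friend lists, e.g. {"family": {"bob"}}
    lists: dict[str, set[str]] = field(default_factory=dict)


def can_view_binary(owner: User, viewer: str) -> bool:
    """Binary model: any friend may see any item."""
    return viewer in owner.friends


def can_view_by_list(owner: User, viewer: str, audience: str) -> bool:
    """Qualified model: only friends on the named list may see the item."""
    return viewer in owner.friends and viewer in owner.lists.get(audience, set())


# Illustrative data only: one close contact, one colleague, one stranger.
alice = User(
    "alice",
    friends={"bob", "carol", "stranger42"},
    lists={"family": {"bob"}, "work": {"carol"}},
)
```

In the binary model, `can_view_binary(alice, "stranger42")` grants access to everything Alice posts, simply because the stranger was accepted as a friend. With lists, `can_view_by_list(alice, "stranger42", "family")` denies access, but only if Alice has actually taken the trouble to maintain those lists.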

How did you like the first two of the nine problem areas described here? Had you noticed these problems and pitfalls yourself? Please share your thoughts in the comments!

Photo by rpongsaj