Over 35 hours of video are uploaded to YouTube every minute, so it’s simply not feasible for YouTube to review each video before it’s posted. Instead, YouTube points to its community guidelines and relies on its users to flag videos with inappropriate content for removal. And now, a new category will let you flag videos that “promote terrorism.”

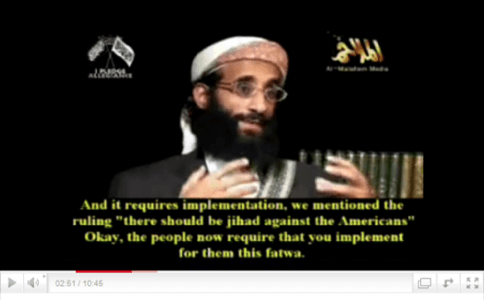

YouTube has come under increasing fire from governments demanding that the company do more to pre-screen videos that may contain terrorist propaganda, according to a story in the Los Angeles Times today. In November, YouTube removed hundreds of videos featuring the American cleric Anwar al-Awlaki after the British and U.S. governments objected to his appearance in more than 700 videos with over 3.5 million page views, claiming that the videos were linked to specific acts of violence. But even after YouTube officially removed the videos in question, other copies and related material remained on the site.

Even before this new flag, YouTube videos could be flagged for removal for containing hate speech or inciting dangerous acts. The community guidelines read that, “While it might not seem fair to say you can’t show something because of what viewers theoretically might do in response, we draw the line at content that’s intended to incite violence or encourage dangerous, illegal activities that have an inherent risk of serious physical harm or death. This means not posting videos on things like instructional bomb making, ninja assassin training, sniper attacks, videos that train terrorists, or tips on illegal street racing. Any depictions like these should be educational or documentary and shouldn’t be designed to help or encourage others to imitate them.”

So YouTube has long forbidden material that incites others to commit violence, but the new category of “promoting terrorism” makes removal a more complicated judgment call. George Washington University law professor Jeffrey Rosen says the new category is “potentially troubling” because it invites such subjective interpretation. Removing al-Awlaki’s videos calling for the killing of Americans may be an easy decision, but drawing the line between free speech (particularly religious free speech) and terrorism may prove more difficult down the road. It’s up to users to flag the videos they think promote terrorism, but YouTube will now have to decide what “promoting terrorism” really means.

Image via YouTube, Wired