The Velocity conference took place earlier this year – and some of the best and brightest in Web performance assembled in Santa Clara to chart a course of innovation in performance and operations for the next year.

One of the obvious themes this year was mobile, but it was the overarching mission of the conference that struck the truest chord: to take a more holistic view of the performance landscape, the 50,000-foot view, and think about it in a way that accounted for the relative complexity of a given operational environment, one that is now marked by “anywhere access” on a compendium of devices and platforms – mobile or otherwise.

Guest author Ed Robinson is co-founder and CEO of Aptimize, a company that produces software to accelerate websites. He is responsible for Aptimize’s business growth, revenue, product marketing, product management, and strategy. Ed has 20 years of experience in the information technology industry in both the United States and New Zealand.

But performance has long been perceived solely as a network issue, and it’s because of this narrow focus on the networks that we have gotten to a place where few understand the various layers at which our applications and websites desperately need performance improvements.

One Step Forward, Two Steps Back

In the last ten years the Internet has exploded with content: websites, intranets and Web-based applications, all coming together in an uncontrollable, rapid way. On the flip side, our networks have become incredibly fast. But the sites are growing faster than the networks are accelerating.

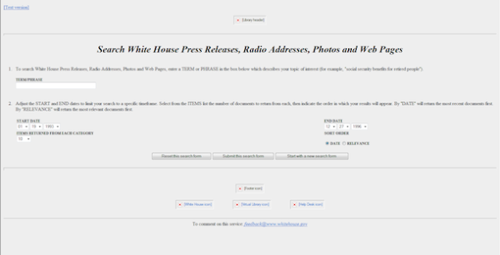

For example, if you take a look on the Wayback Machine, this is what the Whitehouse.gov site looked like in 1996.

The home page is incredibly simple. In fact, it’s so simple that it likely reminds you of the Google home page today – built for simplicity and speed.

Fast forward to today and this is what the White House website looks like:

In 14 years, the Whitehouse.gov site has become richer in every respect. It’s more engaging, has more information, more graphics, more data. The ability to design these sites has advanced, and our expectations of what a website should be have grown with it.

But here’s the kicker – the site loaded in about the same amount of time in 1996 as it does today.

In 1996, the average speed of an internet connection was 50Kbps. In 2010 the average speed of a broadband connection is 3.9Mbps – an increase of over 74 times. In theory, this should mean that the internet is 74 times faster than it was in 1996.

The Whitehouse.gov home page has grown from 22 KB in 1996 to 1.2 MB in 2010 – an increase of roughly 54 times.

Once the effects of latency and distance are factored in, the page takes about as long to load in 2010 as it did in 1996.
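To make that arithmetic concrete, here is a rough back-of-the-envelope sketch using only the figures quoted above. It assumes decimal units (1 KB = 1,000 bytes, 1 Mbps = 1,000 Kbps) and a perfectly utilized link, and it deliberately ignores latency, distance and round trips, so treat the results as an idealized lower bound rather than a measurement. The function name `transfer_seconds` is made up for illustration.

```python
# Figures quoted in the article. The unit conversions are an assumption:
# 1 KB = 1,000 bytes and 1 Mbps = 1,000 Kbps.
BITS_PER_BYTE = 8


def transfer_seconds(page_kilobytes: float, link_kbps: float) -> float:
    """Ideal time to push a page through a link, ignoring latency and round trips."""
    return page_kilobytes * BITS_PER_BYTE / link_kbps


# 1996: 22 KB page over a 50 Kbps connection.
t_1996 = transfer_seconds(page_kilobytes=22, link_kbps=50)
# 2010: 1.2 MB page over a 3.9 Mbps connection.
t_2010 = transfer_seconds(page_kilobytes=1200, link_kbps=3900)

bandwidth_growth = 3900 / 50  # how much faster the link became
page_growth = 1200 / 22       # how much heavier the page became

print(f"1996: {t_1996:.2f} s, 2010: {t_2010:.2f} s")
print(f"bandwidth x{bandwidth_growth:.0f}, page weight x{page_growth:.1f}")
```

Under these assumptions the raw transfer takes about 3.5 seconds in 1996 and about 2.5 seconds in 2010. The link is 78 times faster on the quoted figures (in line with "over 74 times"), yet almost all of that gain is consumed by a page that is roughly 54 times heavier.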

In other words: We have much better infrastructure, but the website has become so bloated that it chews it all up. We have the ability to deliver more content in the same amount of time as 1996, but we’re doing it very inefficiently. End users haven’t experienced any true acceleration in load times.

The fundamental technologies that deliver web pages actually haven’t changed much at all. Yes – HTML5, the fifth major revision of the core language of the World Wide Web, is nearly complete and many of the mainstream browsers like Firefox and Internet Explorer have already adopted it as standard.

But the way the browser processes the HTML files, JPEG images and other components of a webpage is still fundamentally the same. The way the browser connects to the host server and downloads the contents of the page has changed very little.

The importance of optimization therefore becomes obvious. If you look at Google’s home page it loads fast from everywhere in the world, and the reason is simple – it’s almost a blank page with a simple textbox on it.

But how can we make more content-centric and engaging sites like Whitehouse.gov load as fast as Google? This is where the optimization industry comes in. By optimizing websites for improved performance, we can make the Internet perform faster and feel faster than it did in 1996.

Optimization is the X-factor. It’s the only way that we can make better use of infrastructure. Regardless of how much network capacity we add, it’s clear that we are going to eat it up with larger websites, more video and richer content. The truth is that the optimization market is exploding because throwing more horsepower at the problem is not going to solve it.

Look at the 500-foot View

We have been far too focused on network-level optimization for too long. While the industry has kept its gaze on network innovation, the Web has suffered from content bloat, chatty code and ever-increasing round trips which have held Web performance back.
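The cost of round trips can be illustrated with a deliberately simple model. Everything in this sketch is an assumption made for illustration: the request counts, the 100 ms round-trip time, the six parallel connections, and the simplification that requests are served in sequential "waves" with no pipelining or caching. The helper name `round_trip_seconds` is hypothetical.

```python
import math


def round_trip_seconds(requests: int, rtt_ms: float, parallel_connections: int) -> float:
    """Time spent waiting on request round trips alone, with no payload transferred.

    Assumes each connection issues its requests one after another, so the
    page needs ceil(requests / parallel_connections) sequential waves.
    """
    waves = math.ceil(requests / parallel_connections)
    return waves * rtt_ms / 1000


# Hypothetical pages: a lean one with 5 requests and a heavy one with 90.
lean = round_trip_seconds(requests=5, rtt_ms=100, parallel_connections=6)
heavy = round_trip_seconds(requests=90, rtt_ms=100, parallel_connections=6)

print(f"lean page waits {lean:.1f} s, heavy page waits {heavy:.1f} s")
```

Note that bandwidth does not appear anywhere in this model. In this simplified view, a fatter pipe does nothing for the waiting time, while cutting the number of requests reduces it directly.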

The problem is that most Web designers, webmasters and IT managers still see optimization as an infrastructure-level function. The variety of optimization tools available today is impressive, yet we spend most of our efforts discussing broadband speeds, fiber and network optimization – which together make up just one slice of the performance spectrum.

Until we collectively accept that there’s a fundamental problem with the narrow way in which we think about delivering content and applications over the internet, we’re going to continue on the course of an Internet that loads sites at the same speed it did in 1996.

This is an awareness problem – there needs to be much more clarity around the various layers at which Web performance can be impacted.

We break optimization into three main categories:

- Network Optimization

- Website/Application Optimization

- Website Acceleration

As I mentioned, we focus almost 100% of our conversations about Web performance on the network. When a site loads in 25 seconds we naturally assume that our Internet connection is struggling, that our modem is clunky or that our cable company is unreliable.

The reality is that it’s far more likely that the site itself (on whatever server it’s sitting on) is bloated, has chatty code, serves uncompressed files or uses very large images. Furthermore, the CMS might be suffering from its own performance issues.
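As a small illustration of what uncompressed files can cost, the sketch below gzips a block of repetitive markup using Python's standard `gzip` module. The markup is invented for the example, and real pages will compress by different amounts, so read the output as an indication of the idea rather than a benchmark.

```python
import gzip

# Hypothetical markup standing in for a repetitive, uncompressed page.
html = (
    "<div class='story'><h2>Headline</h2><p>Summary text</p></div>\n" * 200
).encode("utf-8")

# Compress the payload as a server might before sending it, then confirm
# that the browser side could restore it byte for byte.
compressed = gzip.compress(html)
restored = gzip.decompress(compressed)

saving = 1 - len(compressed) / len(html)
print(f"raw: {len(html)} bytes, gzipped: {len(compressed)} bytes, saved: {saving:.0%}")
```

Text-based assets such as HTML, CSS and JavaScript tend to be repetitive, which is why compressing them is usually one of the cheaper wins at the site level.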

Our network speeds are roughly 74 times faster than the averages in 1996 – so we need to look elsewhere to determine the problem areas. This diagram spells out the core areas where performance can be impacted (either improved or causing slow-downs).

This is the 500-foot view you need to have in order to truly address performance issues. If you can zoom out from your focus on the network, you will start to identify an ever-increasing series of potential bottlenecks that are slowing your site down. A truly effective optimization strategy takes a holistic approach, accommodating improvements from end to end across this spectrum.

Sir, Step Away From the Network

If you’re finally going to get a handle on your performance challenges, the first step is to take a step back and see the forest for the trees. What you are sending across the network, and how you send it, matters as much as the network itself.

Performance challenges will never exist in convenient silos, and our approach to dealing with them should account for that. By zooming out from the network view and seeing the complete process by which your application or website is delivered to a user, you will find much greater opportunity to improve performance, please your users, and even drive better revenue for your business.

The alternative is to continue to throw network horsepower at the problem. That’s a perfectly viable strategy – for those of you hoping to keep up with the Web speeds of 1996.

But if there’s one thing to take away from this year’s Velocity conference, it’s that a lot of very bright engineers and developers have no desire to build sites and applications that remind them of 1996.

Photo by jamesdcawl