Two weeks ago, Facebook has announced a major new initiative called Facebook Open Graph. This is an attempt to not only re-imagine Facebook, but in a lot of ways, an attempt to re-define how the Web works. We wrote in details about the implications of this move for all interested parties.

A big part of the announcement is Facebook’s vision of a consumer Semantic Web. In this new world, publishers have an incentive to annotate pages by marking up activities, events, people, movies, books, music and more. The proper markup, would in turn, lead to a much more interconnected Web – people would be connected with each other across websites and around the things they are interested in.

Directionally, this vision is both correct and important. We’ve been talking about pragmatic approach to the Semantic Web for sometime, and we’re excited at the possibility of it finally happening. Yet, two weeks after the announcement it is becoming more and more apparent that there are gaps in Facebook’s offering and intentions. A close look reveals that perhaps Facebook’s intent is not to make the Web more structured, but instead to engineer a way for more data – mostly unstructured – to flow into Facebook databases.

“Instead, it appears that semantics is an afterthought in the race to capture user identity and information, in exchange for sending publishers the traffic.”

As you will see from the rest of the post, it appears that getting semantics right has not been a big priority for Facebook, at least not prior to the announcement. Here are the issues we identify:

- Open Graph Protocol does not support object disambiguation

- Open Graph Protocol does not support multiple objects on the page

- Launch partners have not implemented Open Graph Protocol correctly on their sites

- Facebook does not have the markup on its own pages that it asks the world to adopt

- A growing amount of user profile data is full of duplicates and ambiguity

Concerns with Open Graph Protocol

A week ago, we complimented Open Graph Protocol for its simplicity, but upon closer look we are seeing a couple of flaws. First of all, there is no way to disambiguate objects. For example, two movies that have the same name would be considered to be the same movie. A proper way to deal with this sort of thing is to introduce secondary attributes like director or a year that can help identify specific object, but the protocol does not define secondary attributes.

The second issue is that there is no way to markup the objects inside the page. In its current version, the protocol only supports declaring that entire page is about a person, a news event, a musician or a movie, but there is no way to identify objects inside the page. This is a big use case for bloggers and review sites – each blog post typically mentions many entities, and it would be nice to support this use case from the start.

Both of these shortcomings are easy to correct. The nice thing is that the protocol is simple and minimalistic, so adding the bits to handle disambiguation and multiple entities is straightforward. The other things that we are going to discuss, are much more troublesome

Launch Partners – Why No Markup?

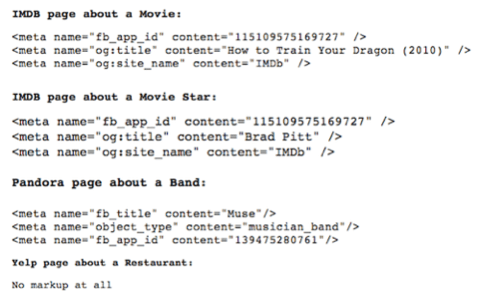

The truism of making the Web more structured is adding more markup. No matter how limited, having markup on the pages is always better than not having it. When Facebook announced the Open Graph Protocol, it highlighted several sites that are already using it. Among them were Yelp, IMDB and Pandora. We took a look to see how exactly these sites are marking up their pages. What we found is rather surprising – none of these sites implemented markup correctly. We looked at the How to Train Your Dragon movie on IMDB, Brad Pitt’s page on IMDB, the Muse page on Pandora and the Acquagrill page on Yelp.

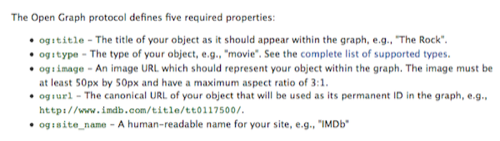

This is what Facebook defines as required properties:

And this is what we found on the actual partner pages:

So what does this mean? It means that Facebook implemented special handling for these sites. When a user likes a movie on IMDB or when she likes a movie star, Facebook can’t really tell the difference since IMDB is not passing correct information via the protocol. The only reason it works is because IMDB is explicitly hard coded by Facebook.

The roll-out for launch was not generic, but custom, targeted more towards PR than correctness. Why would Facebook allow this instead of having partners implement correct markup is unclear. It is so easy to implement, and the partner pages already have all the necessary information. We conclude that enforcing correctness was simply not a priority for the launch.

Eating Your Own Dog Food

As it turns out, not only did publishers not markup pages – neither did Facebook. At the time of writing of this post, none of the entity pages on Facebook.com have Open Graph markup. So much for being open – Facebook’s own pages remain closed. Ironically, it might not be because the company does not want to markup the pages, but instead that it can’t. At least not yet.

Figuring out what is on the page is actually not a trivial problem. This is what semantic technologies that Freebase, Powerset, Open Calais, Evri, Zemanta and GetGlue, among others, have been building over the past several years. To be able to markup the pages correctly, especially the ones created by the users, Facebook needs to run them through a sort of semantic processing and disambiguation. This isn’t a trivial matter.

Unstructured data on user profiles

All of this comes full circle to impact the users. As the Like buttons spread through the Web, so is the unstructured, duplicated data spreading through user profiles. Absence of semantics creates fragmented connections and noise around the Web.

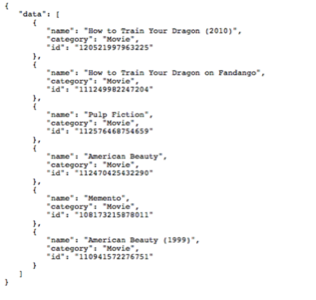

Below is the listing of movies that I liked and fetched via Facebook Open Graph API. How to Train Your Dragon shows up twice, because I liked it once on IMDB and then also on Fandango. Friends that are see on Fandango page are different from the ones I see on IMDB. And worst of all, all this uncleaned data is showing up on my profile – movie title contains a year in one case and the originating site in the other case.

So right now Facebook does not correlate things across sites. Instead, it just captures the information as is, hoping to maybe clean it up later.

Conclusion: A different goal?

All of these facts when added together lead to the obvious conclusion: Facebook’s goal is not to create a better, more structured Web. Instead, it appears that semantics is an afterthought in the race to capture user identity and information, in exchange for sending publishers the traffic.

As more and more data flows into Facebook via the Like buttons, Facebook and publishers are getting the benefit of recycling friends through the content on sites around the Web. But at the same time, the data in user profiles is becoming more and more noisy. Since not as many users are paying attention yet, it just looks silly under a closer inspection.

But to be able to power recommendations, to make social plugins a success and to facilitate good user experience, Facebook will literally need clean up its act. Duplicate and dirty data will be a big turn off for the users, and the longer this problem goes on the more difficult it is going to be for Facebook to deal with it.

We will see in coming weeks and months how the social networking giant will handle this issue. In the mean time, we’d like to reverse the tables. Please tell us what do you think about Facebook’s semantic Web ambitions. Should they have gotten the core bits right first before the launch, or is this fine and they will be able to quickly catch up?

Disclaimer: Alex Iskold is a founder and CEO of GetGlue.com, a social network for entertainment. GetGlue developed the ability to connect users across different sites through a combination of browser addons and semantic databases in the cloud.