Social news sites such as Digg, Propeller, Reddit, and StumbleUpon, where the community decides what content is worthy and what isn’t, are powerful enough to drive tens of thousands of visitors to some lucky content producers, and have thus become an incredibly valuable marketing platform. One good day on any of these sites can get you more than 60,000 visitors in less than 24 hours.

A great byproduct of the demographic of these social news sites (many of the users are content producers themselves or own or run popular blogs) is that once content is promoted to the front page, it is usually written about and commented on by hundreds of other bloggers who link to the promoted page. The end result is that not only do you get that initial burst of tens of thousands of visitors but, because of all the other people linking to you, you also rank prominently in search engines and get more long-tail traffic as well. This second effect is why some search engines are presumably, but not necessarily rationally, irked.

However, this isn’t about Google’s apparent plan to penalize sites that frequent the front pages of social news sites, or about how any such penalty would diminish everyone’s experience (good content is good content). This article is about how the next evolution of social news sites needs to give search a much more important role and integrate it much more deeply, and why traditional search engines need to understand the role of socially driven content sites and work with them, not against them.

Every single social news site not only needs to integrate with search engines (like StumbleUpon and Del.icio.us do) but also needs to be a social search engine itself. It should be similar to social search engines like Searchles (which uses tags and saves, much like Del.icio.us) and a multitude of others, but with robust social networking features and the ability to catalog all the most popular content and make it searchable (and contextually retrievable) based on users’ submitting, voting, and commenting habits as well as their relationships within the system. It should also distinguish between two different actions: “bookmarking” for future reference and long-tail importance, and “voting” for real-time relevance but not necessarily long-term value. Let me explain.

Google determines the importance of a page based on its associated PageRank.

PageRank capitalizes on the uniquely democratic characteristic of the web by using its vast link structure as an organizational tool. In essence, Google interprets a link from page A to page B as a vote, by page A, for page B.
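To make the “link as a vote” idea concrete, here is a minimal sketch of the simplified, textbook version of PageRank computed by repeated iteration. It is not Google’s production algorithm; the damping factor of 0.85, the iteration count, and the tiny three-page link graph are all assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to."""
    # Collect every page that appears as a source or as a link target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its "vote" evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its vote over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical link graph: A and C both link to B, and B links back to A.
links = {"A": ["B"], "C": ["B"], "B": ["A"]}
ranks = pagerank(links)
```

In this toy graph, B collects votes from two pages and ends up ranked highest, A is lifted by the vote it receives from the highly ranked B, and C, which nobody links to, is left with only the baseline share.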

Similarly, social news sites function when “people” (rather than pages) vote for pages using “actual votes” (by whatever name) rather than links, as other web pages do. Though they may not use the term PageRank for this process, all social news sites have their own algorithm to determine the importance of a page and whether or not to promote it to the front page (and once on the front page, whether it is important enough to be a top story of the day). In effect, search engines and social news sites are both markets for the most important and most relevant information on topics, whether you are searching based on key terms or general categories, although social news sites tend to be more transient and search engines tend not to have a community or a “popular-now” aspect to them.

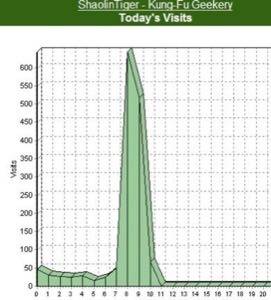

Ultimately, however, what differentiates social news sites from search engines is that even though both routinely index information and both aim to show the best information first (based on PageRank or their own algorithms), social news sites focus too much on the present day and do an incredibly poor job of indexing information for future retrieval. You can see that in practice if you look at traffic statistics for any story made popular on one of these sites. You will see a massive spike that diminishes by 50% with every page the story slips back, but is essentially zero as soon as the story is 3-4 pages deep. And search is generally so poorly implemented (for example, you can only search by title, summary, or URL, and guesswork is usually futile), and the search algorithms are so nearly useless at performing in any way intelligently, that the general user mentality is to check a few pages deep and treat whatever isn’t there as irrelevant.

Now, think for a second about a social news site and its entire index of popular content (as well as not-so-popular content) along with a search feature that works. What you get is a social news site becoming a smaller, but much more focused, search engine of its own. And why shouldn’t it be? It’s a search engine that only returns the best results (as determined by you, the community, based on one user, one vote), can return them based on time frames (popular right now versus popular-when?), can easily filter between trusted and not-trusted sources (based on popularity and how many times the community has buried content), and can behaviorally target your results and make superior recommendations (based on your past submitting, voting, commenting, and searching habits), all from the same place.
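As a rough illustration of what such a community-ranked search could look like, here is a minimal sketch. Everything in it is hypothetical: the `Story` fields, the `search` function, the 50% bury-ratio cutoff for “not-trusted” content, and the sample data are assumptions, and it leaves out personalization entirely. It only shows keyword matching over titles and tags, an optional time frame, a bury filter, and ranking by net votes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Story:
    title: str
    url: str
    submitted: datetime
    votes: int = 0
    buries: int = 0
    tags: frozenset = frozenset()


def search(stories, query, now, max_age=None, max_bury_ratio=0.5):
    """Return matching stories, best first, as judged by the community."""
    terms = query.lower().split()
    results = []
    for story in stories:
        # Match against the title and the community-supplied tags.
        haystack = (story.title + " " + " ".join(story.tags)).lower()
        if not all(term in haystack for term in terms):
            continue
        # Optional time frame: "popular right now" versus all-time.
        if max_age is not None and now - story.submitted > max_age:
            continue
        # Drop content the community has buried too often (assumed cutoff).
        total = story.votes + story.buries
        if total and story.buries / total > max_bury_ratio:
            continue
        results.append(story)
    # One user, one vote: rank by net votes.
    return sorted(results, key=lambda s: s.votes - s.buries, reverse=True)


now = datetime(2008, 1, 15)
stories = [
    Story("Python tips for beginners", "http://example.com/a",
          now - timedelta(days=400), votes=900, buries=20),
    Story("Ten Python tricks", "http://example.com/b",
          now - timedelta(hours=5), votes=150, buries=5),
    Story("Python miracle cure", "http://example.com/c",
          now - timedelta(hours=2), votes=40, buries=200),
]

all_time = search(stories, "python", now)
right_now = search(stories, "python", now, max_age=timedelta(days=1))
```

Here the all-time search surfaces the year-old story first because the community valued it most, the one-day window returns only the fresh story, and the heavily buried one never appears in either list.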

The expectations people have of socially driven content sites have evolved in terms of what they want to use them for, but the sites themselves haven’t evolved fast enough to provide a usable infrastructure for this kind of interaction. These sites aren’t just for bookmarking information or just for breaking news stories anymore. Social news sites are decision markets for determining what content (audio, video, pictures, or text) is good (based on popularity, an unfair measure of quality) and bringing that information to the masses, not just as a one-time thing, but by highlighting good content above all in an index of its own (imagine Digg Content Search, with its own refined index). In fact, most of the content on these sites nowadays isn’t actually news; it’s rich media or commentary that isn’t time-sensitive and certainly deserves to be archived for easy future retrieval.

Of course, it would be foolish to think that implementing search will fix all the problems on social news sites, but it is one of the more important things that needs to be implemented (along with recommendation engines) if the socially driven content experience is to progress further. Adding robust search would actually solve numerous other issues on these sites as well. For example, there doesn’t need to be a 24-hour limit on content shelf-life. StumbleUpon does a good job of keeping attention on good content, but even there, problems with searching (the StumbleUpon index, not StumbleUpon results in Google) persist. If at any point enough people are searching for and voting on a specific piece, it can be promoted. Also, if search on these sites is fixed, the duplicate content problem can be virtually eliminated: all the votes can be aggregated to one source and the right content can be made popular. Finally, good search ensures that stories are linked well together and that related content or related recommendations are easier to find (or people can browse content based on the current interests of the community as shown in search, almost like a combination of Stack and Swarm but actually useful).
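The duplicate-content point can be sketched in a few lines. This is only an illustration under stated assumptions: the normalization rules (lowercase the host, drop a leading `www.`, strip trailing slashes, fragments, and `utm_` tracking parameters) and the function names `canonical_url` and `aggregate_votes` are hypothetical, and a real site would need much more careful duplicate detection.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters treated as tracking noise (an assumption for this sketch).
TRACKING_PREFIXES = ("utm_",)


def canonical_url(url):
    """Reduce trivially different URLs for the same story to one form."""
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/") or "/"
    # Keep only query parameters that are not tracking parameters.
    query = urlencode([
        (key, value)
        for key, value in parse_qsl(parts.query)
        if not key.startswith(TRACKING_PREFIXES)
    ])
    # The fragment (for example "#comments") is dropped entirely.
    return urlunsplit((parts.scheme.lower(), host, path, query, ""))


def aggregate_votes(submissions):
    """Sum the votes of all submissions that point at the same story."""
    totals = defaultdict(int)
    for url, votes in submissions:
        totals[canonical_url(url)] += votes
    return dict(totals)


# Hypothetical submissions: three are really the same story.
submissions = [
    ("http://www.example.com/story/", 120),
    ("http://example.com/story?utm_source=feed", 45),
    ("http://EXAMPLE.com/story#comments", 10),
    ("http://example.com/other", 7),
]
totals = aggregate_votes(submissions)
```

With the three duplicates collapsed, the story is credited with all of its votes in one place instead of having them split across submissions that might each fall short of promotion.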

Conclusion

Social search is the future. And socially driven content sites – with their massive indexes of websites, huge communities and decent social networking features, and ample information on user habits and preferences (based on past behavior) – are in the best position to take the lead. Ultimately, imagine a combination of Digg, Google, Facebook, and Techmeme: up-to-the-minute, timely content; a large index with approximately 20,000 or more new submissions indexed per day (this works fine for a smaller, Mahalo-level search engine); the best content promoted to the top by an active community of millions of networked users; and conversation-mapping based on content promotion, comments, and search habits on the site.

This is a guest post by Muhammad Saleem, a social media consultant and a top-ranked community member on multiple social news sites. You can follow Muhammad on Twitter.